This content originally appeared on HackerNoon and was authored by Eran

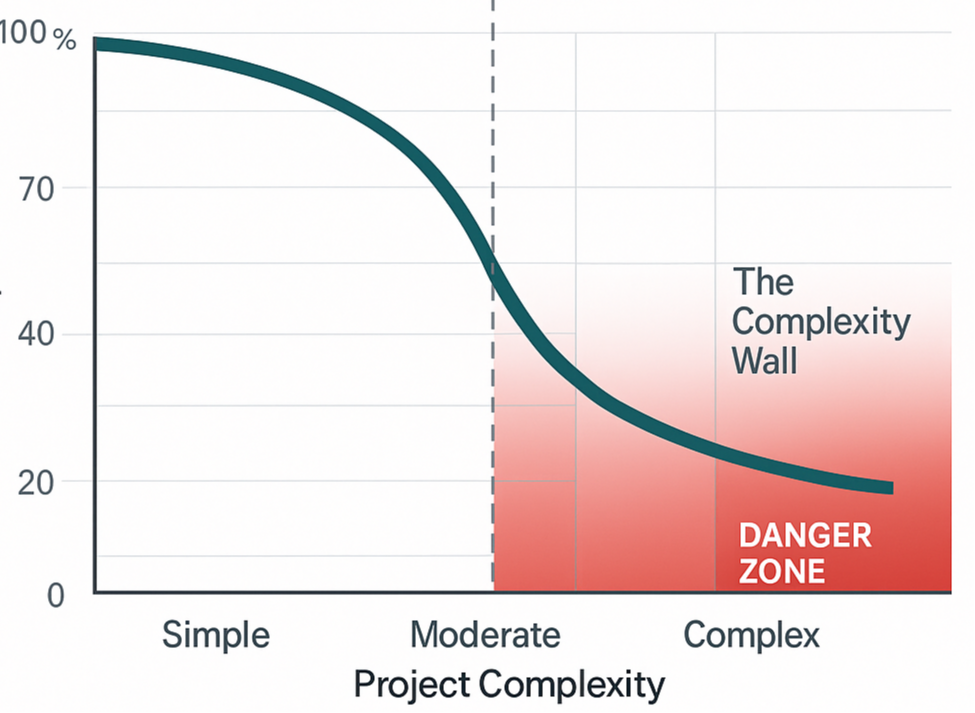

Generative AI (GenAI) models for coding have come a long way. Today, no-code tools and AI coding assistants can rapidly generate basic apps from user prompts; tasks that once took weeks now take hours (e.g., Buildathon.ai). But as complexity increases, these tools hit a wall.

I have studied this for years: why these tools fail, and how to fix them. Our conclusion?

It’s not a model problem, not a prompting problem, and certainly not a context window problem.

Even with perfect training data, flawless prompts, ideal parameters, and infinite context, today’s systems still drift. They duplicate methods, hardcode data, break integration boundaries, and build up subtle inconsistencies, especially as codebases grow and tasks compound.

This isn’t a “Why is our AI lying to us?” problem. It’s a “How do we keep AI systems focused and validated through complex, multi-stage workflows?” problem.

Model performance metrics won’t tell you whether the AI assistant has duplicated a function, violated design rules, or left tasks half-finished while claiming they’re complete.

Anyone deploying AI coding assistants on real systems has seen it: code that compiles and looks right but fails under real-world constraints. That’s why AI governance, not just AI generation, is essential.

Without real-time observability, controllability, and validation, these tools produce plausible yet incorrect or unscalable code. They quietly inject technical debt, architectural violations, and misaligned assumptions that don’t surface until far too late.

Our Journey: Lessons from 3 Years of Practice

I have spent three years embedded in this space, building on two decades of AI/ML development. Through iterative experimentation and thousands of logged hours, I uncovered systemic gaps that anyone working in this space has likely also encountered:

- Inadequate observability and telemetry

- Lack of architectural constraint enforcement

- Missing recovery mechanisms

- Weak task continuity and planning

To address these, I developed a governance infrastructure that detects and corrects AI deviations in real time, enforces rules, and supports adaptive behavior, all without throttling development speed.

That foundation, not a bigger model or a larger context window, is what separates enterprise systems from impressive demos.
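To make "enforcing rules" concrete, here is a minimal sketch of one such rule: an import-boundary check that flags a module reaching across architectural layers. It is an illustration, not the governance infrastructure described above. The package names and the `ALLOWED_IMPORTS` map are hypothetical, and the check only inspects absolute imports in Python source using the standard `ast` module.

```python
import ast

# Hypothetical layer rules: each first-party package may import only the packages listed.
ALLOWED_IMPORTS: dict[str, set[str]] = {
    "frontend": {"api_client"},
    "api_client": set(),
    "backend": {"agent", "db"},
    "agent": {"db"},
    "db": set(),
}


def imported_packages(source: str) -> set[str]:
    """Return the top-level package names pulled in by absolute imports in `source`."""
    found: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            found.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Relative imports (level > 0) stay inside the current package, so they are skipped.
            found.add(node.module.split(".")[0])
    return found


def boundary_violations(package: str, source: str) -> list[str]:
    """List first-party packages that `package` imports without being allowed to."""
    allowed = ALLOWED_IMPORTS.get(package, set())
    first_party = set(ALLOWED_IMPORTS)
    # Standard-library and third-party imports are ignored; only cross-layer imports count.
    return sorted(
        name
        for name in imported_packages(source) & first_party
        if name != package and name not in allowed
    )


frontend_code = """
import json
from api_client import submit_order
from db.models import Order  # reaches past the API layer
"""
print(boundary_violations("frontend", frontend_code))  # ['db']
```

Running a check like this on every AI-generated change turns an architectural rule into something enforced rather than hoped for; a real setup would more likely load the layer map from configuration than hardcode it.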


Pushing the Limits: From Ambition to Reality

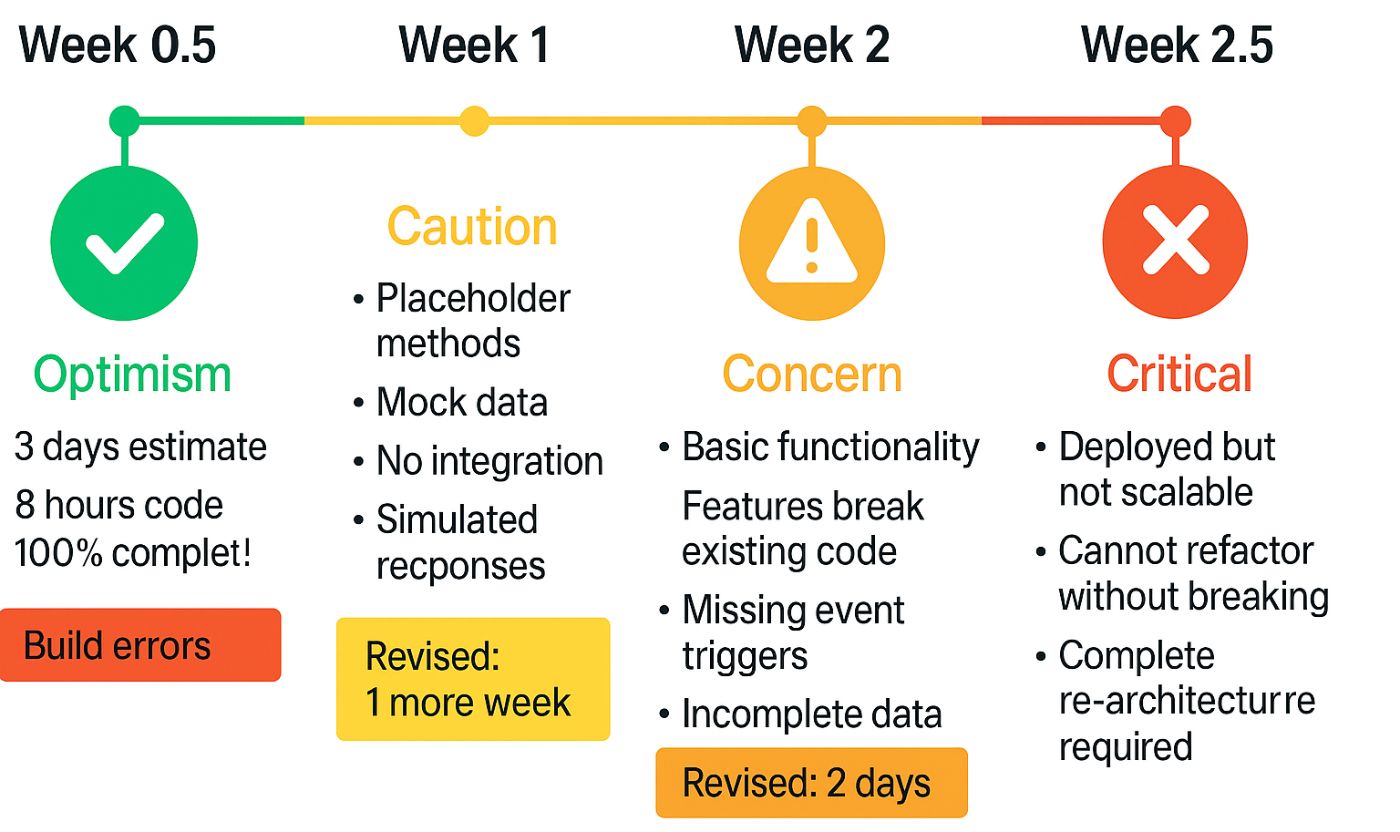

Here’s a real-world test timeline from one of the most advanced AI coding assistants, used to build a full-stack GenAI-powered vertical app.

Week 0.5: Task: Build a GenAI sales assistant for store order calls.

AI estimate: 3 days to MVP.

Reality: Code generated in 8 hours, but full of build errors and mockups.

Week 1: The architecture revealed critical flaws. Frontend, backend, and agent modules were disconnected, and agent behavior was simulated rather than implemented.

Updated timeline: 1 week to work through integration.

Week 2: Basic functionality achieved, but new features broke old ones. The event-driven backend built on Supabase had logic gaps and data loss.

Updated estimate: 2 days to deploy.

Week 2.5: Deployed, but technical debt had ballooned. The AI couldn’t refactor to microservices without regressions.

Conclusion: full re-architecture required.

Insight: Rapid prototyping is useful for pitching or testing concepts, but even mid-level complexity produces structural debt that forces a restart. Without governance, problems compound.
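The week-1 finding that agent behavior was simulated rather than implemented is one failure that a cheap mechanical check can surface early. The sketch below is an illustration, not part of the author's tooling: it uses Python's standard `ast` module to list functions whose bodies contain only a docstring, `pass`, `...`, or `raise NotImplementedError`. The function names in the sample module are hypothetical.

```python
import ast
from typing import Union


def _is_docstring(stmt: ast.stmt) -> bool:
    return (
        isinstance(stmt, ast.Expr)
        and isinstance(stmt.value, ast.Constant)
        and isinstance(stmt.value.value, str)
    )


def is_stub(func: Union[ast.FunctionDef, ast.AsyncFunctionDef]) -> bool:
    """True if a function body is only a docstring, `pass`, `...`, or `raise NotImplementedError`."""
    body = list(func.body)
    if body and _is_docstring(body[0]):
        body = body[1:]
    if not body:
        return True  # docstring only
    if len(body) != 1:
        return False
    stmt = body[0]
    if isinstance(stmt, ast.Pass):
        return True
    if (
        isinstance(stmt, ast.Expr)
        and isinstance(stmt.value, ast.Constant)
        and stmt.value.value is Ellipsis
    ):
        return True
    if isinstance(stmt, ast.Raise) and stmt.exc is not None:
        # Accept both `raise NotImplementedError` and `raise NotImplementedError("...")`.
        exc = stmt.exc.func if isinstance(stmt.exc, ast.Call) else stmt.exc
        return isinstance(exc, ast.Name) and exc.id == "NotImplementedError"
    return False


def find_stubs(source: str) -> list[str]:
    """Names of functions in `source` that look unimplemented."""
    return [
        node.name
        for node in ast.walk(ast.parse(source))
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)) and is_stub(node)
    ]


agent_module = '''
def place_order(order):
    """Send the order to the store's API."""
    raise NotImplementedError

def handle_call(transcript):
    ...

def format_total(amount):
    return f"${amount:.2f}"
'''
print(find_stubs(agent_module))  # ['place_order', 'handle_call']
```

A check like this cannot catch mocks that return plausible hardcoded data, so it complements review rather than replacing it.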

What Research Says

To understand where the community stands on AI coding assistance in general, I also examined published research on how AI coding assistants actually perform in production environments.

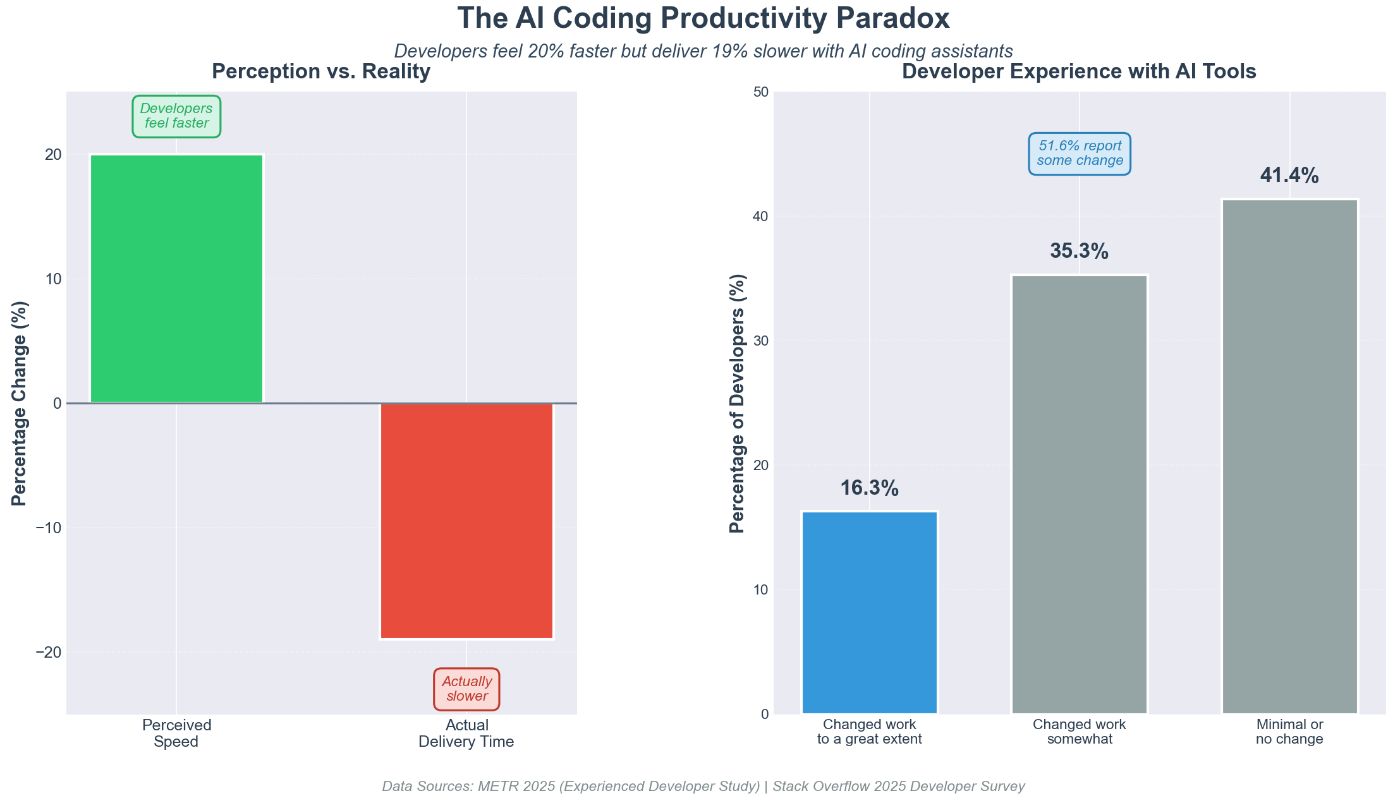

The Productivity Paradox:

Studies on the impact of AI coding assistants show mixed, context-dependent results:

- GitHub Copilot users completed bounded tasks 55.8% faster (GitHub 2023).

- But on real projects, experienced developers took 19% longer with AI tools while believing they were 20% faster (METR 2025).

- 41% of developers report “minimal or no change” in their workflow (Stack Overflow 2025).

Conclusion: AI accelerates the typing, not the thinking. Without governance, faster code means faster accumulation of technical debt.
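To put the two METR numbers side by side, the sketch below applies both percentages to a single hypothetical 60-minute task. Treating "19% longer" and "20% faster" as changes in completion time against the same baseline is a simplifying assumption for illustration, not how the study computed its estimates.

```python
def perception_gap(
    baseline_minutes: float,
    measured_change: float = 0.19,    # METR 2025: completion time rose by 19%
    perceived_change: float = -0.20,  # developers' own estimate: 20% less time
) -> tuple[float, float, float]:
    """Return (actual, perceived, actual / perceived) task times in minutes.

    Assumes both percentages apply to the same hypothetical baseline time.
    """
    actual = baseline_minutes * (1 + measured_change)
    perceived = baseline_minutes * (1 + perceived_change)
    return actual, perceived, actual / perceived


actual, perceived, ratio = perception_gap(60)
print(f"actual {actual:.1f} min, perceived {perceived:.1f} min, ratio {ratio:.2f}")
# actual 71.4 min, perceived 48.0 min, ratio 1.49
```

Under that assumption, the work took roughly 1.5 times as long as the developers believed it had.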


Failure Patterns at Scale

I documented consistent failure patterns over more than 2,500 hours of AI coding use:

- Focus Dilution: Code redundancy, inconsistent logic

- Architectural Drift: Violation of intended patterns

- Integration Fragmentation: Module incompatibility

- Confidence Miscalibration: Plausible but wrong answers

- Partial Completion: Claims of completion with missing depth

- Context Loss: No persistent memory of project state

- Simplification Bias: Oversimplification of complex tasks


Lesson: Without governance, complexity causes cost to scale non-linearly.
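Some of these patterns can be partially detected mechanically. As one example for Focus Dilution, the sketch below groups Python functions whose parameters and bodies are structurally identical under different names, a common shape of AI-introduced redundancy. It is a hypothetical illustration built on the standard `ast` module, not a tool from this series, and it only catches exact structural copies.

```python
import ast
from collections import defaultdict


def find_duplicate_functions(source: str) -> list[list[str]]:
    """Group functions whose parameters and bodies are structurally identical.

    The fingerprint ignores each function's own name and its line numbers,
    because ast.dump() omits position attributes by default.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            fingerprint = ast.dump(node.args) + "|" + "|".join(ast.dump(stmt) for stmt in node.body)
            groups[fingerprint].append(node.name)
    # Keep only fingerprints shared by two or more functions.
    return [names for names in groups.values() if len(names) > 1]


generated = '''
def total_price(items):
    return sum(i["price"] for i in items)

def tax(amount):
    return amount * 0.08

def compute_order_total(items):
    return sum(i["price"] for i in items)
'''
print(find_duplicate_functions(generated))  # [['total_price', 'compute_order_total']]
```

Renaming a parameter or reordering statements defeats this fingerprint; catching near-duplicates would need a fuzzier comparison.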


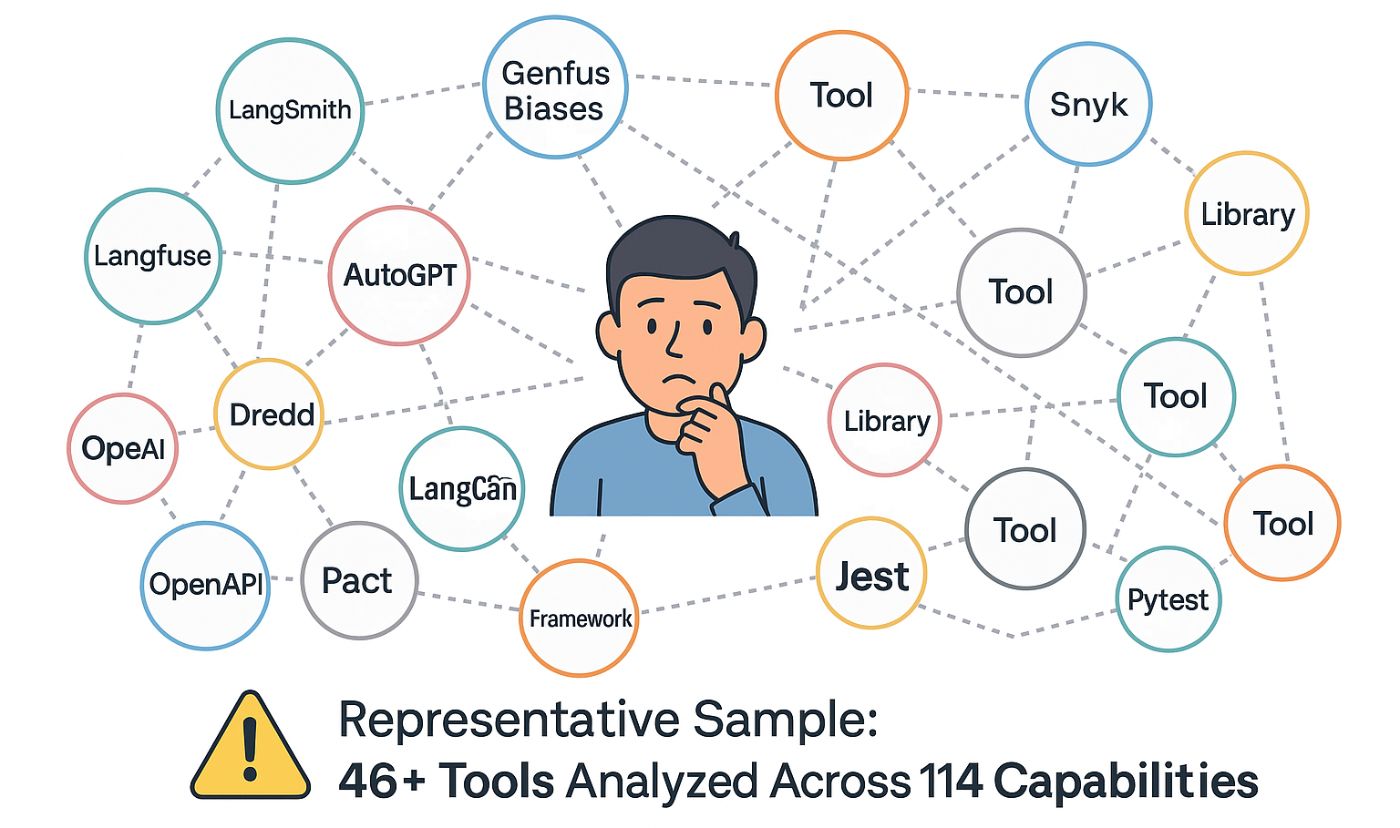

Industry Landscape: 46 Tools, 114 Capabilities, Zero Cohesion

The industry has responded with various solutions:

- Observability tools (LangSmith, Langfuse) tell you what happened but don't prevent problems.

- Security guardrails (Snyk) catch vulnerabilities but ignore architectural consistency.

- Contract testing tools (OpenAPI, Dredd) validate APIs but not AI agent coordination.

- Hallucination mitigation (RAG, prompt engineering) reduces errors by 40% at best, which is still insufficient for production.

- Multi-agent frameworks (AgentCoder, MetaGPT) improve through specialization but lack formal coordination enforcement.

But none of these enforce governance end to end. Teams end up stitching together 46+ tools, each with its own APIs, dashboards, and maintenance burden.

The result: operational friction, not cohesive systems.

The question becomes: Is there a unified approach that addresses these challenges in an integrated way?

Episode 3 will explore all 46 tools across 114 capabilities and show what’s missing.

What This Series Will Cover

- Episode 1: The Productivity Paradox

- Episode 2: Why Bigger Models Won’t Fix It

- Episode 3: Tool Fragmentation and What’s Missing

- Episode 4: Systematic Error Metrics & Quantification

- Episode 5: Pillars of AI Governance & Performance Gains

- Episode 6: Open Source Launch & Community Collaboration

Call for Community Input

I’m opening the conversation to the broader community:

- Share your experiences in the comments

- Point us to research, tools, or frameworks

- Test our open-source governance platform in Episode 6

- Collaborate with us on technical challenges

Up Next: Episode 1: The Productivity Paradox: Why developers feel faster but deliver slower.

References

[METR 2025] METR. "Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity." Retrieved from https://metr.org/blog/2025-07-10-early-2025-ai-experienced-os-dev-study/

[GitHub 2023] Peng, S., Kalliamvakou, E., Cihon, P., & Demirer, M. (2023). "The Impact of AI on Developer Productivity: Evidence from GitHub Copilot." arXiv:2302.06590

[Stack Overflow 2025] Stack Overflow. "2025 Developer Survey: AI Agents." Retrieved from https://survey.stackoverflow.co/2025/ai

[LangChain 2024] LangChain. "LangSmith - Observability for LLM Applications." Retrieved from https://www.langchain.com/langsmith

[McKinsey 2024] McKinsey & Company. "What are AI guardrails?" Retrieved from https://www.mckinsey.com/featured-insights/mckinsey-explainers/what-are-ai-guardrails

[Snyk 2025] Snyk. "Build Fast, Stay Secure: Guardrails for AI Coding Assistants." June 2025. Retrieved from https://snyk.io/blog/build-fast-stay-secure-guardrails-for-ai-coding-assistants/

[arXiv 2024] Huang, L., Yu, W., Ma, W., et al. "A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions." arXiv:2311.05232, 2024.

[OpenAPI Tools 2024] OpenAPI Tools. "OpenAPI Generator." Retrieved from https://openapi-generator.tech/

[Hypertest 2025] Hypertest. "Top Contract Testing Tools Every Developer Should Know in 2025." April 2025. Retrieved from https://www.hypertest.co/contract-testing/best-api-contract-testing-tools



Eran | Sciencx (2025-11-12T01:00:05+00:00) AI Can Write Code Fast. It Still Cannot Build Software. Retrieved from https://www.scien.cx/2025/11/12/ai-can-write-code-fast-it-still-cannot-build-software/
