This content originally appeared on Level Up Coding - Medium and was authored by Shawn John Samuel

An easy introduction to metrics in machine learning (with fun analogies from The Matrix movie)

Although this blog post is not an argument in favor of The Matrix being one of the greatest sci-fi movies of all time, I won’t be disappointed if you end up feeling that way. To be clear, similar sentiments may not be prevalent among fans about the rest of the trilogy, and in particular the latest reboot The Matrix Resurrections.

I’ll break down the basic machine learning metrics of accuracy, recall, precision, and F1, as well as the confusion matrix, Type I errors, and Type II errors, with a little mind trip through The Matrix along the way to hopefully build your intuition.

(*Edit: a short addendum on how this might apply to medical diagnostic tests like those used for COVID-19)

Isn’t Accuracy Enough?

One of the basic processes in Machine Learning is binary classification. This simply means deciding if something should have one label or another. In a supervised learning model, labeled training data will teach our model what the correct label is. Based on this, the model will learn to predict the labels for new data. How do we tell how well our model is doing? Naturally, our brain thinks about accuracy: how many did the model get right out of all its predictions? In terms of the four outcome counts explained in the next section, Accuracy = (TP + TN) / (TP + TN + FP + FN).

Accuracy is definitely important in evaluating models. However, it may not always be the best metric! Say we’re trying to flag fraudulent transactions on a credit card. If 99% of the transactions are legit, and only 1% are fraudulent, a model could just say that all transactions are legit and be 99% accurate! However, that wouldn’t be very helpful in catching the criminal. There are other metrics that may be more useful, depending on your objectives.
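The fraud example can be checked with a few lines of Python. This is a minimal sketch on made-up data (1,000 transactions, 10 of them fraudulent); the variable names are illustrative only.

```python
# Hypothetical data: 1,000 transactions, 1% fraudulent (1 = fraud, 0 = legit).
y_true = [1] * 10 + [0] * 990

# A "model" that labels every transaction as legit.
y_pred = [0] * len(y_true)

# Accuracy: correct predictions divided by all predictions.
correct = sum(t == p for t, p in zip(y_true, y_pred))
accuracy = correct / len(y_true)

# How many of the actual fraud cases the model flagged.
fraud_caught = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))

print(f"accuracy: {accuracy:.2%}")      # accuracy: 99.00%
print(f"fraud caught: {fraud_caught}")  # fraud caught: 0
```

The accuracy looks excellent even though not a single fraudulent transaction was caught.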

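Before we get into other metrics and the confusion matrix, what do the acronyms TP, TN, FP, and FN mean? They stand for True Positive, True Negative, False Positive, and False Negative: the four possible outcomes of a binary prediction, and the counts from which accuracy and the other metrics in this post are computed. First, we need to address something that usually confuses people quite a bit: Type I and Type II errors (also known as False Positives and False Negatives).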

Type I and Type II Errors

OK, back to our movie reference. Did you know that there is a very interesting theory that Agent Smith is actually ‘The One’? For most fans, who don’t believe this to be true, claiming Mr. Smith is ‘The One’ would be a Type I error, or False Positive (FP): predicting the positive label for something that is actually negative.

One of the primary tensions introduced early in the story is Neo trying to figure out if he is indeed ‘The One’. This becomes especially difficult when he is told by the Oracle, apparently a very wise seer, that he is not ‘The One’. If we assume that he indeed was ‘The One’ (even at that time), then what the Oracle told him would be considered a Type II error, or False Negative (FN): predicting the negative label for something that is actually positive.
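To make the four outcomes concrete, here is a small sketch that names the outcome of a single prediction. The function `outcome` and its string labels are hypothetical helpers for illustration, not part of any library.

```python
def outcome(actual: bool, predicted: bool) -> str:
    """Name the outcome of a single binary prediction."""
    if predicted and actual:
        return "TP"
    if predicted and not actual:
        return "FP (Type I error)"
    if not predicted and actual:
        return "FN (Type II error)"
    return "TN"

# Claiming Agent Smith is 'The One' when (as most fans believe) he is not.
print(outcome(actual=False, predicted=True))

# The Oracle telling Neo he is not 'The One', assuming he actually is.
print(outcome(actual=True, predicted=False))
```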

Bring on the (Confusion) Matrix!

So with False Positive (FP) and False Negative (FN) settled, understanding a confusion matrix becomes a lot easier. To get us started let me go ahead and ask — what is a matrix?

In mathematics, a matrix (plural matrices) is a rectangular array or table of numbers, symbols, or expressions, arranged in rows and columns. (Wikipedia)

Early in my data science pursuit, I encountered an important version of this called a confusion matrix. Admittedly, my initial attempts to quickly understand this important tool left me (for lack of a better word) confused. (Side note: the “confusion” refers to how often the model confuses one label for the other.) For binary classification it is a two-by-two table with the actual labels along one axis and the predicted labels along the other, so its four cells hold the counts of TP, FP, FN, and TN. Let’s look at a depiction of what a confusion matrix would convey:

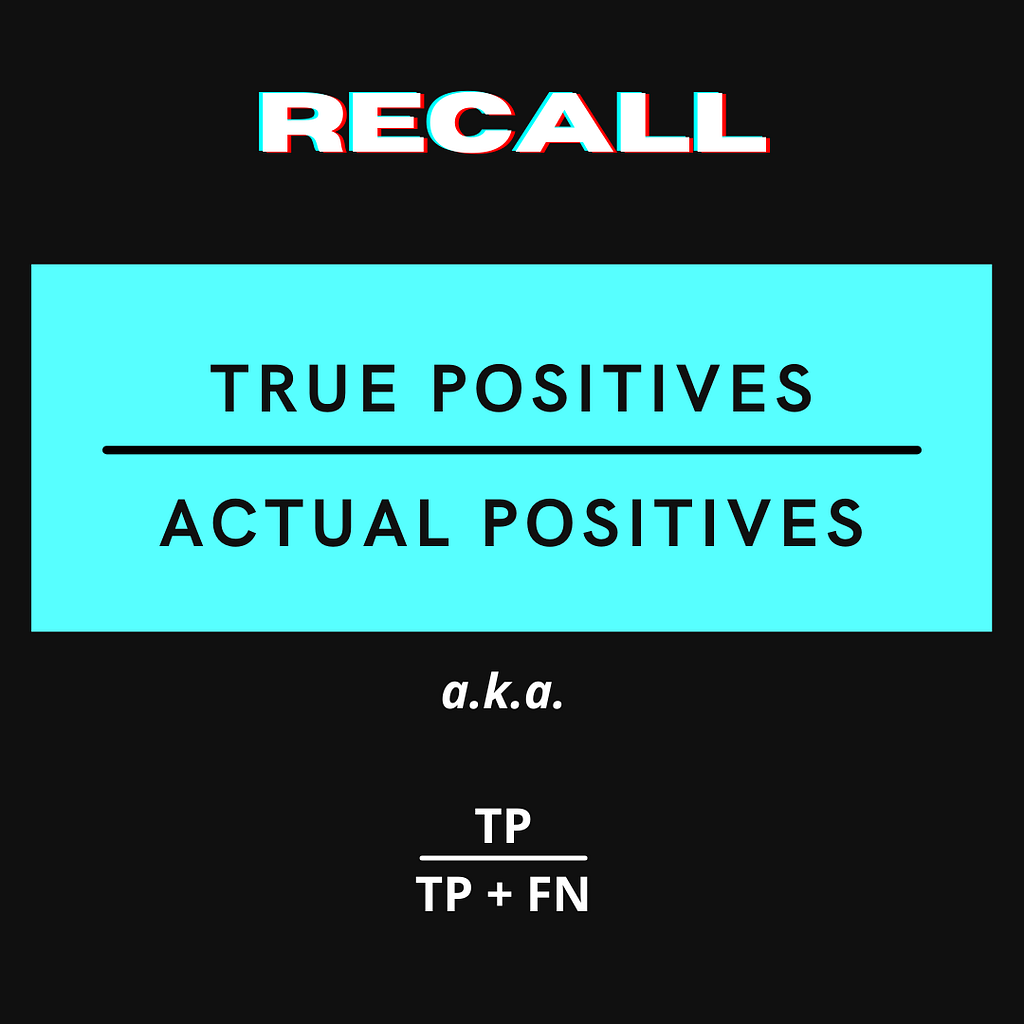
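The four cells can be counted directly from a list of actual labels and a list of predicted labels. The sketch below uses made-up labels and a hypothetical helper, `confusion_counts`; it assumes 1 marks the positive class and 0 the negative class.

```python
from collections import Counter

def confusion_counts(y_true, y_pred):
    """Count the four outcomes for binary labels (1 = positive, 0 = negative)."""
    pairs = Counter(zip(y_true, y_pred))  # keys are (actual, predicted)
    return {
        "TP": pairs[(1, 1)],  # actually positive, predicted positive
        "FN": pairs[(1, 0)],  # actually positive, predicted negative
        "FP": pairs[(0, 1)],  # actually negative, predicted positive
        "TN": pairs[(0, 0)],  # actually negative, predicted negative
    }

# Hypothetical labels for eight examples.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]

cm = confusion_counts(y_true, y_pred)
print(cm)  # {'TP': 2, 'FN': 1, 'FP': 1, 'TN': 4}
```

Every metric discussed in this post is just a ratio of these four counts.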

Recall

Well, this isn’t really helpful without a movie reference, right? So let’s take Morpheus for example. He was told by the Oracle that he would find ‘The One’. In essence, he was a binary classification algorithm that should be able to label ‘The One’ based on a given set of human features.

What would be worse for Morpheus? To think that someone is ‘The One’, but then find out they actually aren’t (FP), or to think that someone is not ‘The One’ and then doom humanity to its utter demise because he was wrong (FN)? In this case, since there is only one ‘The One’, the FN is more costly. This is when we need to be concerned about having a higher Recall score. Here’s the formula: Recall = TP / (TP + FN). In words: of everything that is actually positive, what fraction did the model find?

The fraudulent transaction example above would be a real-world case where we would want a high Recall score. The cost of labeling a transaction that is fraud as not fraud (FN) is much higher than labeling a transaction that is not fraud as fraud (FP). A high Recall score means the model misses few of the actual positives, that is, it has few False Negatives.
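As a rough illustration, Recall can be computed from the TP and FN counts. The counts below are invented, and returning 0 when there are no actual positives is a convention chosen for this sketch.

```python
def recall(tp: int, fn: int) -> float:
    """Recall = TP / (TP + FN); returns 0.0 if there are no actual positives."""
    actual_positives = tp + fn
    return tp / actual_positives if actual_positives else 0.0

# Hypothetical fraud detector: 10 fraudulent transactions, 8 caught, 2 missed.
print(recall(tp=8, fn=2))   # 0.8

# The "everything is legit" model catches none of the 10.
print(recall(tp=0, fn=10))  # 0.0
```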

Precision

Let’s look at another person who received a prophecy from the Oracle. Trinity was told she would fall in love with ‘The One’. She’s certain Neo must be ‘The One’, as she is developing strong feelings for him. However, this all gets very confusing when Neo tells her that he thinks (keyword: thinks) the Oracle told him he isn’t ‘The One’. There is an epic scene when Neo is dead in the Matrix and is about to die in the real world as well. Trinity sticks with him in the real world at great risk to herself and declares her love for Neo with a kiss.

At the risk of gross oversimplification, if we take this kiss to be a binary classifier that will either label the receiver as truly being ‘The One’ or not, what is important to Trinity? The goal would be to decrease the chances of an FP. In this case, the kiss was precise, saving Neo, Trinity, and presumably all of humanity. Here’s the formula for Precision: Precision = TP / (TP + FP). In words: of everything the model labeled positive, what fraction really is positive?

A real-world example of needing a high Precision score is email spam labeling. If your email spam filter has a low precision score, it is more likely to label an email that is not spam as spam (FP). A high Precision score would be correlated with fewer False Positives.
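Here is a similar sketch for Precision, using invented counts for a spam filter. Returning 0 when the model makes no positive predictions is a convention chosen for this example.

```python
def precision(tp: int, fp: int) -> float:
    """Precision = TP / (TP + FP); returns 0.0 if nothing was predicted positive."""
    predicted_positives = tp + fp
    return tp / predicted_positives if predicted_positives else 0.0

# Hypothetical spam filter: 50 emails flagged, 45 really spam, 5 legitimate.
print(precision(tp=45, fp=5))   # 0.9

# A sloppier filter: 50 flagged, only 25 really spam.
print(precision(tp=25, fp=25))  # 0.5
```

With the second filter, half of everything in the spam folder is mail you actually wanted.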

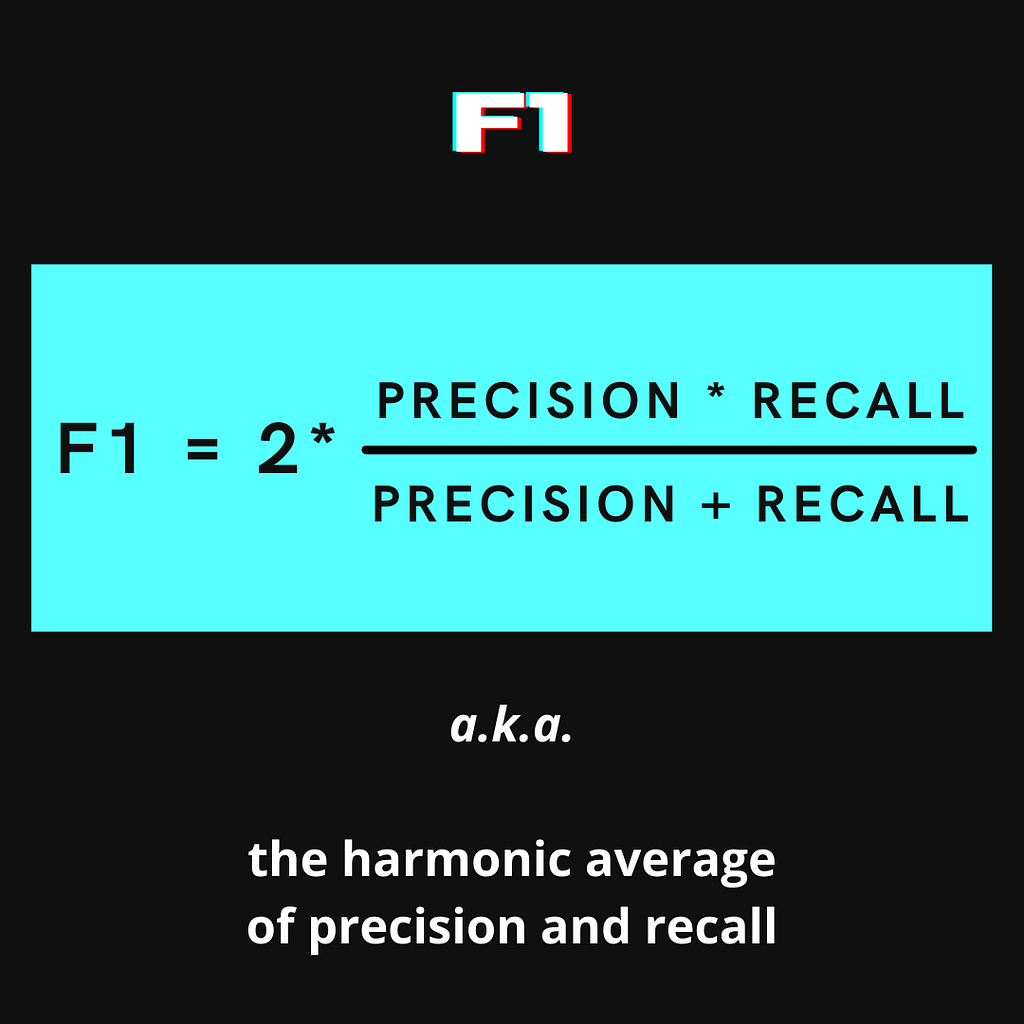

F1

What if both Recall and Precision are important? This is where the F1 score comes in! It is the harmonic mean of Recall and Precision, so it balances the two and penalizes a large gap between them. Here’s the formula: F1 = 2 × (Precision × Recall) / (Precision + Recall).

So a model with perfect Recall and Precision would get an F1 score of 1. However, a model with perfect Recall or Precision and a score of 0 in the other would get an F1 score of 0. The model described earlier in this post that guessed all credit card transactions are not fraudulent would be 99% accurate, yet it catches no fraud: its Recall is 0, so its F1 score for the fraud class is 0. It scores roughly 50% only if F1 is averaged over both the fraud and the legit class (a macro average).
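The all-legit model can be checked numerically. This sketch assumes 1,000 made-up transactions with 10 fraudulent, and treats undefined ratios (division by zero) as 0, which is a common convention rather than the only option. Under those assumptions the fraud-class F1 is 0, and a figure near 50% appears only when F1 is macro-averaged over both classes; that reading of the 50% number is an assumption.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2 * P * R / (P + R); undefined ratios are treated as 0 (assumption)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Hypothetical: 1,000 transactions, 10 fraudulent; the model predicts "legit" for all.
f1_fraud = f1_score(tp=0, fp=0, fn=10)    # fraud treated as the positive class
f1_legit = f1_score(tp=990, fp=10, fn=0)  # legit treated as the positive class
macro_f1 = (f1_fraud + f1_legit) / 2      # unweighted average over both classes

print(f"F1 (fraud class): {f1_fraud:.3f}")  # F1 (fraud class): 0.000
print(f"F1 (legit class): {f1_legit:.3f}")  # F1 (legit class): 0.995
print(f"macro F1: {macro_f1:.3f}")          # macro F1: 0.497
```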

Conclusion

There are so many ways to measure how well your machine learning model is doing beyond accuracy. I hope these (admittedly intricate) Matrix movie analogies contribute to your intuitive understanding of how scores like precision, recall, and F1 work and when it would be beneficial to use them.

P.S.

How About Medical Diagnostic Tests (Like Covid Tests)?

Great question! It’s one that I asked myself as I wrote this addendum to the original post. Medical tests use slightly different terms for metrics, namely Sensitivity and Specificity. Sensitivity is the same as Recall as described above, TP / (TP + FN): how good is a test at detecting the positives? Specificity, though similar to Precision in that it suffers when there are false positives, has a new formula: TN / (TN + FP). Simply said: of all the people who are actually negative, how many does the test correctly clear without raising a false alarm?
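Both quantities can be sketched from the same four counts. The numbers below are invented for illustration and do not describe any real diagnostic test.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Sensitivity (same as Recall) = TP / (TP + FN)."""
    return tp / (tp + fn) if (tp + fn) else 0.0

def specificity(tn: int, fp: int) -> float:
    """Specificity = TN / (TN + FP)."""
    return tn / (tn + fp) if (tn + fp) else 0.0

# Hypothetical test results: 100 infected people, 1,000 healthy people.
tp, fn = 90, 10    # infected: 90 detected, 10 missed
tn, fp = 950, 50   # healthy: 950 cleared, 50 false alarms

print(sensitivity(tp, fn))  # 0.9
print(specificity(tn, fp))  # 0.95
```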

So I’ll throw this one to you — what’s more important for a Covid test, sensitivity or specificity?

Metrics in the Matrix was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.


Shawn John Samuel | Sciencx (2022-01-06T14:47:30+00:00) Metrics in the Matrix. Retrieved from https://www.scien.cx/2022/01/06/metrics-in-the-matrix/
