This content originally appeared on Level Up Coding - Medium and was authored by Imaze Enabulele

Scenario

- Create an EKS cluster with the desired capacity of 2

- Generate a random 5-character string to use when building the cluster name

- Output the cluster name and the IP addresses of the containers in the cluster
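The naming requirement in the scenario can be sketched in Terraform roughly as follows. This is a minimal illustration rather than the author's actual configuration: the resource label `suffix`, the `eks-` prefix, and the local value `cluster_name` are all assumptions made for the example.

```hcl
# Hypothetical sketch: the labels and the "eks-" prefix are assumptions.
resource "random_string" "suffix" {
  length  = 5     # five characters, as the scenario requires
  special = false # keep to alphanumerics so the result is safe in a cluster name
  upper   = false # lowercase only, purely a style choice here
}

locals {
  # Combine a fixed prefix with the random suffix to form the cluster name
  cluster_name = "eks-${random_string.suffix.result}"
}
```

The `random_string` resource comes from the `hashicorp/random` provider, so that provider has to be available to the configuration.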

Definitions

Elastic Kubernetes Service (EKS): Amazon EKS is a managed service that makes it easier to run Kubernetes on AWS. With EKS, organizations can run Kubernetes without installing and operating their own Kubernetes control plane or worker nodes. Simply put, EKS is a managed containers-as-a-service (CaaS) offering that greatly simplifies Kubernetes deployment on AWS. See the Amazon EKS documentation.

Cluster: A cluster is made up of a control plane and worker nodes.

EKS control plane: The control plane runs on a dedicated set of EC2 instances in an Amazon-managed AWS account and provides an API endpoint that your applications can access. It runs in single-tenant mode and hosts the Kubernetes control plane components, such as the API server and etcd.

Worker nodes: Kubernetes worker nodes run on EC2 instances in your organization’s AWS account. They connect to the control plane through the API endpoint, using a certificate file. A unique certificate is used for each cluster. See the Amazon EKS documentation.

The configuration files are stored in my GitHub repo.

Step 1: Set up code in configuration files

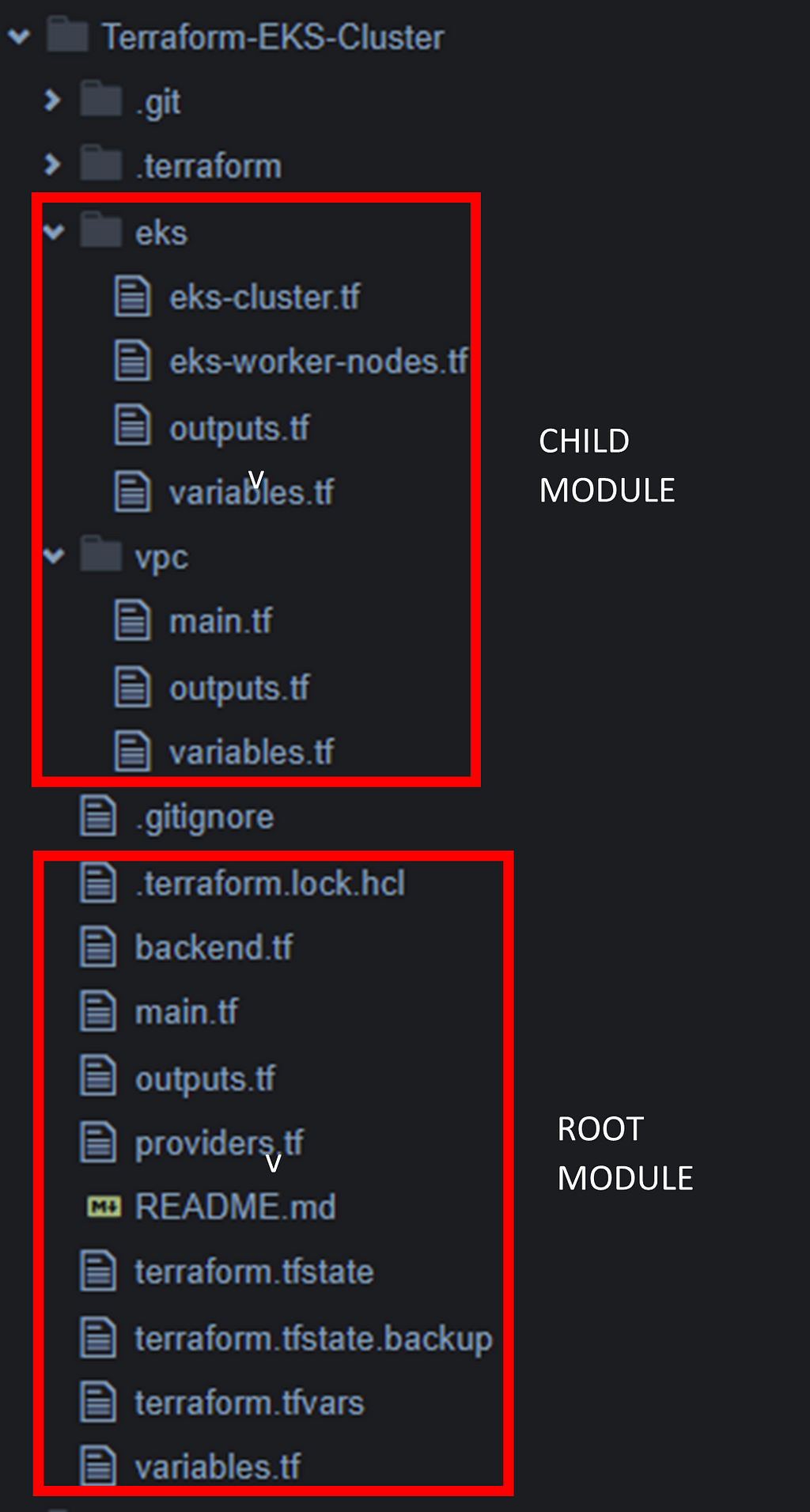

Root Module

The root module contains providers.tf, main.tf, variables.tf, outputs.tf, and the state file, as well as the child modules.
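As a rough idea of what the root module's providers.tf might hold, here is a minimal sketch. The version constraints, the `region` variable, and its default value are assumptions for illustration and are not taken from the author's repository.

```hcl
# providers.tf (hypothetical sketch; versions and region are assumptions)
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.0"
    }
    random = {
      source  = "hashicorp/random"
      version = "~> 3.0"
    }
  }
}

# Would normally live in variables.tf; shown here to keep the sketch complete
variable "region" {
  type    = string
  default = "us-east-1"
}

provider "aws" {
  region = var.region
}
```

When the run happens in Terraform Cloud, the AWS credentials are typically supplied as workspace environment variables (`AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`) rather than written into the provider block.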

Child Module

VPC module: This module contains the configuration files used to launch the VPC, public subnets, route table, internet gateway, and so on. Its outputs.tf file exports values that the root module's main.tf consumes.
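The hand-off from the VPC module to the root module could look something like the fragment below. The module paths, the output name `public_subnet_ids`, the `subnet_ids` input, and the `aws_subnet.public` resource (assumed to be declared with `count` in the VPC module's main.tf) are all hypothetical names chosen for this sketch.

```hcl
# modules/vpc/outputs.tf (hypothetical): export the subnet IDs from the child module
output "public_subnet_ids" {
  value = aws_subnet.public[*].id # assumes aws_subnet.public uses count
}

# main.tf in the root module (hypothetical): consume the exported value
module "vpc" {
  source = "./modules/vpc"
}

module "eks" {
  source     = "./modules/eks"
  subnet_ids = module.vpc.public_subnet_ids # value comes from the VPC module's output
}
```

A child module's resources are not visible outside the module, so anything the root module needs from it has to be exposed through an `output` block like this.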

EKS module: This module contains configuration files to deploy an EKS cluster and the EKS worker nodes.
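A minimal sketch of what such an EKS module might contain is shown below. It is not the author's code: the variable names, resource labels, and the `min_size` and `max_size` values are assumptions. Only the desired capacity of 2 comes from the scenario. The IAM roles are assumed to be created elsewhere and passed in by ARN.

```hcl
# modules/eks/main.tf (hypothetical sketch)
variable "cluster_name" { type = string }
variable "subnet_ids" { type = list(string) }
variable "cluster_role_arn" { type = string } # IAM role assumed by the EKS control plane
variable "node_role_arn" { type = string }    # IAM role assumed by the worker nodes

resource "aws_eks_cluster" "this" {
  name     = var.cluster_name
  role_arn = var.cluster_role_arn

  vpc_config {
    subnet_ids = var.subnet_ids
  }
}

resource "aws_eks_node_group" "workers" {
  cluster_name    = aws_eks_cluster.this.name
  node_group_name = "${var.cluster_name}-workers"
  node_role_arn   = var.node_role_arn
  subnet_ids      = var.subnet_ids

  scaling_config {
    desired_size = 2 # desired capacity from the scenario
    min_size     = 1 # assumption
    max_size     = 3 # assumption
  }
}
```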

Step 2: Initiate command Workflow

We’ll navigate to Terraform Cloud to build the infrastructure. In my previous project, I showed how to connect your GitHub account to Terraform Cloud via a VCS provider, but I selected only the repository I needed for that project. Below, I’ll show how to pull in all the repositories in your GitHub account.

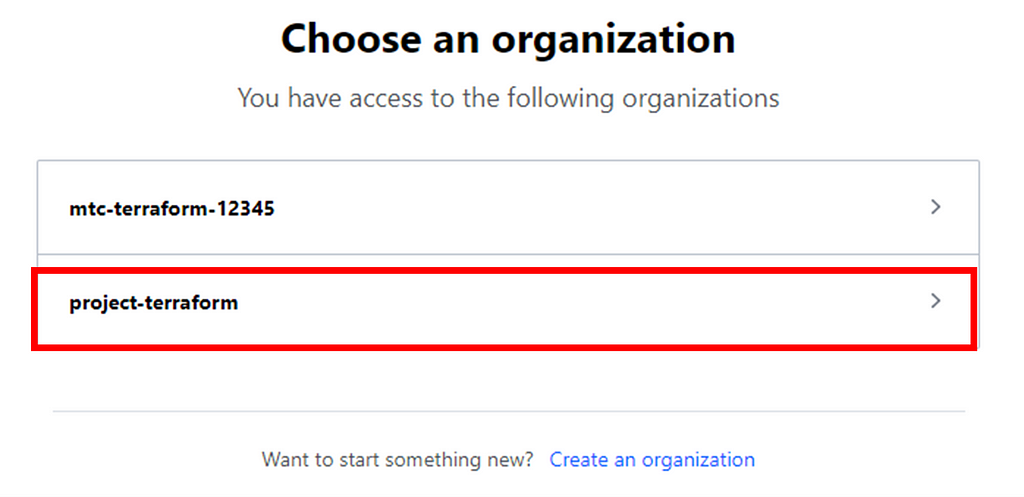

Let’s begin. Log in to Terraform Cloud and create an organization. I’ll be using the organization I created previously, project-terraform.

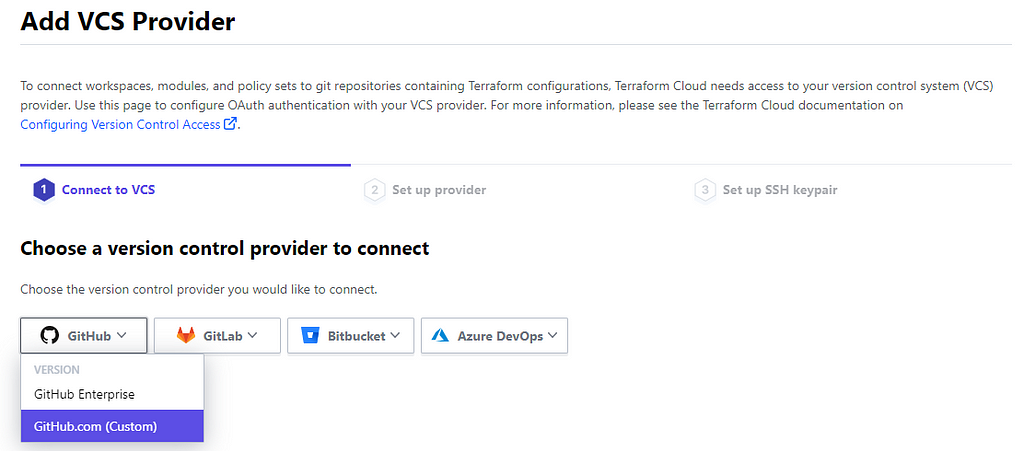

Step 3: Choose a VCS Provider

Terraform Cloud becomes a more powerful tool when integrated with a version control system (VCS) provider. With workspaces linked to a repository, Terraform Cloud can automatically initiate runs when code changes are committed and pushed to the specified branches.

Go to Settings > Providers > Add a VCS provider.

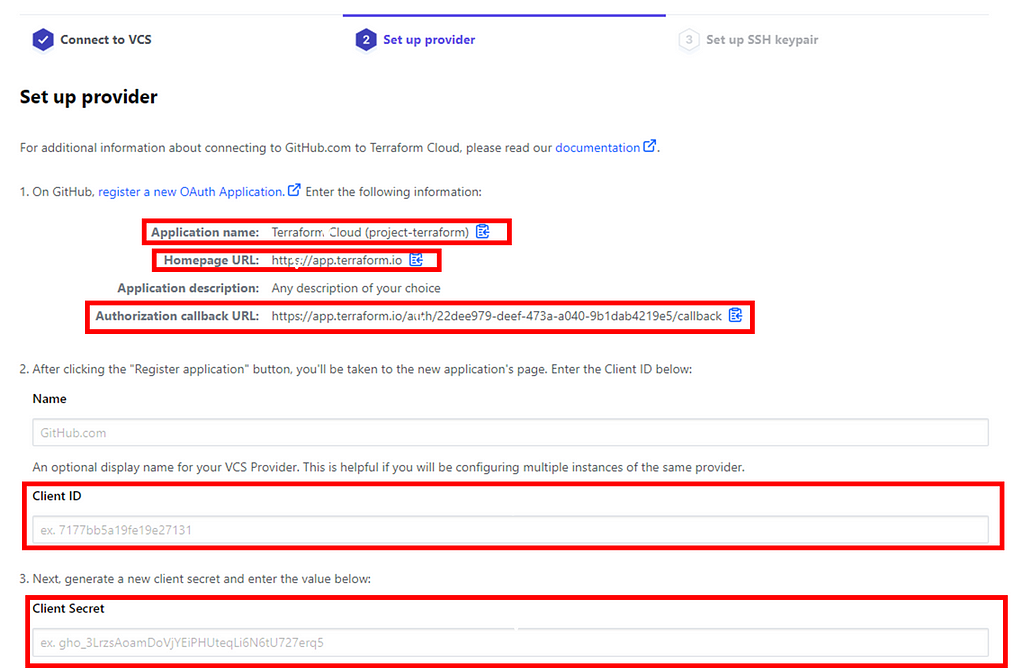

We’ll choose GitHub.com, which takes us to the provider setup page.

Click the link to register a new OAuth application; it opens in your GitHub account.

Copy the information from Terraform Cloud, paste it into the GitHub form, and click Register application.

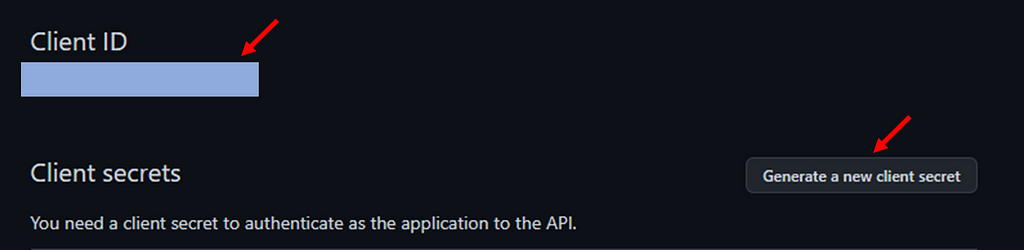

Now copy the client ID and generate a new client secret. Navigate back to the provider setup page and paste both values into the required fields. Click Connect and continue, then authorize Terraform Cloud to connect to your repositories.

Now that we have that sorted out, we’ll create a workspace and choose the Version Control workflow. Select the version control provider you just connected.

Select your repository and create the workspace.

With that set up, we’ll make changes to our code and push them to the GitHub repo.

Step 4: Trigger a CI/CD pipeline

The first step is to trigger a plan. Commit the changes to your configuration files and push them to your branch in the GitHub repository. When that branch is merged into the main branch, a plan is automatically queued in Terraform Cloud.

Once the plan finishes, we can confirm it and apply it to deploy the infrastructure.

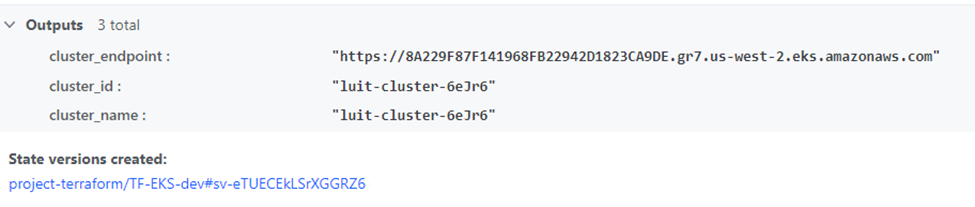

Now that the deployment is finished, let’s check the outputs

NOTE: Because the task is to output the cluster name and the IP addresses of the containers, the output blocks MUST be defined in the root module's outputs.tf file; otherwise they will not be printed.
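Terraform only displays outputs declared in the root module, so values produced inside a child module have to be re-exported there. A hedged sketch of that pattern follows. The module name `eks` and the child outputs `cluster_name` and `node_ips` are hypothetical and assume the EKS module declares outputs with those names.

```hcl
# outputs.tf in the root module (hypothetical sketch)
output "cluster_name" {
  description = "Name of the EKS cluster"
  value       = module.eks.cluster_name # assumes the child module exports cluster_name
}

output "node_ips" {
  description = "IP addresses exposed by the EKS module"
  value       = module.eks.node_ips # assumes the child module exports node_ips
}
```

After a successful apply, these values show up in the outputs of the Terraform Cloud run.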

Great. It worked just fine.

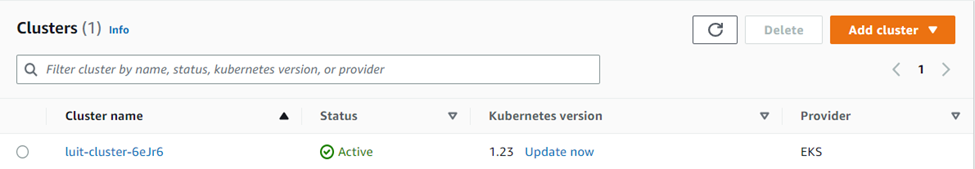

Now let’s take a look at our AWS console to see what was deployed

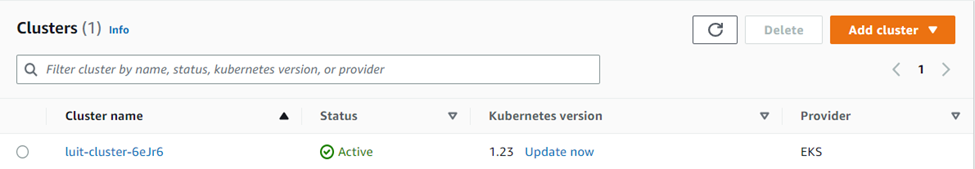

Cluster

Node groups

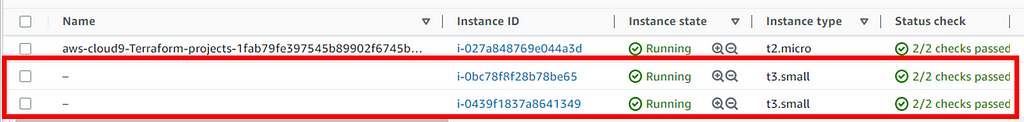

Worker nodes (Instances)

Thank you for reading.



Imaze Enabulele | Sciencx (2022-11-28T00:36:36+00:00) Deploying an EKS Cluster with Terraform Cloud. Retrieved from https://www.scien.cx/2022/11/28/deploying-an-eks-cluster-with-terraform-cloud/
