This content originally appeared on Level Up Coding - Medium and was authored by Alexander

Are you tired of getting rickrolled constantly? If so, facial recognition may be the solution you are looking for! In this article, I will explain and demonstrate how I used facial recognition to detect rickrolls before watching the video.

The Strategy

Although audio fingerprinting is the more intuitive way to detect rickrolls, I think facial recognition is both more interesting and more reliable, because:

- There can be rickrolling videos without sound

- The audio can be distorted (sped up, pitch change, or just low quality)

- There cannot be a rickroll without Rick’s face

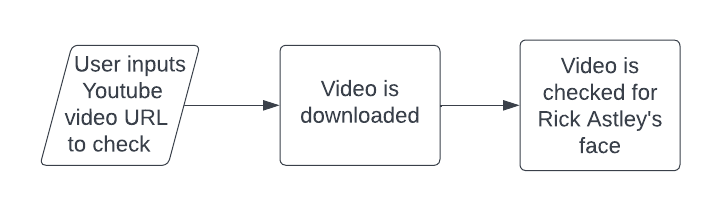

The image above is an overly simplified flow chart of this process.

We are going to use the face-recognition Python package, which is built on dlib's deep learning face recognition. It locates landmarks on each face and uses them to compute a 128-dimensional encoding of that face. Two faces are considered a match when the distance between their encodings is below a tolerance.
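To make the comparison step concrete, here is a minimal, library-free sketch of the idea behind face_recognition.compare_faces. Each face is reduced to a list of 128 numbers, and two encodings count as the same person when their Euclidean distance is at most a tolerance (the library's default is 0.6). The compare_encodings function and the toy vectors are hypothetical; real encodings come from face_recognition.face_encodings.

```python
import math

def compare_encodings(known_encodings, candidate, tolerance=0.6):
    """Hypothetical helper: return one boolean per known encoding, True when
    the Euclidean distance to `candidate` is at most `tolerance`."""
    return [math.dist(known, candidate) <= tolerance for known in known_encodings]

# Toy 128-dimensional "encodings" (real ones come from face_recognition)
rick = [0.0] * 128
similar = [0.05] * 128    # distance to rick is about 0.57
different = [0.1] * 128   # distance to rick is about 1.13

print(compare_encodings([rick], similar))    # [True]
print(compare_encodings([rick], different))  # [False]
```

Like compare_faces, the sketch returns a list with one result per known face, which matters later when the result is checked. The library also exposes face_recognition.face_distance if you want the raw distances.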

The Programming

Imports

First and foremost, we need to import our packages. The three main packages we will be using are face-recognition, opencv-python, and yt-dlp (plus termcolor for colored output). I chose yt-dlp over the original youtube-dl because I found it much faster at downloading videos.

```python
# import packages
import sys  # to read the video URL from the command line
import tempfile  # to find the system's temp directory for the downloaded video
import uuid  # to create a unique name for the temp folder

import cv2  # to read the video
import face_recognition  # to detect Rick's face
from termcolor import colored  # to add some spice to the program
from yt_dlp import YoutubeDL  # to download the video
```

Download the video

Now we need to download the YouTube video the user wants to check. In this scenario, the URL is passed as the first command-line argument. So let's read the URL and set a couple of other variables: the temporary folder location and the path we will save the video to.

```python
# get options & set variables
youtube_url = sys.argv[1]  # the video URL the user wants to check
temp_folder = f"{tempfile.gettempdir()}/{uuid.uuid1()}"  # the location of the temp folder
video_location = f"{temp_folder}/output.mp4"  # the location the video will be saved to
```
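As written, running the script without a URL fails with an IndexError. A slightly more defensive sketch checks the argument first and lets tempfile.mkdtemp() create a uniquely named folder, which replaces the manual uuid naming. It also uses os.path.join so the paths work on Windows. get_paths is a hypothetical helper, not part of the original script.

```python
import os
import sys
import tempfile

def get_paths(argv):
    """Hypothetical helper: validate the CLI argument and create a unique temp folder."""
    if len(argv) < 2:
        # exit with a usage message instead of an IndexError
        sys.exit(f"usage: python3 {argv[0]} <youtube-url>")
    youtube_url = argv[1]
    # mkdtemp creates a new, uniquely named directory and returns its path
    temp_folder = tempfile.mkdtemp(prefix="rickroll-")
    video_location = os.path.join(temp_folder, "output.mp4")
    return youtube_url, temp_folder, video_location
```

In the script, this would be called as `youtube_url, temp_folder, video_location = get_paths(sys.argv)`.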

Next, we create a simple logger that silences yt-dlp's progress output and only prints errors.

```python
# create logger for video downloader
class Logger:
    def debug(self, msg):
        pass  # ignore debug and info messages

    def warning(self, msg):
        pass  # ignore warnings

    def error(self, msg):
        print(msg)  # only show errors
```
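yt-dlp's logger option only needs an object with debug, warning, and error methods. As an alternative to the custom class, a standard-library logging.Logger set to the ERROR level should behave similarly. This is a sketch, not the author's approach, and the logger name is arbitrary. One difference: unlike print(), logging.StreamHandler writes to stderr by default.

```python
import logging

# Alternative to the custom Logger class: a standard-library logger that
# drops debug and warning messages and only prints errors.
ydl_logger = logging.getLogger("rickroll_detector.yt_dlp")  # name is arbitrary
ydl_logger.setLevel(logging.ERROR)  # ignore anything below ERROR
ydl_logger.addHandler(logging.StreamHandler())  # write errors to stderr
ydl_logger.propagate = False  # don't also send records to the root logger

# It would be passed to yt-dlp the same way as before:
ydl_options = {"logger": ydl_logger}
```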

Finally, we download the video with yt-dlp. The output has to be an MP4 file, because that is the filename we set in the video_location variable. Note that merging the separate video and audio streams requires ffmpeg to be installed.

```python
# download video
with YoutubeDL({
    "outtmpl": f"{temp_folder}/output.%(ext)s",  # save to the temp folder as output.<ext>
    "format": "bestvideo[ext=mp4]+bestaudio[ext=m4a]/mp4",  # prefer mp4 video + m4a audio, else a single mp4
    "merge_output_format": "mp4",  # merged streams also end up as .mp4
    "logger": Logger(),  # pipe output to logger
}) as ydl:
    ydl.download([youtube_url])

print(colored("Video downloaded.", "green"))
```

Facial Recognition

If you weren't having fun so far, I'm sure you will be now! First, we need the face encoding from an existing picture of Rick Astley, so we can compare it to the faces found in the video.

```python
# get rick's face encoding ([0] takes the first face found in the image)
rick_face_encodings = [
    face_recognition.face_encodings(face_recognition.load_image_file("./images/rick.jpg"))[0],
]
```

Next, we loop through the video and scan every 10th frame for faces. After some testing, I settled on every 10th frame because checking every single frame wastes time and system resources. If a frame contains a face, we get its encoding and compare it to Rick's.
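A fixed step of 10 covers different amounts of time depending on the frame rate: about a third of a second at 30 fps, but about 0.42 seconds at 24 fps. If you would rather think in checks per second, a hypothetical helper can derive the step from the video's frame rate. OpenCV reports the frame rate through cap.get(cv2.CAP_PROP_FPS), and that call may return 0 when the rate is unknown, which is why the helper falls back to checking every frame.

```python
def frame_step(fps: float, checks_per_second: float = 3.0) -> int:
    """Hypothetical helper: frames between face checks so that roughly
    `checks_per_second` frames are scanned per second of video."""
    return max(1, round(fps / checks_per_second))  # never below 1

print(frame_step(30))  # 10, the same as "every 10th frame"
print(frame_step(60))  # 20
print(frame_step(24))  # 8
```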

Let's start with the capture loop.

```python
cap = cv2.VideoCapture(video_location)  # create video capture
count = 0  # frame count

while True:
    success, frame = cap.read()  # read the next frame
    count += 1  # update frame count (before skipping, so the loop keeps moving)

    if count % 10 != 0:  # only check every 10th frame
        continue

cap.release()  # release the video capture
```

Inside the loop, after the frame-skipping check, we can now find any faces in the frame, get their encodings, and compare them to Rick's. We won't loop through every face in the frame, because the scene we are looking for only has one person in it.

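```python
    # inside the while loop, after the frame-skipping check
    rgb_frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)  # OpenCV frames are BGR; face_recognition expects RGB
    faces = face_recognition.face_encodings(rgb_frame)  # get the encodings of all faces in the frame

    if faces:  # if at least one face is found
        # compare_faces returns one boolean per known encoding, e.g. [False];
        # a non-empty list is always truthy, so check it with any()
        result = face_recognition.compare_faces(rick_face_encodings, faces[0])
        if any(result):  # if the face encodings match
            print(colored("Rick roll detected!", "red"))
            break
```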

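Last but not least, let's tell the user that no rickrolls were detected if the video ends without a match. Put this directly after the cap.read() call, before the frame-skipping check, so the end of the video is caught no matter which frame it falls on.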

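```python
    # inside the while loop, directly after cap.read()
    if not success:  # no more frames: the video has ended
        cap.release()
        print(colored("No rick rolls found!", "green"))
        break
```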
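The real loop depends on a downloaded video and the face_recognition models. Here is a library-free sketch of the same control flow that can be tried on its own. The names scan_for_match, get_encodings, and matches are hypothetical. In the real script, the frames would come from cap.read(), get_encodings would be face_recognition.face_encodings, and matches would wrap face_recognition.compare_faces. The for loop running out of frames plays the role of the `if not success` check.

```python
from typing import Callable, Iterable, List, Sequence

def scan_for_match(
    frames: Iterable[object],
    get_encodings: Callable[[object], Sequence[object]],
    matches: Callable[[object], List[bool]],
    step: int = 10,
) -> bool:
    """Return True as soon as the first face in a sampled frame matches."""
    for count, frame in enumerate(frames, start=1):  # count frames from 1
        if count % step != 0:  # only check every `step`-th frame
            continue
        faces = get_encodings(frame)
        # matches() returns one boolean per known face, e.g. [False];
        # any() is needed because a non-empty list is always truthy
        if faces and any(matches(faces[0])):
            return True
    return False  # ran out of frames without a match

# Toy stand-ins: a "frame" is just a label, and only "rick" matches.
def fake_encodings(frame):
    return [] if frame == "empty" else [frame]

def fake_matches(encoding):
    return [encoding == "rick"]

print(scan_for_match(["empty"] * 9 + ["rick"], fake_encodings, fake_matches))      # True
print(scan_for_match(["empty"] * 9 + ["stranger"], fake_encodings, fake_matches))  # False
```

The second call shows why any() matters. For a stranger, fake_matches returns [False], and `if [False]:` would still take the "detected" branch.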

Now, if you run `python3 main.py "https://www.youtube.com/watch?v=dQw4w9WgXcQ"`, it should output "Rick roll detected!".
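The script never deletes its temporary folder, so every check leaves a downloaded video behind in the system's temp directory. Here is a minimal sketch of one way to clean up, assuming you wrap the download and scanning steps in a function. It uses shutil.rmtree in a finally block so the folder is removed even if the download fails. run_then_clean_up is a hypothetical name. Run the cleanup only after cap.release(), since the video file may still be open otherwise.

```python
import os
import shutil
import tempfile

def run_then_clean_up(temp_folder, work):
    """Hypothetical helper: run `work()`, then delete `temp_folder` even if
    `work()` raised an exception (e.g. a failed download)."""
    try:
        return work()
    finally:
        # removes the folder and everything in it, such as output.mp4
        shutil.rmtree(temp_folder, ignore_errors=True)

# Example with a throwaway folder and a dummy "video" file
folder = tempfile.mkdtemp()
open(os.path.join(folder, "output.mp4"), "wb").close()
result = run_then_clean_up(folder, lambda: "No rick rolls found!")
print(result)                  # No rick rolls found!
print(os.path.exists(folder))  # False
```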

Conclusion

Well done! You just made a rickroll detector using facial recognition. Hopefully, this article taught you something or at least saved you from being rickrolled. You can find the finished code here.


How to Use Facial Recognition and Python to Never Get Rickrolled Again was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.


Alexander | Sciencx (2023-01-09T03:26:50+00:00) How to Use Facial Recognition and Python to Never Get Rickrolled Again. Retrieved from https://www.scien.cx/2023/01/09/how-to-use-facial-recognition-and-python-to-never-get-rickrolled-again/
