This content originally appeared on HackerNoon and was authored by Batching

Table of Links

3 Revisiting Normalization

3.1 Revisiting Euclidean Normalization

4 Riemannian Normalization on Lie Groups

5 LieBN on the Lie Groups of SPD Manifolds and 5.1 Deformed Lie Groups of SPD Manifolds

7 Conclusions, Acknowledgments, and References

APPENDIX CONTENTS

B Basic Layers in SPDNet and TSMNet

C Statistical Results of Scaling in the LieBN

D LieBN as a Natural Generalization of Euclidean BN

E Domain-specific Momentum LieBN for EEG Classification

F Backpropagation of Matrix Functions

G Additional Details and Experiments of LieBN on SPD manifolds

H Preliminary Experiments on Rotation Matrices

I Proofs of the Lemmas and Theories in the Main Paper

A NOTATIONS

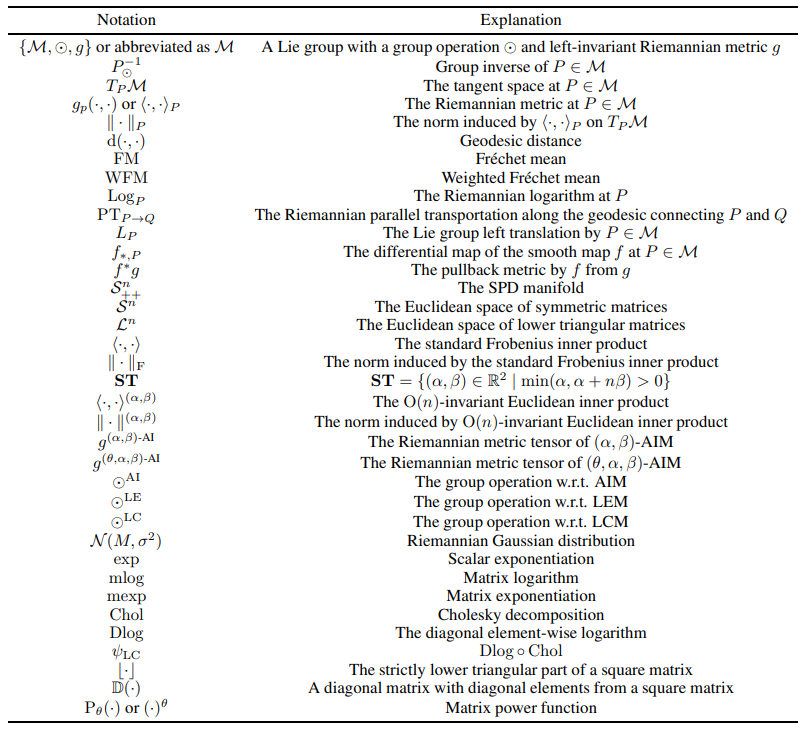

For better clarity, we summarize all the notations used in this paper in Tab. 6.


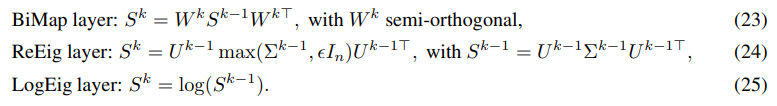

B BASIC LAYERS IN SPDNET AND TSMNET

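SPDNet (Huang & Van Gool, 2017) is the most classic SPD neural network. It mimics a conventional densely connected feedforward network and consists of three basic building blocks:

$$
\begin{aligned}
\text{BiMap:}\quad & S^{k} = W^{k} S^{k-1} W^{k\top}, \\
\text{ReEig:}\quad & S^{k} = U^{k-1} \max\left(\Sigma^{k-1}, \epsilon I\right) U^{k-1\top}, \\
\text{LogEig:}\quad & S^{k} = \log\left(S^{k-1}\right),
\end{aligned}
$$

where $W^{k}$ is a learnable semi-orthogonal matrix, $S^{k-1} = U^{k-1} \Sigma^{k-1} U^{k-1\top}$ is the eigendecomposition of the input, $\epsilon > 0$ is a small rectification threshold, $\log(\cdot)$ is the matrix logarithm, and max(·) is element-wise maximization. BiMap and ReEig mimic transformation and nonlinear activation, respectively, while LogEig maps SPD matrices into the tangent space at the identity matrix for classification.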
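The three SPDNet layers can be sketched in NumPy as follows. This is a minimal illustration rather than the reference SPDNet implementation: the function names `bimap`, `reeig`, and `logeig` are our own, `W` is assumed to have orthonormal rows (obtained here from a QR factorization), the threshold `eps` is an arbitrary illustrative value, and the upper-triangle vectorization at the end is just one common choice for feeding a classifier.

```python
import numpy as np

def bimap(S, W):
    """BiMap: S -> W S W^T. W has shape (d_out, d_in); orthonormal rows assumed."""
    return W @ S @ W.T

def reeig(S, eps=1e-4):
    """ReEig: raise every eigenvalue of S below eps to eps (element-wise max)."""
    eigvals, U = np.linalg.eigh(S)
    # (U * w) scales column j of U by w[j], so this equals U diag(w) U^T.
    return (U * np.maximum(eigvals, eps)) @ U.T

def logeig(S):
    """LogEig: matrix logarithm of an SPD matrix via its eigendecomposition."""
    eigvals, U = np.linalg.eigh(S)
    return (U * np.log(eigvals)) @ U.T

# Toy forward pass: 5x5 SPD input -> BiMap to 3x3 -> ReEig -> LogEig.
rng = np.random.default_rng(0)
A = rng.standard_normal((5, 5))
S0 = A @ A.T + 1e-3 * np.eye(5)                      # random SPD input
W = np.linalg.qr(rng.standard_normal((5, 3)))[0].T   # 3x5 matrix with orthonormal rows
S1 = bimap(S0, W)                                    # 3x3 SPD matrix
S2 = reeig(S1, eps=1e-2)                             # eigenvalues clamped at >= 1e-2
T = logeig(S2)                                       # symmetric matrix (tangent space at I)
features = T[np.triu_indices(3)]                     # 6-dim vector for a classifier
```

Because `W` has orthonormal rows, BiMap keeps the output SPD, so `logeig` is always applied to a matrix with positive eigenvalues.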


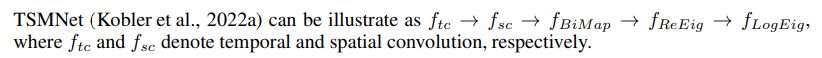

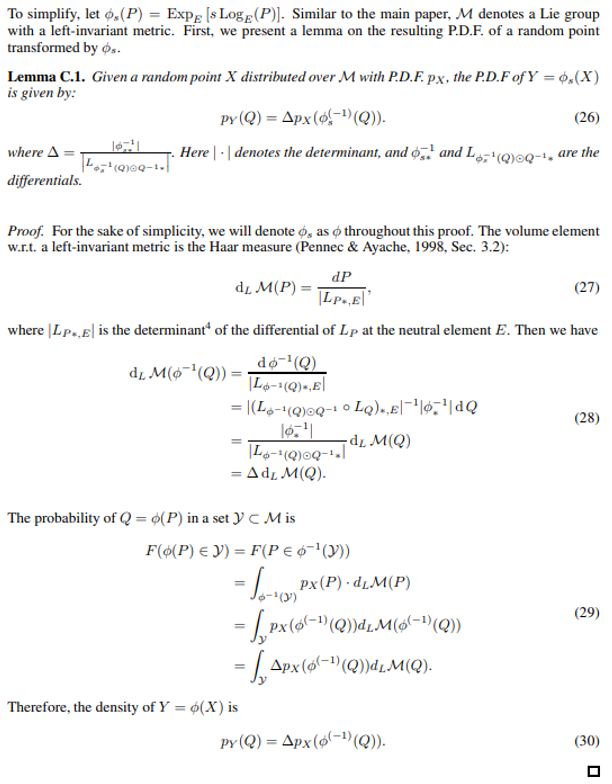

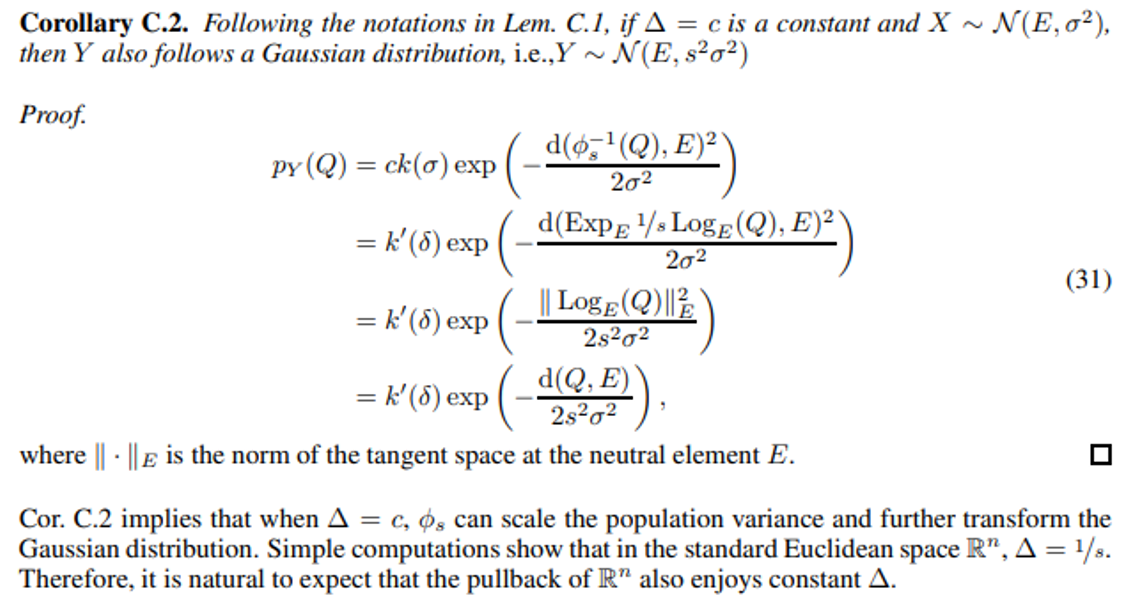

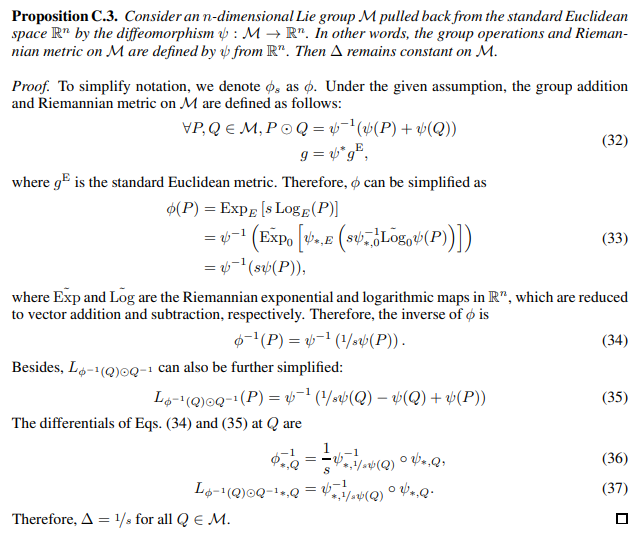

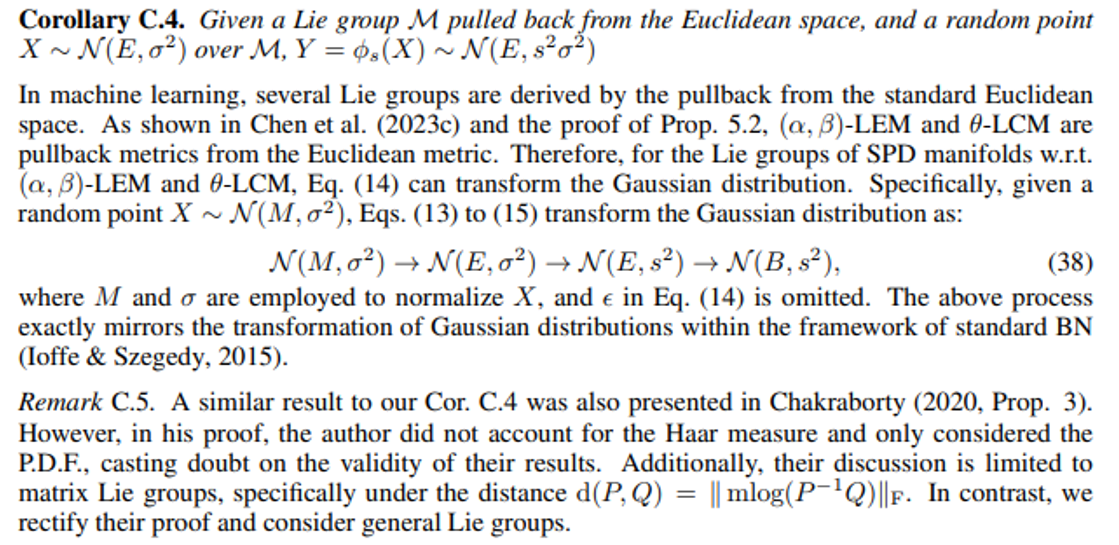

C STATISTICAL RESULTS OF SCALING IN THE LIEBN

In this section, we show the effect of our scaling (Eq. (14)) on the population. Although the resulting population variance is in general unknown, it admits a closed form under certain circumstances, such as SPD manifolds under the Log-Euclidean Metric (LEM) or the Log-Cholesky Metric (LCM). Consequently, in these cases Eq. (14) can normalize and transform the latent Gaussian distribution.
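To make the LEM case concrete, the sketch below assumes that under LEM centering and scaling reduce to Euclidean operations on matrix logarithms: the batch mean is the exponential of the averaged logarithms, the variance is the mean squared Frobenius distance in the log domain, and each output is exp(s / sqrt(v + eps) · (log S_i − log M)). This specialization of Eq. (14) is our assumption, and `lem_scale`, `sym_fn`, `s`, and `eps` are hypothetical names, not the authors' code. Under this assumption the log-domain variance of the output is exactly s² · v / (v + eps), i.e. approximately s².

```python
import numpy as np

def sym_fn(S, fn):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    eigvals, U = np.linalg.eigh(S)
    return (U * fn(eigvals)) @ U.T

def lem_scale(batch, s=1.0, eps=1e-5):
    """Hypothetical LEM centering + scaling of a batch of SPD matrices.

    Works entirely in the log domain, where LEM makes the SPD manifold
    a flat vector space of symmetric matrices.
    """
    logs = np.stack([sym_fn(S, np.log) for S in batch])   # (N, n, n)
    log_mean = logs.mean(axis=0)                            # log of the LEM Frechet mean
    centered = logs - log_mean                              # centering: log S_i - log M
    var = np.mean(np.sum(centered ** 2, axis=(1, 2)))       # LEM Frechet variance
    factor = s / np.sqrt(var + eps)                         # constant scaling factor
    out = np.stack([sym_fn(factor * V, np.exp) for V in centered])
    return out, var

# Usage: a random batch of 64 SPD matrices of size 3x3.
rng = np.random.default_rng(1)

def random_spd(n):
    A = rng.standard_normal((n, n))
    return sym_fn((A + A.T) / 2, np.exp)   # exp of a symmetric matrix is SPD

batch = np.stack([random_spd(3) for _ in range(64)])
out, var = lem_scale(batch, s=2.0)          # out: 64 SPD matrices, log-variance ~ s**2
```

Working in the log domain is what makes the output variance available in closed form here; for metrics without such a flat chart, the same computation would not reduce to Euclidean averaging.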


The above lemma implies that when ∆ is a constant, Y also follows a Gaussian distribution.
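A change-of-variables check illustrates why a constant factor preserves Gaussianity. As a sketch, we assume here that ∆ acts as a nonzero scalar on a Euclidean coordinate (for example, the log-domain coordinate under LEM), with $X \sim \mathcal{N}(\mu, \Sigma)$ in $\mathbb{R}^{d}$ and $Y = \Delta X$; the exact statement of the lemma may differ.

$$
\begin{aligned}
p_Y(y) &= |\Delta|^{-d}\, p_X\!\left(\Delta^{-1} y\right) \\
&= |\Delta|^{-d} (2\pi)^{-d/2} |\Sigma|^{-1/2} \exp\!\left(-\tfrac{1}{2}\left(\Delta^{-1} y - \mu\right)^{\top} \Sigma^{-1} \left(\Delta^{-1} y - \mu\right)\right) \\
&= (2\pi)^{-d/2} \left|\Delta^{2}\Sigma\right|^{-1/2} \exp\!\left(-\tfrac{1}{2}\left(y - \Delta\mu\right)^{\top} \left(\Delta^{2}\Sigma\right)^{-1} \left(y - \Delta\mu\right)\right),
\end{aligned}
$$

so $Y \sim \mathcal{N}(\Delta\mu, \Delta^{2}\Sigma)$. The last step uses $|\Delta^{2}\Sigma|^{1/2} = |\Delta|^{d}|\Sigma|^{1/2}$ and $\Delta^{-1} y - \mu = \Delta^{-1}(y - \Delta\mu)$. The argument relies on ∆ being deterministic and does not carry over as written when ∆ is itself random.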


By Prop. C.3, we can directly obtain the following corollary.


:::info This paper is available on arxiv under CC BY-NC-SA 4.0 DEED license.

:::

:::info Authors:

(1) Ziheng Chen, University of Trento;

(2) Yue Song, University of Trento and a Corresponding author;

(3) Yunmei Liu, University of Louisville;

(4) Nicu Sebe, University of Trento.

:::


Batching | Sciencx (2025-02-27T03:15:33+00:00) Basic Layers In SPDNET, TSMNET, and Statistical Results of Scaling in the LieBN. Retrieved from https://www.scien.cx/2025/02/27/basic-layers-in-spdnet-tsmnet-and-statistical-results-of-scaling-in-the-liebn/
