This content originally appeared on Level Up Coding - Medium and was authored by Stefan Pietrusky

A tool for individual exchange with PDF files and websites

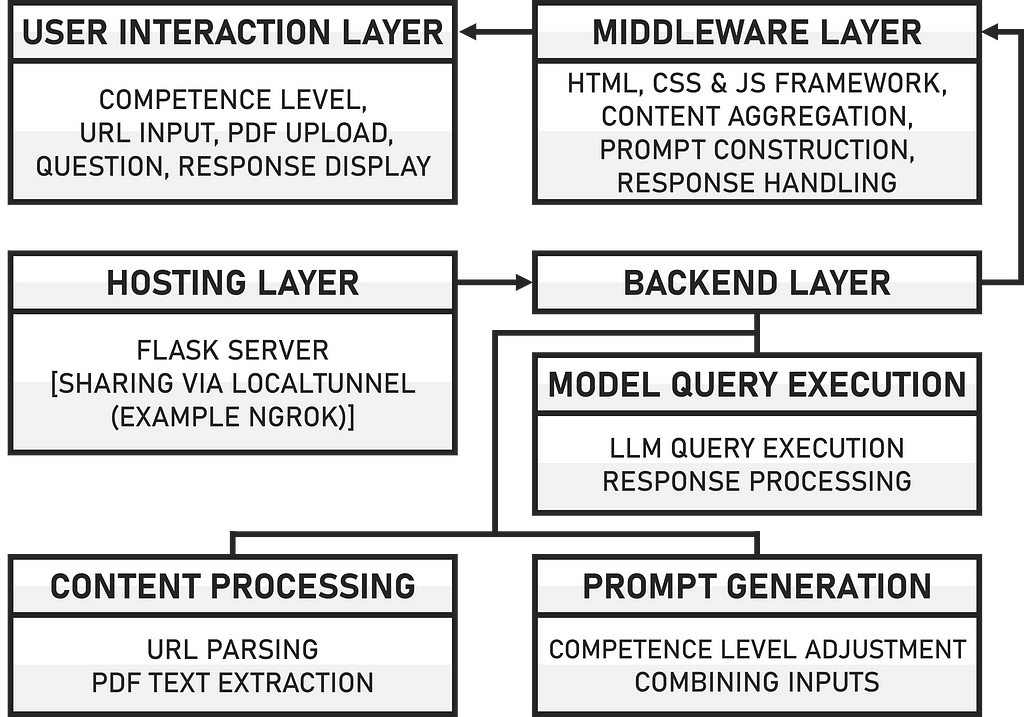

This article is about setting up the IEC V1.5 application that can be executed locally. The interface of the application is not created using a framework such as Gradio or Streamlit, but via HTML, CSS and JS. The advantage of this is that the design of the application can be customized more quickly. In IEC, users can implement PDF files and/or URLs as a source. The text extracted from the sources can be viewed or hidden. The questions are only answered on the basis of the sources used, which means that the chatbot is not restricted by its limited knowledge database, starts hallucinating in the event of ignorance or does not provide an answer at all for current topics. The level of the answer can also be set individually. The basic structure is shown in the following illustration.

This function was rated very positively in an initial IEC trial [1]. Communication between the application and LLM works via CLI and Ollama. The model currently used is Llama3.1 (8b) from Meta. However, other models can also be used via the model management system or, depending on the available computing power, models with more parameters. As the installation of Ollama and an LLM or SLM has already been described in various articles [2], it will not be discussed again here. The functionality of the code is briefly described below. The application can be installed via the GitHub repository linked below and currently looks as follows.

REQUIREMENTS

Flask and other libraries (e.g. “requests” to retrieve content from the web, “fitz” to extract text from PDF files, etc.) are imported to start the web application. For the tokenization of the texts for the LLM, “tiktoken” a tokenizer from OpenAI (GPT-2) is used.

from flask import Flask, request, jsonify, Response

import os

import subprocess

import re

import requests

from readability import Document

import fitz

from bs4 import BeautifulSoup

import tiktoken

app = Flask(__name__)

os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE"

TOKENIZATION AND HELP FUNCTIONS

This is followed by tokenization and text splitting. The maximum input length of the Llama3.1 model from Meta is 131072 tokens (llama.context_length: 131072 [3]). Depending on which model is used, this value must be adjusted. The extracted text is converted into tokens “tokenize-text(text)” and then back to text “detokenize_text(tokens)”. If the text exceeds the token limit, it is split into several blocks to be processed by the LLM.

TOKEN_LIMIT = 131072

enc = tiktoken.get_encoding("gpt2")

def tokenize_text(text):

return enc.encode(text)

def detokenize_text(tokens):

return enc.decode(tokens)

def split_text_into_blocks(text, max_tokens):

tokens = tokenize_text(text)

for i in range(0, len(tokens), max_tokens):

yield detokenize_text(tokens[i : i + max_tokens])

FUNCTIONS FOR EXTRACTING CONTENT

The next step is the extraction of content. The content of the website is retrieved from the HTML content of the website using “requests.get(url)”. HTML tags are removed by the “BeautifulSoup” library so that only the plain text is transmitted. The uploaded PDF file is read in as a stream. The file is opened by “fitz.open(stream=pdf_data, filetype=”pdf”)” directly from memory and extracted page by page “.get_text()”. Several spaces are removed by “re.sub()”. The uploaded media (URL/PDF files) and the content extracted from them are combined into a single string “all_content.strip()”.

def get_readable_content(url):

try:

response = requests.get(url, timeout=30)

response.raise_for_status()

doc = Document(response.text)

readable_html = doc.summary()

soup = BeautifulSoup(readable_html, 'html.parser')

readable_text = soup.get_text()

return re.sub(r'\s+', ' ', readable_text).strip()

except requests.exceptions.RequestException as e:

return f"Error fetching content: {str(e)}"

def extract_text_from_pdf(file):

text = ""

pdf_data = file.read()

try:

with fitz.open(stream=pdf_data, filetype="pdf") as pdf:

for page in pdf:

text += page.get_text()

except Exception as e:

return f"Error reading PDF: {str(e)}"

cleaned_text = re.sub(r'\s*\n\s*', ' ', text)

cleaned_text = re.sub(r'\s{2,}', ' ', cleaned_text)

return cleaned_text.strip()

def extract_content(url_input, pdf_files):

all_content = ""

if url_input:

urls = [url.strip() for url in url_input.split(",")]

for url in urls:

if url.startswith("http"):

all_content += get_readable_content(url) + "\n"

if pdf_files:

for pdf_file in pdf_files:

pdf_text = extract_text_from_pdf(pdf_file)

all_content += pdf_text + "\n"

return all_content.strip() or "No content extracted from the provided inputs."

FUNCTIONS FOR ANSWERING QUESTIONS

The user’s question is sent to the locally installed LLM via “subprocess.run()”. The model currently has 60 seconds “timeout=60” for the answer. If the LLM takes too long, an error is output.

def query_llama_via_cli(input_text):

try:

result = subprocess.run(

["ollama", "run", "llama3.1"],

input=f"{input_text}\n",

stdout=subprocess.PIPE,

stderr=subprocess.PIPE,

text=True,

encoding='utf-8',

timeout=60

)

if result.returncode != 0:

return f"Error in the model request: {result.stderr.strip()}"

response = re.sub(r'\x1b\[.*?m', '', result.stdout)

return response.strip()

except subprocess.TimeoutExpired:

return "The model request timed out. Please try again."

except Exception as e:

return f"An unexpected error has occurred: {str(e)}"

As already mentioned, the individual definition of the competence level of the answer was very well received during the first test [1]. Depending on the level selected by the user, the model’s answer is simplified or more detailed. Such a function is not included in many commercial applications. If you want a “simpler” answer, the question must be formulated accordingly. With IEC, the answer to the same question can be quickly and easily adjusted in terms of level. The extracted content is transmitted in blocks, and the answer is generated and assembled block by block.

def generate_responses_from_blocks(competence_level, extracted_content, user_question):

if not competence_level or not user_question.strip():

return "Please select a competence level and enter a question."

if not extracted_content.strip():

return "No content extracted on which the answer could be based."

if competence_level == "Beginner":

style_instruction = (

"Respond in very simple words, avoid technical terms, and keep the answer short and concise. "

"Explain the topic in a way that even laypersons can understand."

)

elif competence_level == "Intermediate":

style_instruction = (

"Provide a clear and detailed response, occasionally using technical terms and offering a balanced explanation. "

"Explain the topic so that the reader gains a good understanding without it becoming too technical."

)

elif competence_level == "Advanced":

style_instruction = (

"Provide a highly detailed and technical response, use specialized terminology, and offer a comprehensive analysis. "

"Explain the topic at a high, academic level."

)

else:

style_instruction = "Respond based on the provided information."

base_prompt = (

f"System Instruction: {style_instruction}\n\n"

"Note: The following information serves only as a reference. Please phrase the answer in your own words and consider the desired style.\n\n"

"Provided Information (Excerpt):\n"

)

question_prompt = f"\n\nQuestion: {user_question}\n\nAnswer:"

prompt_prefix_tokens = len(tokenize_text(base_prompt)) + len(tokenize_text(question_prompt))

max_tokens_for_block = TOKEN_LIMIT - prompt_prefix_tokens

responses = []

for block in split_text_into_blocks(extracted_content, max_tokens_for_block):

input_text = base_prompt + block + question_prompt

response = query_llama_via_cli(input_text)

responses.append(response)

return "\n".join(responses)

Here is an example. To the question: What this article is about? (article), the IEC provides the following answer depending on the level:

Beginner answer:

This article appears to be a collection of references and information related to named entity recognition (NER), which is a subtask of natural language processing (NLP) that involves identifying and categorizing entities mentioned in text into predefined categories such as names, locations, organizations, etc. The article cites various papers, articles, and workshops related to NER, including those focused on Twitter-specific challenges and noisy user-generated text. It also touches on topics like domain adaptation, crowdsourcing for gold standard development, and graph-based semi-supervised learning models. Overall, the purpose of this article seems to be to provide a comprehensive overview of the state-of-the-art in named entity recognition research, with a focus on Twitter-specific challenges and noisy user-generated text.

Intermediate answer:

This article is about Named Entity Recognition (NER), a subtask of natural language processing that involves identifying and categorizing named entities in text into predefined categories such as names of people, organizations, locations, etc. The article provides an overview of the NER task, its applications, challenges, and various techniques used to address them, including machine learning-based approaches, rule-based systems, and hybrid models. It also discusses the importance of high-quality gold standard development in clinical NLP and the use of crowdsourcing for this purpose. Additionally, the article touches on the topic of linking named entities to external knowledge resources such as Wikipedia. Overall, it provides a comprehensive review of the NER task and its related research areas.

Advanced answer:

This article appears to be a comprehensive review of the topic of named entity recognition (NER), which is a subtask in natural language processing that involves identifying and categorizing entities such as names, locations, organizations, dates, times, quantities, and monetary values mentioned in unstructured text.

The article covers various aspects of NER, including:

- The importance and applications of NER

- Overview of different machine learning approaches to NER, including conditional random fields (CRFs), support vector machines (SVMs), and deep neural networks

- Discussion of domain adaptation techniques for improving NER performance on noisy or unseen data

- Review of various datasets and evaluation metrics used in NER tasks

- Presentation of different methods for handling named entity linking and disambiguation

Overall, the article provides a thorough overview of the field of NER and its current state-of-the-art approaches.

DESIGN, STRUCTURE AND INTERACTIVITY

The design (CSS_CONTENT), structure (HTML_CONTENT) and interactivity (JS_CONTENT) of the IEC are defined via the corresponding areas and can be customized. CSS, HTML and JS have been combined in one script to avoid having to switch back and forth between different files. Depending on personal preference, the parts can also be separated. Here are a few examples of how the application can be modified by changing the CSS, HTML or JS code. The spinner that is displayed when processing input can be customized using the CSS rule “.spinner”.

.spinner {

border: 8px solid #f3f3f3;

border-top: 8px solid #3498db;

border-radius: 50%;

width: 50px;

height: 50px;

animation: spin 1s linear infinite;

margin: 20px auto;

}

@keyframes spin {

0% { transform: rotate(0deg); }

100% { transform: rotate(360deg); }

}The model’s answer to the user’s question is displayed letter by letter using a typewriter effect. The “speed” can be adjusted using the corresponding function.

function typeWriterHTML(html, element, speed = 5) {

let i = 0;

let isTag = false;

let output = "";

function type() {

if (i < html.length) {

let char = html[i];

output += char;

if (char === "<") {

isTag = true;

}

if (char === ">") {

isTag = false;

}

element.innerHTML = output;

i++;

setTimeout(type, isTag ? 0 : speed);

}

}

type();

}The names of the buttons for the different competence levels can be customized via HTML.

<div class="button-group" id="competence-group">

<button class="competence-button" data-level="Beginner">Beginner</button>

<button class="competence-button selected" data-level="Intermediate">Intermediate</button>

<button class="competence-button" data-level="Advanced">Advanced</button>

</div>

FLASK API-Endpoints

Finally, the Flask API endpoints are defined, which form the core functionalities of the app. Specifically, the user interface, processing of content and generation of answers to user questions.

@app.route('/')

def index():

return Response(HTML_CONTENT, mimetype='text/html')

@app.route('/styles.css')

def styles():

return Response(CSS_CONTENT, mimetype='text/css')

@app.route('/script.js')

def script():

return Response(JS_CONTENT, mimetype='application/javascript')

@app.route('/extract_content', methods=['POST'])

def extract_content_endpoint():

if request.content_type.startswith('multipart/form-data'):

url_input = request.form.get("urls", "")

pdf_files = request.files.getlist("pdfs")

else:

data = request.get_json()

url_input = data.get("urls", "")

pdf_files = []

extracted = extract_content(url_input, pdf_files)

return jsonify({"content": extracted or "Kein Inhalt extrahiert."})

@app.route('/ask_question', methods=['POST'])

def ask_question():

data = request.get_json()

competence_level = data.get("competence_level")

extracted_content = data.get("content", "")

user_question = data.get("question", "")

if not competence_level or not user_question.strip():

return jsonify({"response": "Please select a competence level and enter a question."})

if not extracted_content.strip():

return jsonify({"response": "No extracted content available."})

response = generate_response_from_extracted_content(competence_level, extracted_content, "", None, user_question)

return jsonify({"response": response})

@app.route('/clear_extracted', methods=['POST'])

def clear_extracted():

return jsonify({"content": ""})

if __name__ == '__main__':

app.run(debug=True, host="0.0.0.0", port=5000) APPLICATION POSSIBILITIES

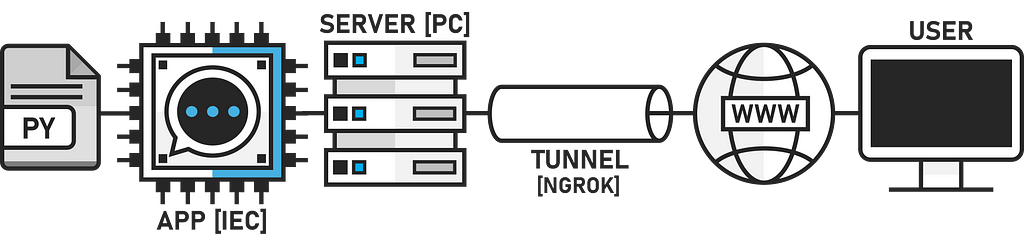

The IEC can be used in the private sphere to ask questions about the content of PDF files or websites, but also in educational institutions to provide individual support to learners. Specifically, by installing the IEC directly on the end devices, sharing it with others via the local network or making it available via the Internet using a tunneling tool (ngrok).

The only thing that needs to be ensured here is that the PC from which the application is made available to the learners acts as a server. If the computing capacity of the PC is too low, the requests cannot be processed effectively. The application currently contains three different levels. These can be expanded together with the learners if necessary.

IEC V1.5 PYTHON SCRIPT [GITHUB]

The application can be installed via the following GitHub repository.

CONCLUSION

This article showed how to create an interactive chatbot using HTML, CSS and JS without using well-known frameworks for web interfaces such as Dash, Streamlit, Gradio, Voila etc. Even if the LLMs used via Ollama are closed, the models can use their NLP function and corresponding content (PDF files and/or URLs) to provide information and thus enable an exchange on current topics.

[1] Pietrusky, S. (2024). Promoting AI Literacy in Higher Education: Evaluating the IEC-V1 Chatbot for Personalized Learning and Educational Equity. ARXIV CS.CY Link

[2] Pietrusky, S. (2024). How to talk to a PDF file without using proprietary models: CLI + Streamlit + Ollama. Towards Data Science. Link

[3] https://ollama.com/library/llama3.1/blobs/667b0c1932bc

IEC V1.5: Individual Educational Chatbot. was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.

This content originally appeared on Level Up Coding - Medium and was authored by Stefan Pietrusky

Stefan Pietrusky | Sciencx (2025-03-05T02:17:25+00:00) IEC V1.5: Individual Educational Chatbot.. Retrieved from https://www.scien.cx/2025/03/05/iec-v1-5-individual-educational-chatbot/

Please log in to upload a file.

There are no updates yet.

Click the Upload button above to add an update.