This content originally appeared on HackerNoon and was authored by Image Recognition

Table of Links

2 MindEye2 and 2.1 Shared-Subject Functional Alignment

2.2 Backbone, Diffusion Prior, & Submodules

2.3 Image Captioning and 2.4 Fine-tuning Stable Diffusion XL for unCLIP

3 Results and 3.1 fMRI-to-Image Reconstruction

3.3 Image/Brain Retrieval and 3.4 Brain Correlation

6 Acknowledgements and References

A Appendix

A.2 Additional Dataset Information

A.3 MindEye2 (not pretrained) vs. MindEye1

A.4 Reconstruction Evaluations Across Varying Amounts of Training Data

A.5 Single-Subject Evaluations

A.7 OpenCLIP BigG to CLIP L Conversion

A.9 Reconstruction Evaluations: Additional Information

A.10 Pretraining with Less Subjects

A.11 UMAP Dimensionality Reduction

A.13 Human Preference Experiments

A.10 Pretraining with Less Subjects

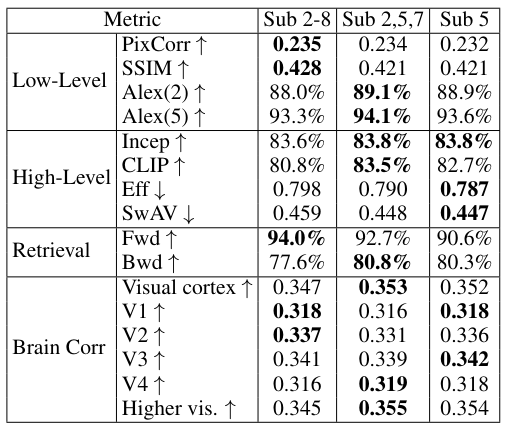

To determine the relative impact of using additional subjects for pretraining, we fine-tuned two separate MindEye2 models for subject 1 (each using 1 hour of that subject's training data): one pretrained only on subjects 2, 5, and 7 (the subjects who completed all 40 scanning sessions), and one pretrained only on subject 5 (the subject whose single-subject model performed best). Results in Table 10 show that both models perform similarly to the model pretrained on the full set of available subjects, suggesting that the number of pretraining subjects does not play a major role in subsequent fine-tuning performance.
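The three pretraining conditions compared here can be written down as a small configuration sketch. This is not the authors' code: the class `PretrainCondition`, the function `build_conditions`, and the condition names are hypothetical, and the sketch assumes a dataset of eight subjects numbered 1 to 8, so that "the full set of available subjects" means every subject other than the fine-tuning target.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumption (not stated in this section): eight subjects, numbered 1..8.
ALL_SUBJECTS: Tuple[int, ...] = (1, 2, 3, 4, 5, 6, 7, 8)
TARGET_SUBJECT = 1  # subject used for fine-tuning in this comparison


@dataclass(frozen=True)
class PretrainCondition:
    """One row of the comparison: who is pretrained on, who is fine-tuned."""
    name: str
    pretrain_subjects: Tuple[int, ...]
    finetune_subject: int
    finetune_hours: float


def build_conditions(
    all_subjects: Tuple[int, ...] = ALL_SUBJECTS,
    target: int = TARGET_SUBJECT,
) -> List[PretrainCondition]:
    """Return the three pretraining conditions described in the text."""
    # "Full set of available subjects" is taken to mean everyone but the target.
    held_out = tuple(s for s in all_subjects if s != target)
    conditions = [
        PretrainCondition("all_available", held_out, target, 1.0),
        PretrainCondition("completed_40_sessions", (2, 5, 7), target, 1.0),
        PretrainCondition("best_single_subject", (5,), target, 1.0),
    ]
    # Guard against leaking the fine-tuning subject into pretraining.
    for cond in conditions:
        if cond.finetune_subject in cond.pretrain_subjects:
            raise ValueError(f"{cond.name}: target subject appears in pretraining set")
    return conditions


if __name__ == "__main__":
    for cond in build_conditions():
        print(cond.name, cond.pretrain_subjects, "-> fine-tune subject", cond.finetune_subject)
```

Keeping the fine-tuning subject and the amount of fine-tuning data fixed across conditions means that any difference in the evaluation metrics can be attributed to the pretraining set alone.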


:::info This paper is available on arXiv under the CC BY 4.0 DEED license.

:::

:::info Authors:

(1) Paul S. Scotti, Stability AI and Medical AI Research Center (MedARC);

(2) Mihir Tripathy, Medical AI Research Center (MedARC), core contribution;

(3) Cesar Kadir Torrico Villanueva, Medical AI Research Center (MedARC), core contribution;

(4) Reese Kneeland, University of Minnesota, core contribution;

(5) Tong Chen, The University of Sydney and Medical AI Research Center (MedARC);

(6) Ashutosh Narang, Medical AI Research Center (MedARC);

(7) Charan Santhirasegaran, Medical AI Research Center (MedARC);

(8) Jonathan Xu, University of Waterloo and Medical AI Research Center (MedARC);

(9) Thomas Naselaris, University of Minnesota;

(10) Kenneth A. Norman, Princeton Neuroscience Institute;

(11) Tanishq Mathew Abraham, Stability AI and Medical AI Research Center (MedARC).

:::


Image Recognition | Sciencx (2025-04-16T01:42:51+00:00) Pretraining Efficiency: MindEye2’s Performance with Fewer Subjects. Retrieved from https://www.scien.cx/2025/04/16/pretraining-efficiency-mindeye2s-performance-with-fewer-subjects/
