This content originally appeared on HackerNoon and was authored by Abdellatif Abdelfattah

Yesterday, OpenAI announced GPT-4.1, featuring a staggering 1M-token context window and perfect needle-in-a-haystack accuracy. Gemini 2.5 now matches that 1M-token benchmark, with up to 10M tokens available in research settings. As the founder of a RAG-as-a-service startup, my inbox quickly filled with messages claiming this was the end of Retrieval-Augmented Generation (RAG)—suggesting it was time for us to pivot.

\ Not so fast.

The Allure—and the Reality—of Large Context Windows

On the surface, ultra-large context windows are attractive. They promise:

- Easy handling of vast amounts of data

- Simple API-driven interactions directly from LLM providers

- Perfect recall of information embedded within the provided context

\ But here's the catch: anyone who's tried large-context deployments in production knows reality quickly diverges from these promises.

Cost and Speed: The Hidden Bottlenecks

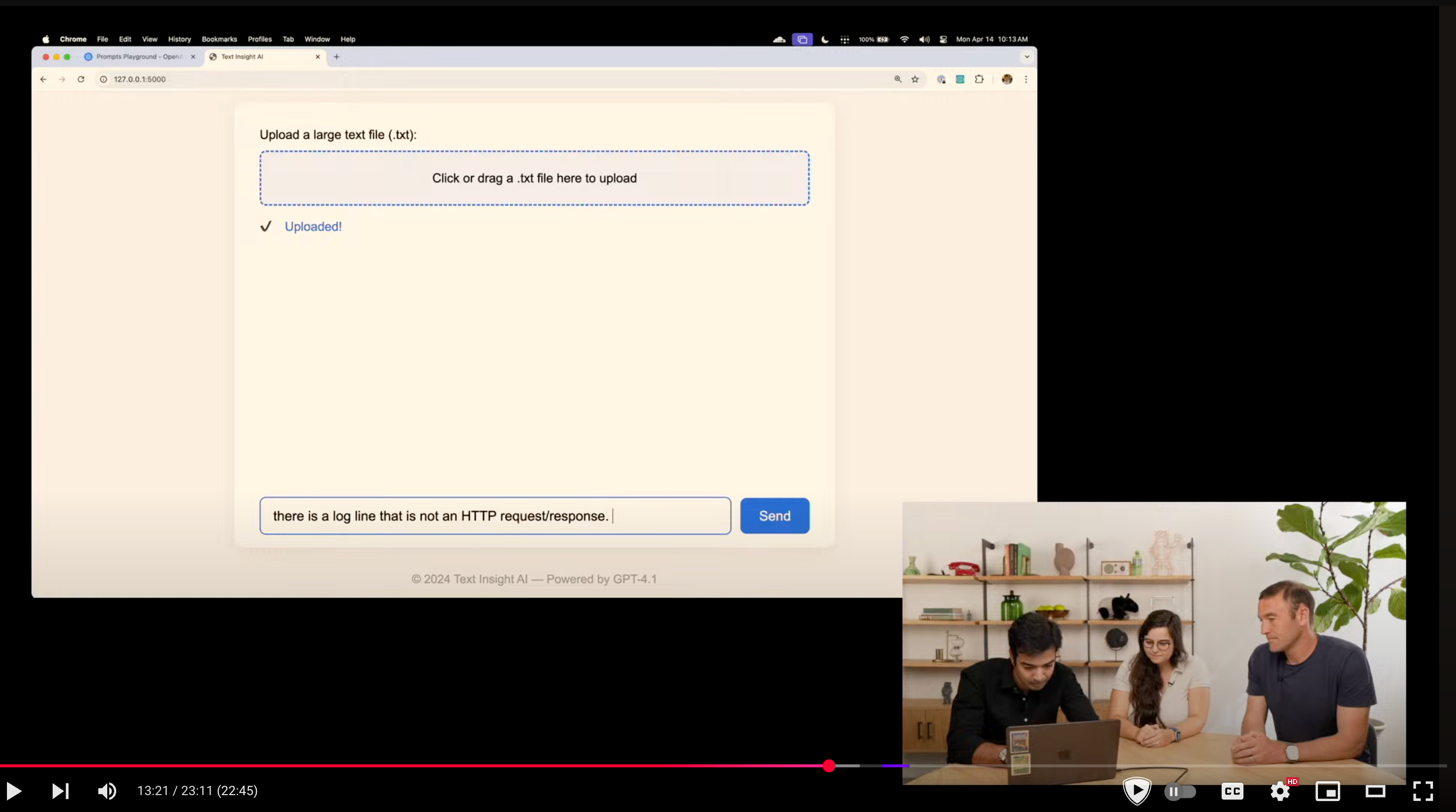

Consider the math: a typical RAG query uses around 1K tokens. Increasing the context window to 1M tokens boosts your cost 1000-fold—from about $0.002 to $2 per query. Yesterday's GPT-4.1 demo by OpenAI took 76 seconds for a single 456K-token request—so slow that even the demo team momentarily wondered if it had stalled.

Agentic Workflows Amplify the Problem

In modern AI applications, workflows are increasingly becoming agentic, meaning multiple LLM calls and steps before a final result emerges. The cost and latency problems compound exponentially. Large-context approaches quickly become untenable for production-scale, iterative workflows.

Citations: A Critical Gap in Large Context Models

Large-context LLMs lack built-in citation support. Users expect verifiable results and the ability to reference original sources. RAG systems solve this elegantly by pinpointing the exact chunks of content used to generate answers, enabling transparency and trust.

Scale Matters: Context Windows Alone Aren't Enough

Even at 1M tokens (~20 books), large contexts fall dramatically short for serious enterprise applications. Consider one of our clients, whose content database clocks in at a staggering 6.1 billion tokens. A 10M or even 100M context window won't scratch the surface. Tokenomics collapse at this scale, making RAG indispensable.

The Future of RAG

Far from obsolete, RAG remains the most scalable, verifiable, and cost-effective way to manage and query enterprise-scale data. Yes, future breakthroughs may eventually bridge these gaps. But until then—and despite recent advancements—we're doubling down on RAG.

\ TL;DR: GPT-4.1's 1M-token context window is impressive but insufficient for real-world use cases. RAG isn't dead; it's still the backbone of enterprise-scale AI.

This content originally appeared on HackerNoon and was authored by Abdellatif Abdelfattah

Abdellatif Abdelfattah | Sciencx (2025-04-17T09:30:15+00:00) GPT-4.1’s 1M-token Context Window is Impressive but Insufficient for Real-world Use Cases. Retrieved from https://www.scien.cx/2025/04/17/gpt-4-1s-1m-token-context-window-is-impressive-but-insufficient-for-real-world-use-cases/

Please log in to upload a file.

There are no updates yet.

Click the Upload button above to add an update.