This content originally appeared on DEV Community and was authored by Team Timescale

It's Timescale Launch Week and we’re bringing benchmarks: Postgres vs. Qdrant on 50M Embeddings.

There’s a belief in the AI infrastructure world that you need to abandon general-purpose databases to get great performance on vector workloads. The logic goes: Postgres is great for transactions, but when you need high-performance vector search, it’s time to bring in a specialized vector database like Qdrant.

That logic doesn’t hold—just like it didn’t when we benchmarked pgvector vs. Pinecone.

Like everything in Launch Week, this is about speed without sacrifice. And in this case, Postgres delivers both.

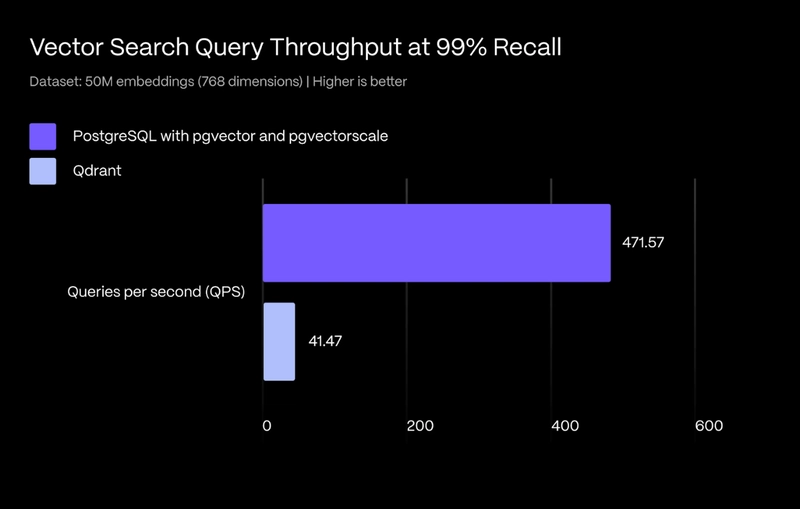

We’re releasing a new benchmark that challenges the assumption that you can only scale with a specialized vector database. We compared Postgres (with pgvector and pgvectorscale) to Qdrant on a massive dataset of 50 million embeddings. The results show that Postgres not only holds its own but also delivers standout throughput and latency, even at production scale.

This post summarizes the key takeaways, but it’s just the beginning. Check out the full benchmark blog post on query performance, developer experience, and operational experience.

Let’s dig into what we found and what it means for teams building production AI applications.

The Benchmark: Postgres vs. Qdrant on 50M Embeddings

We tested Postgres and Qdrant on a level playing field:

- 50 million embeddings, each with 768 dimensions

- ANN-benchmarks, the industry-standard benchmarking tool

- Focused on approximate nearest neighbor (ANN) search, no filtering

- All benchmarks run on identical AWS hardware
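Because the search is approximate, throughput and latency numbers only mean something alongside recall: the share of the true nearest neighbors that the index actually returns. The snippet below is a minimal sketch of how recall@k can be computed against a brute-force ground truth. The function names, the tiny random dataset, and the simulated "approximate" result are all illustrative assumptions and are not taken from the benchmark harness.

```python
import math
import random


def cosine_distance(a, b):
    """Cosine distance between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def exact_top_k(query, vectors, k):
    """Brute-force ground truth: indices of the k vectors closest to the query."""
    ranked = sorted(range(len(vectors)), key=lambda i: cosine_distance(query, vectors[i]))
    return ranked[:k]


def recall_at_k(approx_ids, exact_ids):
    """Fraction of the true top-k neighbors that the approximate result also contains."""
    return len(set(approx_ids) & set(exact_ids)) / len(exact_ids)


# Toy data (assumption): 200 random 8-dimensional vectors instead of 50M x 768.
random.seed(0)
vectors = [[random.gauss(0, 1) for _ in range(8)] for _ in range(200)]
query = [random.gauss(0, 1) for _ in range(8)]

truth = exact_top_k(query, vectors, k=10)

# Simulate an ANN index that found 9 of the 10 true neighbors and one wrong id.
wrong_id = next(i for i in range(len(vectors)) if i not in truth)
approx = truth[:9] + [wrong_id]

print(recall_at_k(approx, truth))  # 0.9
```

A fair comparison between two systems tunes both to a similar recall level before comparing queries per second or latency percentiles.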

The takeaway? Postgres with pgvector and pgvectorscale showed significantly higher throughput while maintaining sub-100 ms latencies. Qdrant performed strongly on tail latencies and index build speed, but Postgres pulled ahead on throughput, which matters most for teams scaling to production workloads.

For the complete results, including detailed performance metrics, graphs, and testing configurations, read the full benchmark blog post.

Why It Matters: AI Performance Without the Rewrite

These results aren’t just a technical curiosity. They have real implications for how you architect your AI stack:

- Production-grade latency: Postgres with pgvectorscale delivers sub-100 ms p99 latencies needed to power real-time or responsive AI applications.

- Higher concurrency: Postgres delivered significantly higher throughput, meaning you can support more simultaneous users without scaling out as aggressively.

- Lower complexity: You don't need to manage and integrate a separate, specialized vector database.

- Operational familiarity: You leverage the reliability, tooling, and operational practices you already have with Postgres.

- SQL-first development: You can filter, join, and integrate vector search naturally with relational data, without learning new APIs or query languages.
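To make the SQL-first point concrete, here is a rough sketch of what a combined relational and vector query could look like when issued from Python. The `documents` and `customers` tables, their columns, and the helper functions are hypothetical names invented for illustration. The `diskann` index type and the `<=>` cosine-distance operator come from pgvectorscale and pgvector, and the `search` helper assumes a psycopg-style connection that accepts `%(name)s` parameters.

```python
# Hypothetical schema for illustration only:
#   customers(id, plan)
#   documents(id, customer_id, body, embedding vector(768))

SETUP_SQL = """
CREATE EXTENSION IF NOT EXISTS vectorscale CASCADE;  -- CASCADE also installs pgvector

CREATE TABLE IF NOT EXISTS documents (
    id          bigserial PRIMARY KEY,
    customer_id bigint NOT NULL,
    body        text   NOT NULL,
    embedding   vector(768)
);

-- StreamingDiskANN index from pgvectorscale, using cosine distance
CREATE INDEX IF NOT EXISTS documents_embedding_idx
    ON documents USING diskann (embedding vector_cosine_ops);
"""

SEARCH_SQL = """
SELECT d.id, d.body, d.embedding <=> %(query)s::vector AS distance
FROM documents AS d
JOIN customers AS c ON c.id = d.customer_id
WHERE c.plan = %(plan)s
ORDER BY d.embedding <=> %(query)s::vector
LIMIT %(k)s;
"""


def to_vector_literal(values):
    """Format a Python sequence as a pgvector text literal such as '[0.1,0.2]'."""
    return "[" + ",".join(str(float(v)) for v in values) + "]"


def search(conn, query_embedding, plan, k=10):
    """Run the joined vector search on an open psycopg-style connection (assumed)."""
    params = {"query": to_vector_literal(query_embedding), "plan": plan, "k": k}
    with conn.cursor() as cur:
        cur.execute(SEARCH_SQL, params)
        return cur.fetchall()


print(to_vector_literal([0.1, 0.2]))  # [0.1,0.2]
```

The join and the `WHERE` clause are ordinary SQL; only the `ORDER BY ... <=> ...` expression is specific to vector search. Note that the benchmark itself measured unfiltered search, so how the planner combines the filter with the index is something to verify on your own data.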

Postgres with pgvector and pgvectorscale gives you the performance of a specialized vector database without giving up the ecosystem, tooling, and developer experience that make Postgres the world’s most popular database.

You don’t need to split your stack to do vector search.

What Makes It Work: Pgvectorscale and StreamingDiskANN

How can Postgres compete with (and outperform) purpose-built vector databases?

The answer lies in pgvectorscale (part of the pgai family), which implements the StreamingDiskANN index (a disk-based ANN algorithm built for scale) for pgvector. Combined with Statistical Binary Quantization (SBQ), it balances memory usage and performance better than traditional in-memory HNSW (hierarchical navigable small world) implementations.

That means:

- You can run large-scale vector search on standard cloud hardware.

- You don’t need massive memory footprints or expensive GPU-accelerated nodes.

- Performance holds steady even as your dataset grows to tens or hundreds of millions of vectors.

All while staying inside Postgres.
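To see why quantization changes the hardware picture, the following sketch does two things: it estimates the storage needed for the benchmark's vectors, and it shows a deliberately simplified form of binary quantization that thresholds each dimension at its mean. This is an illustration of the general idea only, not pgvectorscale's actual SBQ implementation. The function names are hypothetical, the bits-per-dimension value is a parameter rather than a claim about the extension's defaults, and the size estimates cover the vectors alone, without any index graph overhead.

```python
def raw_size_gb(num_vectors, dims, bytes_per_value=4):
    """Size of uncompressed float32 vectors in gigabytes (10^9 bytes)."""
    return num_vectors * dims * bytes_per_value / 1e9


def quantized_size_gb(num_vectors, dims, bits_per_dim=1):
    """Size of the same vectors when each dimension is stored in a few bits."""
    return num_vectors * dims * bits_per_dim / 8 / 1e9


def fit_thresholds(vectors):
    """Per-dimension mean, used as the cut-off between a 0 bit and a 1 bit."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def quantize(vector, thresholds):
    """Pack a vector into an int: bit i is set when vector[i] exceeds thresholds[i]."""
    code = 0
    for i, (value, threshold) in enumerate(zip(vector, thresholds)):
        if value > threshold:
            code |= 1 << i
    return code


def hamming(a, b):
    """Number of differing bits, a cheap stand-in for vector distance."""
    return bin(a ^ b).count("1")


# Storage estimate for the benchmark's dataset shape (50M vectors, 768 dimensions).
print(raw_size_gb(50_000_000, 768))                        # 153.6
print(quantized_size_gb(50_000_000, 768, bits_per_dim=1))  # 4.8

# Toy quantization example with three 3-dimensional vectors.
vectors = [[0.9, 0.1, 0.7], [0.8, 0.2, 0.6], [0.1, 0.9, 0.2]]
thresholds = fit_thresholds(vectors)
codes = [quantize(v, thresholds) for v in vectors]
print(hamming(codes[0], codes[1]))  # 0: the two similar vectors get the same code
print(hamming(codes[0], codes[2]))  # 3: the dissimilar vector differs in every bit
```

The compact codes are small enough to keep hot in memory for fast candidate selection, while the full-precision vectors can stay on disk and be used to re-rank a short candidate list. That split is the general idea behind pairing a disk-based index with quantization.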

When to Choose Postgres, and When Not To

To be clear: Qdrant is a capable system. It has faster index builds and lower tail latencies. It’s a strong choice if you’re not already using Postgres, or for specific use cases that need native scale-out and purpose-built vector semantics.

However, for many teams, especially those already invested in Postgres, it makes no sense to introduce a new database just to support vector search.

If you want high recall, high throughput, and tight integration with your existing stack, Postgres is more than enough.

Want to Try It?

Pgvector and pgvectorscale are open source and available today:

- pgvector GitHub

- pgvectorscale GitHub

- Or save time and access both by creating a free Timescale Cloud account

Vector search in Postgres isn’t a hack or a workaround. It’s fast, it scales, and it works. If you’re building AI applications in 2025, you don’t have to sacrifice your favorite database to move fast.

Up Next at Timescale Launch Week

Next up, we’re taking Postgres even further: Learn how to stream external S3 data into Postgres with livesync for S3 and work with S3 data in place using the pgai Vectorizer. Two powerful ways to seamlessly integrate external data from S3 directly into your Postgres workflows!

Team Timescale | Sciencx (2025-04-30T16:01:47+00:00) Postgres vs. Qdrant: Why Postgres Wins for AI and Vector Workloads. Retrieved from https://www.scien.cx/2025/04/30/postgres-vs-qdrant-why-postgres-wins-for-ai-and-vector-workloads/
