This content originally appeared on Level Up Coding - Medium and was authored by Pavlo Kolodka

A practical guide to understanding JSON’s core principles by re-creating it in JavaScript.

Introduction

I believe you truly understand something only when you can reimplement it. That’s when you encounter edge cases, hidden complexities, and unexpected design decisions — things you might never notice by just using the tool.

Previously, we explored the inner workings of JavaScript’s Promise by implementing it ourselves. If you haven’t read that article, you can check it out here:

I wrote my own Promise implementation

Today, we’re going to write our own JSON serializer/deserializer to better understand how things work under the hood.

History of JSON

JSON (JavaScript Object Notation) is a lightweight data interchange format that was discovered and popularized by Douglas Crockford in the early 2000s. It is based on a subset of the JavaScript programming language, although JSON is language-independent and has been adopted across virtually all programming environments.

JSON emerged from the need for simple data exchange between web browsers and servers. Once AJAX took off, JSON overtook XML (the “X” in the AJAX acronym) in popularity thanks to its simplicity, and today it is the de facto standard data interchange format on the web.

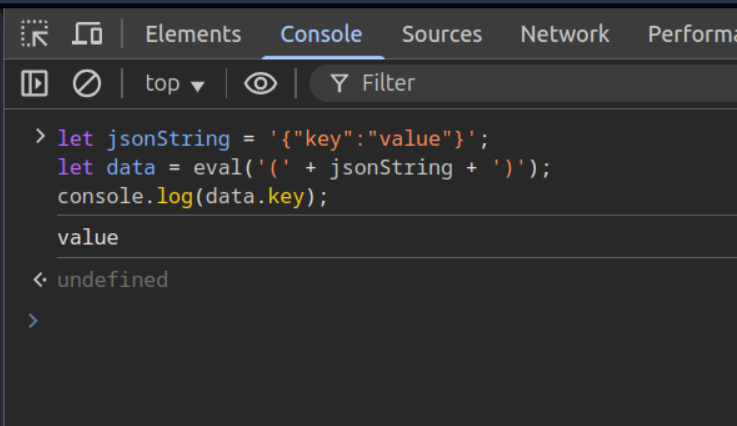

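As mentioned above, JSON’s syntax comes from JavaScript. Since ES2019, which permitted the U+2028 and U+2029 separator characters inside string literals, JSON is a strict subset of JavaScript: every valid JSON text is also a valid JavaScript expression, and every JSON value maps directly onto a JavaScript type.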

Syntax

JSON supports a limited set of data types:

- Strings: "text"

- Numbers: 42, 3.14

- Booleans: true, false

- Null: null

- Objects: { "key": "value" }

- Arrays: [1, 2, 3]

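All strings and object keys must be enclosed in double quotes, and inside a string, double quotes, backslashes, and control characters must be escaped with a backslash (for example \" or \n). Numbers are decimal numbers with an optional leading minus sign, an optional fractional part, and an optional exponent in E-notation; leading zeros, a leading plus sign, NaN, and Infinity are not allowed. Arrays are heterogeneous (their elements may have different types) and are delimited by “[” and “]”. Objects are collections of key/value pairs delimited by curly braces “{}”; commas separate the pairs, and a colon “:” separates each key from its value. Whitespace is allowed between tokens.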
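These rules are stricter than JavaScript’s own literal syntax. A quick way to see them in action, and a handy reference for testing a hand-written parser later, is to feed a few near-JSON strings to the built-in JSON.parse. The `isValidJSON` wrapper below is a hypothetical helper for illustration only:

```javascript
// Hypothetical helper: returns true if the built-in JSON.parse accepts the text.
function isValidJSON(text) {
  try {
    JSON.parse(text);
    return true;
  } catch {
    return false;
  }
}

console.log(isValidJSON('{"key": [1, -2.5, 3e8, true, null]}')); // true
console.log(isValidJSON("{'key': 1}")); // false: single quotes
console.log(isValidJSON("{key: 1}"));   // false: unquoted key
console.log(isValidJSON("[1, 2,]"));    // false: trailing comma
console.log(isValidJSON("01"));         // false: leading zero
console.log(isValidJSON("+1"));         // false: leading plus sign
console.log(isValidJSON("1."));         // false: no digits after "."
console.log(isValidJSON("NaN"));        // false: NaN is not a JSON value
```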

Serializer

Serialization is the process of transforming data into a format that can be stored or transmitted, with the ability to restore it to its original form. Examples of such formats include CSV, JSON, XML, YAML, protobuf, and more.

In JavaScript, serialization to JSON is called stringification, after the built-in JSON.stringify method. It essentially boils down to the following string manipulations:

- All data types are converted to their string representation, for example, using String() casting.

- Strings and object keys are additionally wrapped in double quotes, with double quotes, backslashes, and control characters inside them escaped.

- undefined, functions, and symbols are omitted when they are object property values and replaced with null inside arrays; passed directly to stringify, they make it return undefined.

- Infinity, -Infinity, and NaN are serialized as null. Trying to serialize a BigInt throws a TypeError.

- Dates are serialized as ISO 8601 strings (JSON.stringify does this by calling their toJSON method).

- Only own enumerable properties are taken into account. So Map, Set, WeakMap, WeakSet, and objects whose properties are all non-enumerable serialize to an empty object “{}”.
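Each of these rules can be observed directly with the built-in JSON.stringify. The snippet below just logs its output for a few edge cases, which makes a convenient reference when testing a custom implementation:

```javascript
// How the built-in JSON.stringify treats the cases listed above.
console.log(JSON.stringify({ a: undefined, b() {}, c: Symbol("c"), d: 1 })); // {"d":1}
console.log(JSON.stringify([undefined, () => {}, Symbol("s")]));            // [null,null,null]
console.log(JSON.stringify(undefined));                                      // undefined
console.log(JSON.stringify([NaN, Infinity, -Infinity]));                     // [null,null,null]
console.log(JSON.stringify(new Map([["k", "v"]])));                          // {}
console.log(JSON.stringify(new Date(0)));                                    // "1970-01-01T00:00:00.000Z"

// Non-enumerable properties are skipped.
const hidden = Object.defineProperty({ shown: 1 }, "hidden", { value: 2, enumerable: false });
console.log(JSON.stringify(hidden)); // {"shown":1}

// BigInt has no JSON representation.
try {
  JSON.stringify({ big: 10n });
} catch (err) {
  console.log(err instanceof TypeError); // true
}
```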

And here is the implementation:

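```javascript
/**
 * Serializes a JavaScript value into a JSON string.
 * Returns undefined for values JSON cannot represent at the top level
 * (undefined, functions, symbols), just like JSON.stringify.
 *
 * Note: for brevity, strings and keys are wrapped in quotes without
 * escaping special characters such as '"', '\' or newlines.
 *
 * @param {any} value
 * @returns {string | undefined}
 */
function stringify(value) {
  switch (typeof value) {
    case "number":
      // NaN, Infinity and -Infinity have no JSON representation.
      if (!Number.isFinite(value)) {
        return "null";
      }
      return String(value);

    case "bigint":
      throw new TypeError("Cannot serialize BigInt value");

    case "string":
      return `"${value}"`;

    case "boolean":
      return value ? "true" : "false";

    case "object":
      if (value === null) {
        return "null";
      }
      if (value instanceof Date) {
        // Invalid dates serialize to null, as with JSON.stringify.
        return Number.isNaN(value.getTime()) ? "null" : `"${value.toISOString()}"`;
      }
      if (Array.isArray(value)) {
        return stringifyArray(value);
      }
      return stringifyObject(value);
  }
  // "undefined", "function" and "symbol" match no case, so we return undefined.
}

function stringifyArray(value) {
  // Inside arrays, undefined, functions and symbols become null.
  const values = value
    .map((el) => (isInvalidJSONValue(el) ? stringify(null) : stringify(el)))
    .join(",");
  return `[${values}]`;
}

function stringifyObject(value) {
  // Object.entries yields only own enumerable string-keyed properties,
  // which is why Map and Set instances end up as "{}".
  const entries = Object.entries(value)
    // Properties holding undefined, functions or symbols are skipped entirely.
    .filter(([, val]) => !isInvalidJSONValue(val))
    .map(([key, val]) => `"${key}":${stringify(val)}`)
    .join(",");
  return `{${entries}}`;
}

// Values that JSON has no representation for.
function isInvalidJSONValue(value) {
  return (
    typeof value === "undefined" ||
    typeof value === "function" ||
    typeof value === "symbol"
  );
}
```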

Let’s test it:

```javascript
const user = {
  name: "John Doe",
  age: 30,
  isActive: true,
  hobbies: ["reading", "traveling", "gaming"],
  address: {
    street: "123 Main St",
    city: "New York",
    postalCode: "10001",
  },
  spouse: null,
  middleName: undefined,
  greet: function () {
    return `Hello, my name is ${this.name}.`;
  },
};

console.log(stringify(user));
// Outputs a single line without whitespace; middleName and greet are dropped:
// {"name":"John Doe","age":30,"isActive":true,"hobbies":["reading","traveling","gaming"],"address":{"street":"123 Main St","city":"New York","postalCode":"10001"},"spouse":null}
```

Deserializer

Deserialization is the opposite process of serialization.

To implement a deserializer, we need to write a parser that takes an arbitrary string and either returns the corresponding JavaScript value or throws an error if the string is not valid JSON.

Parsing usually consists of two stages: lexical analysis and syntactic analysis. During lexical analysis, the input text is broken down into the smallest meaningful units of the language, called tokens. During syntactic analysis, that flat list of tokens is turned into a structured representation (here, nested JavaScript values) for subsequent processing.

Lexer

Implementing the lexer is probably the most difficult part of this project. First, we need to define the set of token types that the lexer will look for in the input text.

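```javascript
const TokenType = {
  OPEN_OBJECT: "OPEN_OBJECT",
  CLOSE_OBJECT: "CLOSE_OBJECT",
  COLON: "COLON",
  COMMA: "COMMA",
  OPEN_ARRAY: "OPEN_ARRAY",
  CLOSE_ARRAY: "CLOSE_ARRAY",
  NUMBER: "NUMBER",
  STRING: "STRING",
  NULL: "NULL",
  BOOLEAN: "BOOLEAN",
};
```

A token itself would look like this:

```javascript
// Every token has the shape { type: TokenType, value: any }, for example:
const exampleToken = { type: TokenType.NUMBER, value: 42 };
```

Then we go through the input character by character and try to match each position against one of the defined token types, capturing its value.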

```javascript
// Inside the lexer's main loop: `text` is the input, `charIndex` the current position.
let char = text[charIndex];

if (char === Char.WHITESPACE) {
  charIndex++;
  continue;
}

if (char === Char.DOUBLE_QUOTE) {
  ...
}

if (RegEx.NUMBER_START.test(char)) {
  ...
}

if (RegEx.TRUE.test(text.slice(charIndex))) {
  ...
}

if (RegEx.FALSE.test(text.slice(charIndex))) {
  ...
}

if (RegEx.NULL.test(text.slice(charIndex))) {
  ...
}

...
```
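The string branch is elided in the excerpt above. As a sketch of one way such a branch could work (our own, not taken from the author’s source), a helper can scan from the opening quote to the closing one and decode backslash escapes along the way. `readString` and `SIMPLE_ESCAPES` are hypothetical names; the helper returns both the decoded value and the index just past the closing quote, so a main loop could continue from there:

```javascript
// Hypothetical escape table: the character after "\" mapped to what it stands for.
const SIMPLE_ESCAPES = new Map([
  ['"', '"'], ["\\", "\\"], ["/", "/"],
  ["b", "\b"], ["f", "\f"], ["n", "\n"], ["r", "\r"], ["t", "\t"],
]);

// Reads a JSON string literal whose opening quote is at text[start].
// Returns the decoded value and the index just past the closing quote.
function readString(text, start) {
  if (text[start] !== '"') {
    throw new Error(`Expected '"' at position ${start}`);
  }
  let value = "";
  let i = start + 1; // skip the opening quote

  while (i < text.length) {
    const ch = text[i];
    if (ch === '"') {
      return { value, end: i + 1 };
    }
    if (ch.charCodeAt(0) < 0x20) {
      // JSON forbids raw control characters (U+0000 to U+001F) inside strings.
      throw new Error(`Unescaped control character at position ${i}`);
    }
    if (ch !== "\\") {
      value += ch;
      i++;
      continue;
    }

    const esc = text[i + 1];
    if (SIMPLE_ESCAPES.has(esc)) {
      value += SIMPLE_ESCAPES.get(esc);
      i += 2;
    } else if (esc === "u") {
      const hex = text.slice(i + 2, i + 6);
      if (!/^[0-9a-fA-F]{4}$/.test(hex)) {
        throw new Error(`Invalid \\u escape at position ${i}`);
      }
      // Surrogate pairs arrive as two \u escapes and combine naturally in a JS string.
      value += String.fromCharCode(parseInt(hex, 16));
      i += 6;
    } else {
      throw new Error(`Invalid escape \\${esc} at position ${i}`);
    }
  }

  throw new Error(`Unterminated string starting at position ${start}`);
}

console.log(readString('"a\\"b\\u00e9" rest', 0)); // { value: 'a"bé', end: 12 }
```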

One of the trickier parts is lexing numbers. The JSON spec allows an optional leading minus sign followed by an integer part, an optional fractional part, and an optional exponent (scientific notation).

With that in mind, my approach was to look at the current character: if it’s a digit or a minus sign, we have presumably found the start of a number. After that, we buffer the following characters until we encounter something that’s not a digit, “.”, or “e”/“E”. Then we join the buffer and try to convert it to a number.

```javascript
if (RegEx.NUMBER_START.test(char)) {
  // checkBoolOrNull is part of the full source, not shown in this excerpt.
  checkBoolOrNull(tokens, char);

  // Buffer every character that can belong to a number literal.
  const value = [char];
  charIndex++;
  char = text[charIndex];
  while (RegEx.NUMBER.test(char)) {
    value.push(char);
    charIndex++;
    char = text[charIndex];
  }

  // Validate the whole literal against the JSON number grammar: optional "-",
  // no leading zeros, digits required after ".", optional exponent.
  // Number() alone is too lenient: it accepts "01", "1." and "-.5".
  const literal = value.join("");
  if (!/^-?(0|[1-9]\d*)(\.\d+)?([eE][+-]?\d+)?$/.test(literal)) {
    throw new Error(`Invalid number literal: ${literal}`);
  }

  // charIndex already points at the first character after the number.
  tokens.push({ type: TokenType.NUMBER, value: Number(literal) });
  continue;
}
```

For objects and arrays, we lex the structural tokens “{”, “}”, “:”, “[”, “]”, and “,” and check that they appear in a valid sequence.

```javascript
  {
    ...

    // OBJECT
    if (char === Char.OPEN_OBJECT) {
      charIndex++;
      // Peek at the next non-whitespace character: only a key or "}" may follow "{".
      const nextChar = text
        .slice(charIndex)
        .replace(RegEx.ALL_WHITESPACES, "")[0];
      if (nextChar !== Char.DOUBLE_QUOTE && nextChar !== Char.CLOSE_OBJECT) {
        throw new Error(`Unexpected token after '{': ${nextChar}`);
      }
      tokens.push({ type: TokenType.OPEN_OBJECT, value: char });
      numberOfLeftBrace++;
      continue;
    }

    if (char === Char.CLOSE_OBJECT) {
      // "}" is only valid while more "{" than "}" have been seen.
      if (numberOfLeftBrace < numberOfRightBrace + 1) {
        throw new Error(`Unexpected close object: ${char}`);
      }
      tokens.push({ type: TokenType.CLOSE_OBJECT, value: char });
      numberOfRightBrace++;
      charIndex++;
      continue;
    }

    if (char === Char.COLON) {
      // A colon must directly follow a string key (and cannot start the input).
      const previousToken = tokens[tokens.length - 1];
      if (!previousToken || previousToken.type !== TokenType.STRING) {
        throw new Error(`Unexpected colon: ${char}`);
      }
      tokens.push({ type: TokenType.COLON, value: char });
      charIndex++;
      continue;
    }

    if (char === Char.COMMA) {
      // A comma is only meaningful once an object or array has been opened.
      const openBrace = tokens.find((el) => el.type === TokenType.OPEN_OBJECT);
      const openBracket = tokens.find((el) => el.type === TokenType.OPEN_ARRAY);
      if (!openBrace && !openBracket) {
        throw new Error(`Unexpected non-whitespace character: ${char}`);
      }
      charIndex++;
      // Reject trailing commas and empty elements such as [1,] or [1,,2].
      const nextChar = text
        .slice(charIndex)
        .replace(RegEx.ALL_WHITESPACES, "")[0];
      if (
        nextChar === Char.CLOSE_OBJECT ||
        nextChar === Char.CLOSE_ARRAY ||
        nextChar === Char.COMMA ||
        nextChar === Char.COLON
      ) {
        throw new Error(`Unexpected token: ${nextChar}`);
      }
      tokens.push({ type: TokenType.COMMA, value: char });
      continue;
    }

    // ARRAY
    if (char === Char.OPEN_ARRAY) {
      charIndex++;
      const nextChar = text[charIndex];
      if (!nextChar) {
        throw new Error("Unexpected end of input after '['");
      }
      tokens.push({ type: TokenType.OPEN_ARRAY, value: char });
      numberOfLeftBracket++;
      continue;
    }

    if (char === Char.CLOSE_ARRAY) {
      // "]" is only valid while more "[" than "]" have been seen.
      if (numberOfLeftBracket < numberOfRightBracket + 1) {
        throw new Error(`Unexpected close array: ${char}`);
      }
      tokens.push({ type: TokenType.CLOSE_ARRAY, value: char });
      numberOfRightBracket++;
      charIndex++;
      continue;
    }

    throw new Error(`Unexpected token: ${char}`);
  } // end of the main loop

  // After the loop, every opened object and array must have been closed.
  if (numberOfLeftBrace !== numberOfRightBrace) {
    throw new Error(`Expected ',' or '}' after property value`);
  }
  if (numberOfLeftBracket !== numberOfRightBracket) {
    throw new Error(`Expected ',' or ']' after array element`);
  }
} // end of the lexer function
```

Now that the input is tokenized, we can move on to the second stage: parsing.
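Before moving on, one caveat: counting braces and brackets separately checks that they balance, but not that they nest correctly. For example, [{"a":1]} contains one of each and passes both counters, even though the array is closed before the object. A common fix, sketched below as an alternative rather than the author’s code, is to keep a stack of expected closing tokens. `checkNesting` is a hypothetical name, and it assumes tokens shaped like the lexer’s { type, value } objects:

```javascript
// Hypothetical helper: checks that OPEN_/CLOSE_ tokens nest properly by keeping
// a stack of the closing token types we still expect to see.
function checkNesting(tokens) {
  const expectedClosers = [];
  tokens.forEach((token, index) => {
    if (token.type === "OPEN_OBJECT") {
      expectedClosers.push("CLOSE_OBJECT");
    } else if (token.type === "OPEN_ARRAY") {
      expectedClosers.push("CLOSE_ARRAY");
    } else if (token.type === "CLOSE_OBJECT" || token.type === "CLOSE_ARRAY") {
      // The most recently opened container must be the one being closed.
      if (expectedClosers.pop() !== token.type) {
        throw new Error(`Mismatched '${token.value}' at token ${index}`);
      }
    }
  });
  if (expectedClosers.length > 0) {
    throw new Error("Unexpected end of input: unclosed object or array");
  }
}

// Tokens for [{"a":1]}: balanced counts, but the array closes before the object.
const mismatched = [
  { type: "OPEN_ARRAY", value: "[" },
  { type: "OPEN_OBJECT", value: "{" },
  { type: "STRING", value: "a" },
  { type: "COLON", value: ":" },
  { type: "NUMBER", value: 1 },
  { type: "CLOSE_ARRAY", value: "]" },
  { type: "CLOSE_OBJECT", value: "}" },
];

try {
  checkNesting(mismatched);
} catch (err) {
  console.log(err.message); // Mismatched ']' at token 5
}
```

A stack also catches a closing token with no opener at all, since popping an empty stack yields undefined, which never equals the closing type.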

Parser

One of the main advantages of splitting deserialization into two parts is that most of the input validation has already happened in the lexer, so the parsing stage can focus on constructing the required output: JavaScript objects.

To parse strings, numbers, booleans, and null values, we just need to return their already prepared values.

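```javascript
// Position of the current token, shared by parseValue, parseObject and parseArray.
// (Assumed module-level cursor; reset it to 0 before parsing a new token list.)
let tokenIndex = 0;

/**
 * Parses the value that starts at tokens[tokenIndex].
 * @param {Array<{type: TokenType, value: any}>} tokens
 * @returns {any}
 */
function parseValue(tokens) {
  const token = tokens[tokenIndex];
  switch (token.type) {
    // Primitive values were already converted by the lexer.
    case TokenType.STRING:
    case TokenType.NUMBER:
    case TokenType.BOOLEAN:
    case TokenType.NULL:
      return token.value;
    case TokenType.OPEN_OBJECT:
      return parseObject(tokens);
    case TokenType.OPEN_ARRAY:
      return parseArray(tokens);
    default:
      throw new Error(`Unexpected token type: ${token.type}`);
  }
}
```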

To parse objects and arrays, we use recursion, since these types can be nested to any depth: each nested value is parsed by calling parseValue again.

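```javascript
/**
 * Parses an object; tokens[tokenIndex] is its "{".
 * On return, tokenIndex points at the matching "}".
 * @param {Array<{type: TokenType, value: any}>} tokens
 * @returns {Object}
 */
function parseObject(tokens) {
  const obj = {};
  tokenIndex++; // skip "{"
  let token = tokens[tokenIndex];

  while (token.type !== TokenType.CLOSE_OBJECT) {
    if (token.type === TokenType.STRING || token.type === TokenType.COMMA) {
      // Skip keys and commas; a key is read back once its colon is reached.
      tokenIndex++;
    } else if (token.type === TokenType.COLON) {
      const key = tokens[tokenIndex - 1];
      tokenIndex++; // move to the value
      obj[key.value] = parseValue(tokens);
      tokenIndex++; // move past the value
    } else {
      // Without this branch, an unexpected token (e.g. the "]" in [{"a":1]})
      // would never be consumed and the loop would spin forever.
      throw new Error(`Unexpected token in object: ${token.type}`);
    }
    token = tokens[tokenIndex];
  }

  return obj;
}

/**
 * Parses an array; tokens[tokenIndex] is its "[".
 * On return, tokenIndex points at the matching "]".
 * @param {Array<{type: TokenType, value: any}>} tokens
 * @returns {Array}
 */
function parseArray(tokens) {
  const arr = [];
  tokenIndex++; // skip "["
  let token = tokens[tokenIndex];

  while (token.type !== TokenType.CLOSE_ARRAY) {
    if (token.type === TokenType.COMMA) {
      tokenIndex++;
    } else {
      arr.push(parseValue(tokens));
      tokenIndex++; // move past the value (or past a nested value's closing token)
    }
    token = tokens[tokenIndex];
  }

  return arr;
}
```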
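The functions above communicate through a shared tokenIndex cursor and recover each key by looking one token back. An alternative design, which is our own sketch and not the author’s code, is a recursive-descent parser that keeps its cursor in a closure and explicitly expects a comma or a closing token after every value. `parseTokens` is a hypothetical name, and it assumes tokens shaped like { type, value } with the same type names as TokenType:

```javascript
// Sketch: parse a token array without a module-level cursor.
function parseTokens(tokens) {
  let index = 0;

  // Returns the current token and advances; throws if the input ran out.
  function next() {
    const token = tokens[index++];
    if (token === undefined) throw new Error("Unexpected end of input");
    return token;
  }

  function expect(type) {
    const token = next();
    if (token.type !== type) {
      throw new Error(`Expected ${type} but got ${token.type} at token ${index - 1}`);
    }
    return token;
  }

  function parseValue() {
    const token = next();
    switch (token.type) {
      case "STRING":
      case "NUMBER":
      case "BOOLEAN":
      case "NULL":
        return token.value;
      case "OPEN_OBJECT":
        return parseObject();
      case "OPEN_ARRAY":
        return parseArray();
      default:
        throw new Error(`Unexpected ${token.type} at token ${index - 1}`);
    }
  }

  // Called after "{" has been consumed.
  function parseObject() {
    const obj = {};
    if (tokens[index]?.type === "CLOSE_OBJECT") {
      index++;
      return obj;
    }
    while (true) {
      const key = expect("STRING").value;
      expect("COLON");
      // defineProperty keeps a "__proto__" key as an ordinary own property.
      Object.defineProperty(obj, key, {
        value: parseValue(), enumerable: true, writable: true, configurable: true,
      });
      const separator = next();
      if (separator.type === "CLOSE_OBJECT") return obj;
      if (separator.type !== "COMMA") throw new Error(`Unexpected ${separator.type} in object`);
    }
  }

  // Called after "[" has been consumed.
  function parseArray() {
    const arr = [];
    if (tokens[index]?.type === "CLOSE_ARRAY") {
      index++;
      return arr;
    }
    while (true) {
      arr.push(parseValue());
      const separator = next();
      if (separator.type === "CLOSE_ARRAY") return arr;
      if (separator.type !== "COMMA") throw new Error(`Unexpected ${separator.type} in array`);
    }
  }

  const result = parseValue();
  if (index !== tokens.length) {
    throw new Error(`Unexpected trailing ${tokens[index].type}`);
  }
  return result;
}

// Usage: tokens for {"a":[1,true,null]}, built with a small helper.
const t = (type, value) => ({ type, value });
const tokens = [
  t("OPEN_OBJECT", "{"), t("STRING", "a"), t("COLON", ":"),
  t("OPEN_ARRAY", "["), t("NUMBER", 1), t("COMMA", ","), t("BOOLEAN", true),
  t("COMMA", ","), t("NULL", null), t("CLOSE_ARRAY", "]"), t("CLOSE_OBJECT", "}"),
];
console.log(parseTokens(tokens)); // { a: [ 1, true, null ] }
```

Because this variant checks the token order itself, it rejects sequences such as [1,] or [{"a":1]} even if they slip past the lexer. Using Object.defineProperty also mirrors JSON.parse for a "__proto__" key, which a plain assignment would treat as a prototype change.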

Conclusion

That’s it! You’ve now built a basic, functioning JSON serializer/deserializer for JavaScript. While it’s far from perfect, you could enhance it with custom formatting options, support for string escaping, and more precise error reporting. Still, I hope you’ve learned something valuable from this article and that it inspires you to explore everyday tools and create something cool.
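As one example of such an enhancement, string escaping could be added with a small helper that takes the place of the bare template literal used for strings and object keys in stringify. The sketch below is our own (quoteJSONString is a hypothetical name): it escapes only what JSON requires (double quotes, backslashes, and control characters), using the short forms where they exist. Unlike the built-in JSON.stringify, it does not escape lone surrogate code units.

```javascript
// Short escape sequences defined by JSON; other control characters use \u00XX.
const SHORT_ESCAPES = {
  '"': '\\"', "\\": "\\\\", "\b": "\\b", "\f": "\\f", "\n": "\\n", "\r": "\\r", "\t": "\\t",
};

// Hypothetical helper: wraps a string in double quotes and escapes '"', '\'
// and control characters U+0000 to U+001F.
function quoteJSONString(str) {
  const escaped = str.replace(/["\\\u0000-\u001f]/g, (ch) =>
    SHORT_ESCAPES[ch] ?? "\\u" + ch.charCodeAt(0).toString(16).padStart(4, "0")
  );
  return `"${escaped}"`;
}

console.log(quoteJSONString('He said "hi"\n')); // "He said \"hi\"\n"
```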

The full source code for this article is available on GitHub.

Thank you for reading this article!

Any questions or suggestions? Feel free to write a comment.

I’d also be happy if you followed me and gave this article a few claps! 😊


Writing your own JSON serializer and deserializer was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.


Pavlo Kolodka | Sciencx (2025-06-20T02:08:24+00:00) Writing your own JSON serializer and deserializer. Retrieved from https://www.scien.cx/2025/06/20/writing-your-own-json-serializer-and-deserializer/
