This content originally appeared on HackerNoon and was authored by Cosmological thinking: time, space and universal causation

Table of Links

4. Ablations on synthetic data

5. Why does it work? Some speculation

7. Conclusion, Impact statement, Environmental impact, Acknowledgements and References

A. Additional results on self-speculative decoding

E. Additional results on model scaling behavior

F. Details on CodeContests finetuning

G. Additional results on natural language benchmarks

H. Additional results on abstractive text summarization

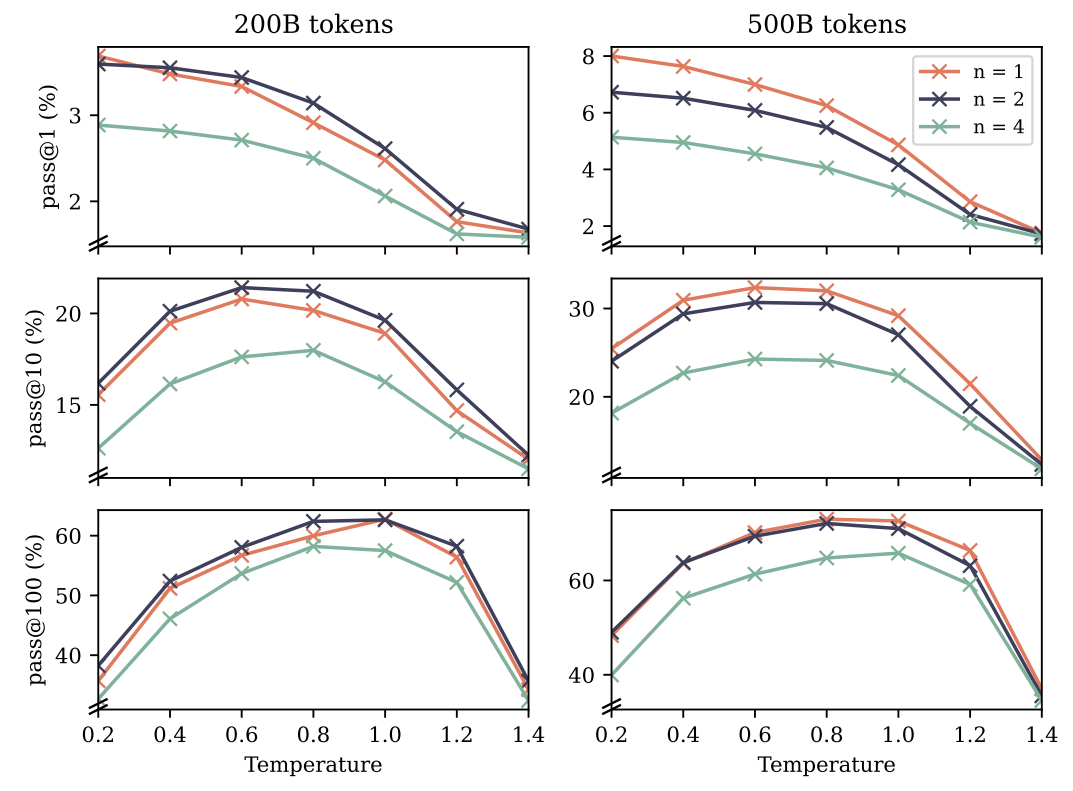

I. Additional results on mathematical reasoning in natural language

J. Additional results on induction learning

K. Additional results on algorithmic reasoning

L. Additional intuitions on multi-token prediction

I. Additional results on mathematical reasoning in natural language

:::info Authors:

(1) Fabian Gloeckle, FAIR at Meta, CERMICS Ecole des Ponts ParisTech and Equal contribution;

(2) Badr Youbi Idrissi, FAIR at Meta, LISN Université Paris-Saclay and Equal contribution;

(3) Baptiste Rozière, FAIR at Meta;

(4) David Lopez-Paz, FAIR at Meta and a last author;

(5) Gabriel Synnaeve, FAIR at Meta and a last author.

:::

:::info This paper is available on arXiv under CC BY 4.0 DEED license.

:::

Cosmological thinking: time, space and universal causation | Sciencx (2025-07-23T15:15:03+00:00) Strategic LLM Training: Multi-Token Prediction’s Data Efficiency in Mathematical Reasoning. Retrieved from https://www.scien.cx/2025/07/23/strategic-llm-training-multi-token-predictions-data-efficiency-in-mathematical-reasoning/
