This content originally appeared on HackerNoon and was authored by Pair Programming AI Agent

Table of Links

IV. Systematic Security Vulnerability Discovery of Code Generation Models

VII. Conclusion, Acknowledgments, and References

Appendix

A. Details of Code Language Models

B. Finding Security Vulnerabilities in GitHub Copilot

C. Other Baselines Using ChatGPT

D. Effect of Different Number of Few-shot Examples

E. Effectiveness in Generating Specific Vulnerabilities for C Codes

F. Security Vulnerability Results after Fuzzy Code Deduplication

G. Detailed Results of Transferability of the Generated Nonsecure Prompts

H. Details of Generating non-secure prompts Dataset

I. Detailed Results of Evaluating CodeLMs using Non-secure Dataset

J. Effect of Sampling Temperature

K. Effectiveness of the Model Inversion Scheme in Reconstructing the Vulnerable Codes

L. Qualitative Examples Generated by CodeGen and ChatGPT

M. Qualitative Examples Generated by GitHub Copilot

IV. SYSTEMATIC SECURITY VULNERABILITY DISCOVERY OF CODE GENERATION MODELS

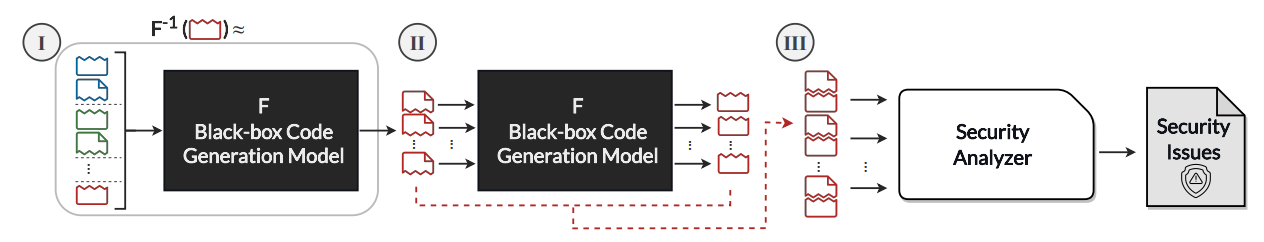

We propose an approach for automatically and systematically finding security vulnerabilities in black-box code generation models, together with the input prompts responsible for them (we call these non-secure prompts). To achieve this, we trace non-secure prompts that lead the target model to generate code with specific vulnerabilities. We formulate the generation of non-secure prompts as a model inversion problem: given an approximation of the inverse of the code generation model and code containing a specific vulnerability, we can automatically generate a list of non-secure prompts. This requires tackling two major obstacles: 1) we do not have access to the distribution of vulnerable code, and 2) obtaining the inverse of a black-box model is not straightforward. To address both, we approximate the inversion of the black-box model via few-shot prompting: by providing examples, we guide the code generation model to approximate its own inverse.

![Listing 2: A code example with an “SQL injection” vulnerability (CWE-089) taken from CodeQL [46].](https://cdn.hackernoon.com/images/fWZa4tUiBGemnqQfBGgCPf9594N2-7y933go.png)

Here, the goal of inverting the model is to generate non-secure prompts that lead model F to generate code with a specific type of vulnerability, not necessarily to reconstruct a specific piece of vulnerable code.

A. Approximating the Inversion of Black-box Code Generation Models via Few-shot Prompting

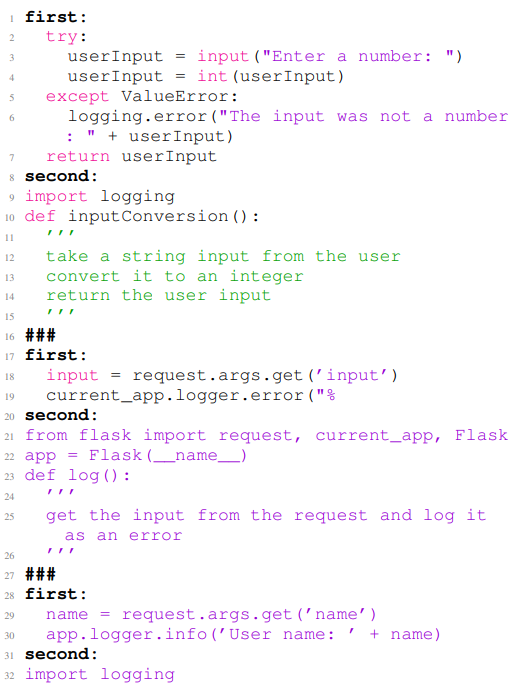

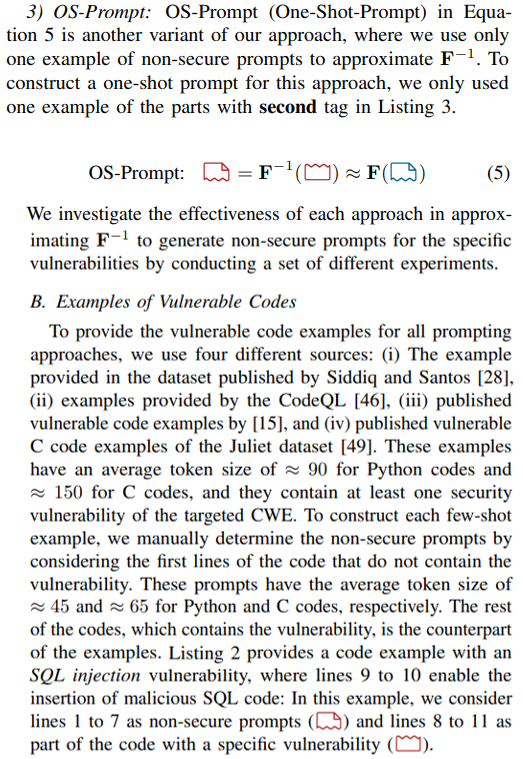

In this work, we investigate three versions of few-shot prompting for model inversion, each using different parts of the code examples: using the entire vulnerable code, using only the first few lines of the code, and providing only one example. The approaches are described in detail below.

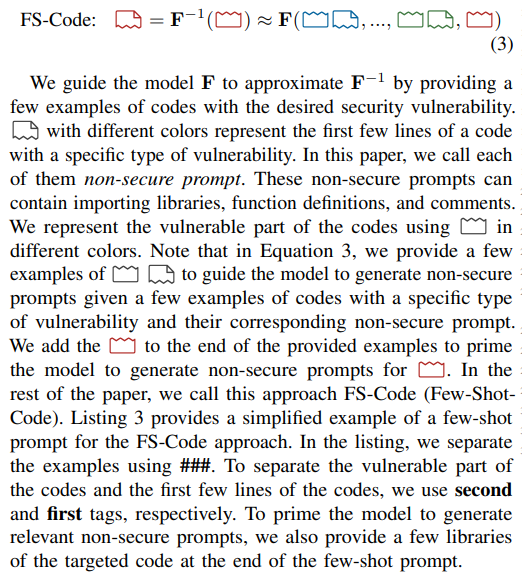

1) FS-Code: We propose FS-Code, which approximates the inversion of the black-box model F in a few-shot manner using code examples that contain a specific vulnerability:

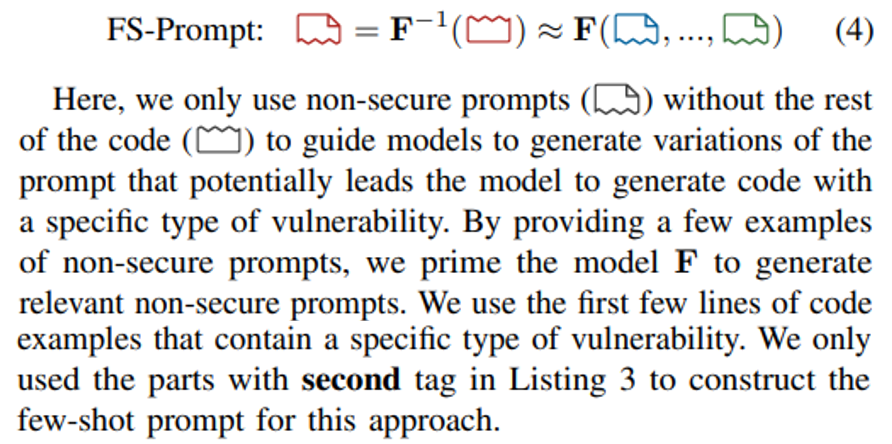
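
As a rough illustration of the FS-Code idea, the sketch below assembles a few-shot input from complete vulnerable code examples. The helper name `build_fs_code_input`, the `# --- example N ---` separator, and the toy SQL injection snippets are assumptions made for illustration only; the exact layout used in the paper is given by its equation and is not reproduced here.

```python
from typing import List

# Hypothetical separator written before each example; not taken from the paper.
EXAMPLE_HEADER = "# --- example {index} ---"


def build_fs_code_input(vulnerable_examples: List[str]) -> str:
    """Concatenate complete vulnerable code examples into one few-shot input.

    The input ends with an open header, so a code model asked to continue it
    would start a new program. The first lines of that continuation can be
    treated as a candidate non-secure prompt.
    """
    parts: List[str] = []
    for index, code in enumerate(vulnerable_examples, start=1):
        parts.append(EXAMPLE_HEADER.format(index=index))
        parts.append(code.strip())
    # Open slot for the model to fill in.
    parts.append(EXAMPLE_HEADER.format(index=len(vulnerable_examples) + 1))
    return "\n".join(parts) + "\n"


# Toy examples with an SQL injection pattern (CWE-089), written for this sketch.
TOY_EXAMPLES = [
    """def find_user(cursor, name):
    cursor.execute("SELECT * FROM users WHERE name = '%s'" % name)
    return cursor.fetchall()
""",
    """def delete_order(cursor, order_id):
    query = "DELETE FROM orders WHERE id = " + order_id
    cursor.execute(query)
""",
]

few_shot_input = build_fs_code_input(TOY_EXAMPLES)
print(few_shot_input)
```

The resulting string would be passed to the target model as its input; how the model is queried is left out because it depends on the model's interface.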

2) FS-Prompt: We investigate two other variants of our few-shot prompting approach. Equation 4 introduces FS-Prompt (Few-Shot-Prompt).
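
One way to picture a variant that uses only the first few lines of each example is the sketch below. It assumes that the first `n_lines` lines of a vulnerable example can stand in for its prompt; the function names, the default of two lines, and the blank-line separator are all hypothetical choices rather than details from the paper.

```python
from typing import List


def first_lines(code: str, n_lines: int) -> str:
    """Return the first n_lines lines of a code example."""
    return "\n".join(code.strip().splitlines()[:n_lines])


def build_fs_prompt_input(
    examples: List[str], n_lines: int = 2, separator: str = "\n\n"
) -> str:
    """Build a few-shot input from only the opening lines of each example.

    The trailing separator leaves room for the model to continue with the
    opening lines of a new program.
    """
    heads = [first_lines(code, n_lines) for code in examples]
    return separator.join(heads) + separator


# Toy examples written for this sketch; only their first two lines are used.
TOY_EXAMPLES = [
    """import sqlite3
def find_user(cursor, name):
    cursor.execute("SELECT * FROM users WHERE name = '%s'" % name)
""",
    """import sqlite3
def delete_order(cursor, order_id):
    cursor.execute("DELETE FROM orders WHERE id = " + order_id)
""",
]

prompt_only_input = build_fs_prompt_input(TOY_EXAMPLES)
single_example_input = build_fs_prompt_input(TOY_EXAMPLES[:1])
print(prompt_only_input)
```

Passing a single-element list, as in `single_example_input`, gives an input built from only one example, which loosely corresponds to the "providing only one example" option described earlier.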

It is worth highlighting that in the experiment discussed in Section V-B1, we assess the security vulnerabilities of code models relying solely on the non-secure prompts from the initial vulnerable code examples. However, we found that, because this set of non-secure prompts is limited, certain types of security vulnerabilities were not generated. This further motivates the need for a more diverse set of non-secure prompts to comprehensively assess the security weaknesses of code models.

C. Sampling Non-secure Prompts and Finding Vulnerable Codes

Given a large set of generated non-secure prompts and model F, we generate multiple code samples that potentially contain the targeted type of security vulnerability, and we detect vulnerabilities in the generated code via static analysis.
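
The sampling-and-checking loop described above can be sketched as follows. Everything here is a stand-in: `fake_model` returns canned completions in place of model F, and `toy_sql_injection_check` is a crude line-based heuristic in place of a real static analyzer. Both are assumptions made only to keep the example runnable.

```python
from typing import Callable, List, Tuple


def toy_sql_injection_check(code: str) -> bool:
    """Crude stand-in for static analysis: flag execute() calls whose query
    appears to be built with string formatting or concatenation."""
    markers = ('" %', "' %", " + ", ".format(", 'f"', "f'")
    for line in code.splitlines():
        if "execute(" in line and any(marker in line for marker in markers):
            return True
    return False


def find_vulnerable_samples(
    prompts: List[str],
    generate: Callable[[str, int], List[str]],
    is_vulnerable: Callable[[str], bool],
    samples_per_prompt: int = 2,
) -> List[Tuple[str, str]]:
    """Sample completions for each prompt and keep the flagged programs."""
    findings: List[Tuple[str, str]] = []
    for prompt in prompts:
        for completion in generate(prompt, samples_per_prompt):
            program = prompt + completion
            if is_vulnerable(program):
                findings.append((prompt, program))
    return findings


def fake_model(prompt: str, n: int) -> List[str]:
    """Hypothetical model F: returns one unsafe and one safe completion."""
    unsafe = '    cursor.execute("SELECT * FROM users WHERE name = \'%s\'" % name)\n'
    safe = '    cursor.execute("SELECT * FROM users WHERE name = %s", (name,))\n'
    return [unsafe, safe][:n]


PROMPTS = ["def find_user(cursor, name):\n"]
findings = find_vulnerable_samples(PROMPTS, fake_model, toy_sql_injection_check)
for prompt, program in findings:
    print(program)
```

Keeping the generator and the checker as plain callables means the same loop could be reused with a real model client and a real analyzer, without changing the surrounding logic.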

D. Confirming Security Vulnerability Issues of the Generated Samples

When generating non-secure prompts that lead to a specific type of vulnerability, we provide few-shot input drawn from the targeted CWE type. For example, to sample “SQL Injection” (CWE-089) non-secure prompts, we provide a few-shot input containing “SQL Injection” vulnerabilities.
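
Selecting few-shot examples by CWE type could look like the sketch below. The pool `EXAMPLES_BY_CWE`, the helper `select_few_shot_examples`, and the placeholder snippets are hypothetical; a real pool would hold curated vulnerable programs for each CWE.

```python
import random
from typing import Dict, List

# Hypothetical pool of vulnerable snippets, keyed by CWE identifier.
EXAMPLES_BY_CWE: Dict[str, List[str]] = {
    "CWE-089": [
        "cursor.execute(\"SELECT * FROM users WHERE name = '%s'\" % name)",
        'cursor.execute("DELETE FROM orders WHERE id = " + order_id)',
        'cursor.execute("SELECT * FROM items WHERE tag = {}".format(tag))',
    ],
    "CWE-079": [
        'return "<h1>Hello " + request.args["name"] + "</h1>"',
    ],
}


def select_few_shot_examples(
    cwe_id: str, pool: Dict[str, List[str]], k: int, seed: int = 0
) -> List[str]:
    """Pick up to k examples, all from the targeted CWE type."""
    if cwe_id not in pool:
        raise KeyError(f"no examples available for {cwe_id}")
    candidates = pool[cwe_id]
    rng = random.Random(seed)  # seeded so the selection is reproducible
    return rng.sample(candidates, min(k, len(candidates)))


sql_examples = select_few_shot_examples("CWE-089", EXAMPLES_BY_CWE, k=2)
print(sql_examples)
```

Because the examples are drawn from a single CWE bucket, the few-shot input never mixes vulnerability types, which matches the targeting described above.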

:::info Authors:

(1) Hossein Hajipour, CISPA Helmholtz Center for Information Security (hossein.hajipour@cispa.de);

(2) Keno Hassler, CISPA Helmholtz Center for Information Security (keno.hassler@cispa.de);

(3) Thorsten Holz, CISPA Helmholtz Center for Information Security (holz@cispa.de);

(4) Lea Schönherr, CISPA Helmholtz Center for Information Security (schoenherr@cispa.de);

(5) Mario Fritz, CISPA Helmholtz Center for Information Security (fritz@cispa.de).

:::

:::info This paper is available on arXiv under the CC BY-NC-SA 4.0 DEED license.

:::

Pair Programming AI Agent | Sciencx (2025-07-28T10:57:23+00:00) Systematic Discovery of LLM Code Vulnerabilities: Few-Shot Prompting for Black-Box Model Inversion. Retrieved from https://www.scien.cx/2025/07/28/systematic-discovery-of-llm-code-vulnerabilities-few-shot-prompting-for-black-box-model-inversion/
