This content originally appeared on HackerNoon and was authored by Supriya Lal

From the earliest days of mainframe computing through the rise of client-server models, service-oriented and microservices architectures, and today’s AI-driven systems, software architecture has continually evolved to meet the ever-growing demands of technological advancement. However, supporting these advancements has led to increasingly complex and distributed systems, requiring the continuous expansion of the underlying infrastructure. More energy is required not only to operate that infrastructure but also to support the rising computational workloads it must handle. This article explores how the evolution of software architecture is contributing to increasing energy consumption.

Evolution

1960-70s: Monolithic Architecture

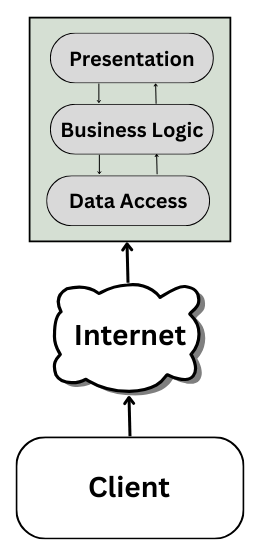

1980s: Client-Server & Layered Architecture

This architectural shift introduced a new layer of infrastructure, the network, which brought additional energy demands for data transmission and system operation. In 1999, Huber and Mills claimed that 8% of U.S. electricity was being consumed by the Internet and Internet-related devices. Although this figure has since been widely refuted, with more accurate estimates placing it closer to 1%, the article raised an important point: network communication inherently requires additional energy. For example, it was estimated that creating, packaging, storing, and transmitting just 2 megabytes of data could consume the energy equivalent of burning one pound of coal.

The need for network communication introduced new sources of energy consumption. Data had to be transferred over the network between client and server, which was less energy-efficient than accessing local data within a mainframe. Network connectivity required extra power and CPU resources for components like network interface cards, transceivers, and protocol stacks (e.g., TCP/IP) to manage data transmission.

However, research on the energy implications of transitioning to layered client-server architectures remains limited. Drawing definitive conclusions about the overall energy impact is challenging due to the complex interplay of factors, including increased modularity, expanding infrastructure, and technological advancements aimed at reducing hardware energy consumption.

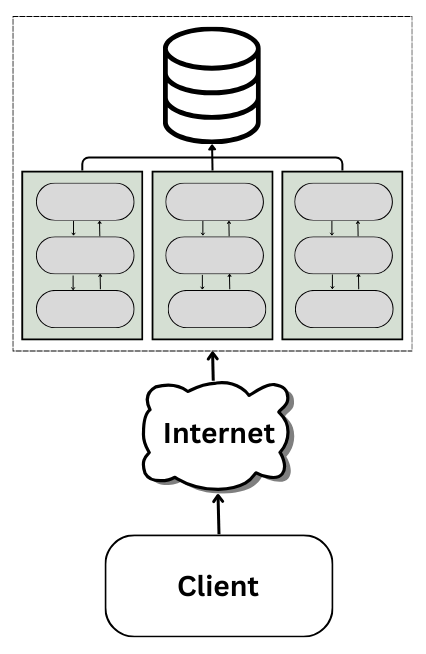

Late 1990s–2000s: Service-Oriented Architecture

This shift introduced new infrastructure requirements to support inter-service communication, including service registries for discovery, messaging protocols (such as SOAP or REST), serialization and deserialization mechanisms (e.g., XML or JSON), and load balancers to manage traffic across services. A study conducted by Freie Universität Berlin in 2003 highlighted the challenges of this transition, noting that Web services significantly increased network traffic and imposed additional processing overhead on servers due to the need to parse serialized data formats such as XML. These operations consumed more CPU cycles and network bandwidth than traditional in-process function calls, ultimately contributing to higher energy consumption.
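The marshalling overhead described above can be illustrated with a minimal sketch. It compares a plain in-process function call with the same call wrapped in a JSON serialize/parse round trip, leaving the network out entirely. The function names, the `ORDER` payload, and the choice of JSON rather than XML are assumptions made for illustration; none of this comes from the cited study.

```python
import json
import timeit


def order_total(order):
    """Hypothetical business logic: sum price * quantity over line items."""
    return sum(item["price"] * item["qty"] for item in order["items"])


def call_in_process(order):
    """Plain function call: no marshalling at all."""
    return order_total(order)


def call_with_marshalling(order):
    """Mimic the CPU work of a remote call, minus the network itself."""
    request = json.dumps(order).encode("utf-8")       # caller serializes
    received = json.loads(request.decode("utf-8"))    # service parses
    response = json.dumps({"total": order_total(received)}).encode("utf-8")
    return json.loads(response.decode("utf-8"))["total"]  # caller parses


# Made-up payload: 50 line items with integer prices.
ORDER = {"items": [{"price": 5, "qty": q} for q in range(1, 51)]}


def compare(runs=2000):
    """Return (in_process_seconds, marshalled_seconds) for `runs` calls."""
    direct = timeit.timeit(lambda: call_in_process(ORDER), number=runs)
    marshalled = timeit.timeit(lambda: call_with_marshalling(ORDER), number=runs)
    return direct, marshalled


if __name__ == "__main__":
    direct, marshalled = compare()
    print(f"in-process: {direct:.4f}s  with JSON marshalling: {marshalled:.4f}s")
```

Both paths return the same total; only the amount of work done to get there differs. Wall-clock time is used here as a rough proxy for CPU effort and is not an energy measurement, so the numbers will vary by machine and should be read as directional only.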

2010s: Microservices Architecture

A comparative performance study published in the Journal of Object Technology found that microservices consume approximately 20% more CPU than monolithic systems for the same workload. Another comparison of monolithic and microservice architectures found that the microservice implementation consumed approximately 43.79% more energy per transaction than its monolithic counterpart. This architectural shift introduces significantly more inter-service communication than traditional SOA, along with remote database calls. Whereas a traditional client-server architecture might require only a few network calls to fulfill a request, a microservices-based system can involve hundreds of such calls, resulting in substantially higher network resource consumption.
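To get a feel for what a 43.79% per-transaction overhead means at scale, the arithmetic can be sketched as below. The baseline energy per transaction and the transaction volume are hypothetical inputs, and the sketch assumes the reported percentage applies uniformly to every transaction, which a real workload may not satisfy.

```python
# Relative energy overhead per transaction, as reported in the comparison above.
ENERGY_OVERHEAD = 0.4379

JOULES_PER_KWH = 3_600_000  # 1 kWh = 3.6 million joules


def microservice_joules(monolith_joules_per_tx, overhead=ENERGY_OVERHEAD):
    """Energy per transaction for the microservice variant."""
    return monolith_joules_per_tx * (1 + overhead)


def extra_kwh(monolith_joules_per_tx, transactions, overhead=ENERGY_OVERHEAD):
    """Additional energy, in kWh, over a given number of transactions."""
    extra_joules = monolith_joules_per_tx * overhead * transactions
    return extra_joules / JOULES_PER_KWH


if __name__ == "__main__":
    baseline_joules = 1.0          # hypothetical monolith cost per transaction
    daily_transactions = 10_000_000  # hypothetical daily volume
    print(f"per transaction: {microservice_joules(baseline_joules):.4f} J")
    print(f"extra per day:   {extra_kwh(baseline_joules, daily_transactions):.2f} kWh")
```

With these made-up inputs (1 J per transaction, 10 million transactions a day), the overhead works out to roughly 1.2 kWh of additional energy per day. The absolute figure depends entirely on the assumed baseline; only the 43.79% ratio comes from the text.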

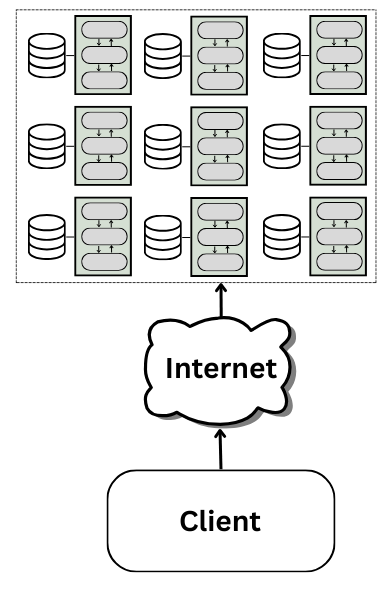

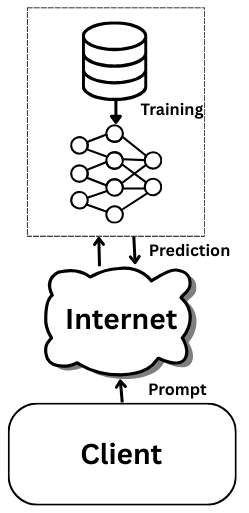

Mid 2020s and Beyond: AI-Driven Architectures

Beyond training, using AI models to produce results (a process known as inference) also requires substantial infrastructure. GPUs, high-speed memory, and advanced networking systems are needed to process new inputs through trained neural networks in real time. This inference stage, especially at scale, continues to drive high energy consumption.

As AI adoption grows, its energy demands are escalating rapidly. Alex de Vries, a Ph.D. candidate at VU Amsterdam, estimated in a 2023 article in Joule that AI systems could eventually consume as much electricity annually as Ireland if current growth trends continue.

Conclusion

As software architecture evolves to support smarter, faster, and more flexible use cases, the underlying infrastructure keeps expanding with it. Each architectural leap has introduced more layers, more services, and more hardware dependencies, and with them a steady rise in energy consumption. To build a sustainable digital future, we must rethink how we design and deploy software. The future of software must be not only intelligent and scalable, but also energy-efficient.

Supriya Lal | Sciencx (2025-07-31T07:38:53+00:00) As Software Scales, So Does Its Energy Appetite. Retrieved from https://www.scien.cx/2025/07/31/as-software-scales-so-does-its-energy-appetite/
