This content originally appeared on HackerNoon and was authored by Hyperbole

Table of Links

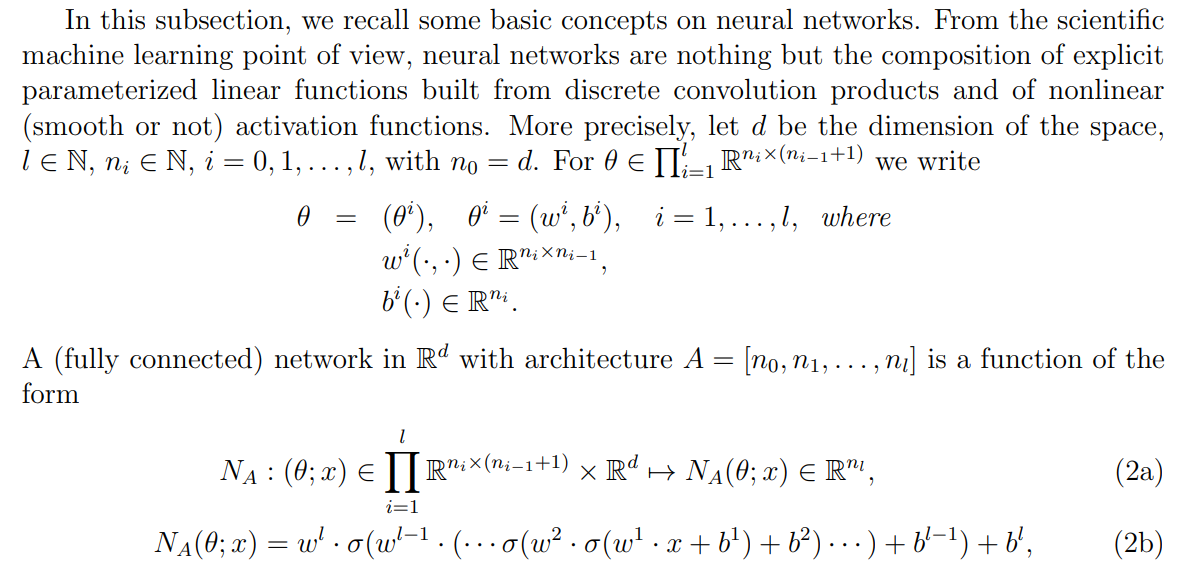

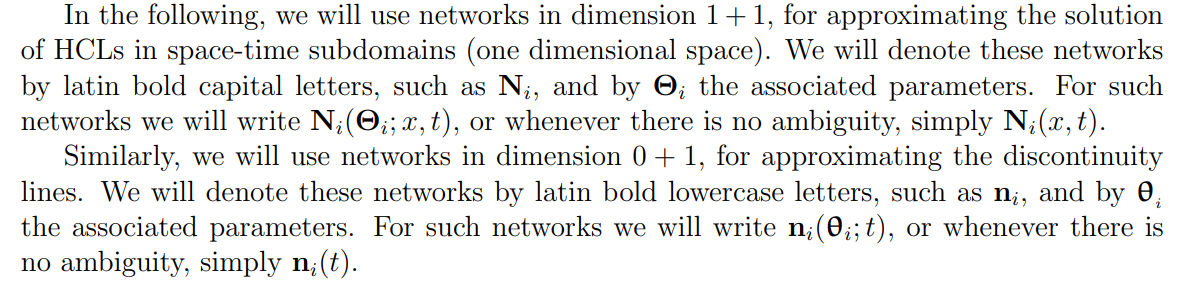

1.2. Basics of neural networks

1.3. About the entropy of direct PINN methods

1.4. Organization of the paper

Non-diffusive neural network solver for one dimensional scalar HCLs

2.2. Arbitrary number of shock waves

2.5. Non-diffusive neural network solver for one dimensional systems of CLs

Gradient descent algorithm and efficient implementation

Numerics

4.1. Practical implementations

4.2. Basic tests and convergence for 1 and 2 shock wave problems

1.2. Basics of neural networks

where σ : R → R is a given function, called the activation function, which acts component-wise on any vector or matrix. It is clear from this exposition that the network N_A is defined uniquely by the architecture A. In all the networks we consider, the architecture A is fixed, so we omit the letter A from N_A.
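As a rough illustration of a network of this kind, the sketch below evaluates a small fully connected network in plain Python. The layer widths, the choice σ = tanh, the layout (affine map followed by the activation, with a linear output layer), and the names `init_params`, `affine` and `network` are assumptions made for this example, not details taken from the paper.

```python
import math
import random

def init_params(widths, seed=0):
    """Hypothetical helper: random weights and zero biases for the given layer widths."""
    rng = random.Random(seed)
    params = []
    for n_in, n_out in zip(widths[:-1], widths[1:]):
        W = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        b = [0.0] * n_out
        params.append((W, b))
    return params

def affine(W, b, v):
    """Compute W v + b for a list-of-rows matrix W and vectors b, v."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]

def network(params, x, sigma=math.tanh):
    """Evaluate the network at input x; sigma is applied component-wise."""
    v = list(x)
    for W, b in params[:-1]:
        v = [sigma(z) for z in affine(W, b, v)]  # hidden layer: activation after affine map
    W, b = params[-1]
    return affine(W, b, v)                       # output layer kept linear (an assumption)

widths = [2, 8, 8, 1]        # assumed architecture: input (x, t), two hidden layers, scalar output
theta = init_params(widths)  # all parameters of the network
y = network(theta, [0.3, 0.1])
```

Once the widths are fixed, the only free quantities are the entries of `theta`, which is why the architecture label can be dropped from the notation.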

These network functions, which will be used to approximate solutions to HCLs, can be differentiated automatically with respect to the input x and the parameters θ. This feature allows (1) to be evaluated without error. Naturally, the computed solutions are restricted to the function space spanned by the neural networks.
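To make the role of automatic differentiation concrete, here is a minimal sketch based on dual numbers (forward-mode differentiation) applied to a one-neuron network. This section of the paper does not say which differentiation tooling is used; forward mode is chosen here only because it fits in a few lines, and the names `Dual`, `net`, `theta` and `x0` are hypothetical.

```python
import math

class Dual:
    """Number val + dot*eps with eps**2 = 0; `dot` carries the derivative."""
    def __init__(self, val, dot=0.0):
        self.val, self.dot = val, dot

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.val + other.val, self.dot + other.dot)
    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # product rule
        return Dual(self.val * other.val, self.dot * other.val + self.val * other.dot)
    __rmul__ = __mul__

def tanh(z):
    """tanh on dual numbers, using d/dz tanh(z) = 1 - tanh(z)**2."""
    t = math.tanh(z.val)
    return Dual(t, (1.0 - t * t) * z.dot)

def net(x, theta):
    """Scalar toy network N(x; theta) = w2 * tanh(w1 * x + b1) + b2."""
    w1, b1, w2, b2 = theta
    return w2 * tanh(w1 * x + b1) + b2

theta = (1.5, -0.2, 0.7, 0.1)  # assumed parameter values
x0 = 0.4                       # assumed evaluation point

# Derivative with respect to x: seed the input with dot = 1.
dN_dx = net(Dual(x0, 1.0), tuple(Dual(p) for p in theta)).dot

# Derivative with respect to the parameter w1: seed w1 instead.
seeded = (Dual(theta[0], 1.0),) + tuple(Dual(p) for p in theta[1:])
dN_dw1 = net(Dual(x0), seeded).dot
```

Both derivatives agree with the closed-form expressions up to floating-point rounding; no finite-difference step is involved, so there is no truncation error.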


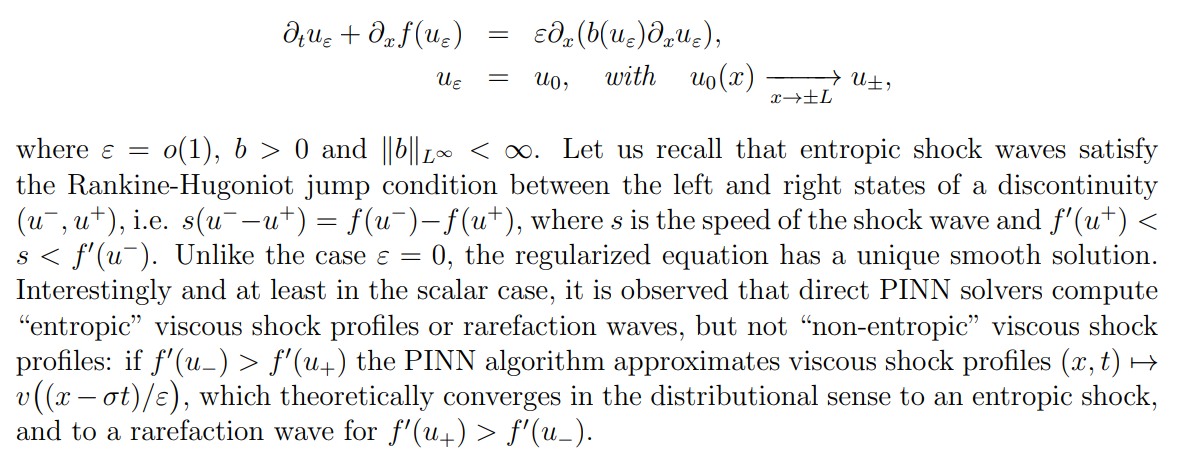

1.3. About the entropy of direct PINN methods

We finish this introduction with a short discussion of the entropy of PINN solvers for HCLs. Because direct PINN solvers cannot capture shock waves (discontinuous weak solutions) directly, artificial diffusion is usually added to the HCL, and uε is sought as the solution to the resulting regularized (viscous) equation.

While Experiment 6 illustrates this property of the PINN method, this paper proposes a totally different strategy, which does not require the addition of any artificial viscosity and computes entropic shock waves without diffusion.
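To see what artificial diffusion does to a shock, the sketch below evaluates the classical travelling-wave solution of the viscous Burgers equation u_t + (u²/2)_x = ε u_xx. The Burgers flux, the left and right states, the 99% width criterion, and the function names are assumptions chosen for illustration; this section of the paper does not fix a particular flux.

```python
import math

def viscous_profile(xi, u_left, u_right, eps):
    """Travelling wave of u_t + (u^2/2)_x = eps * u_xx, with xi = x - s*t.

    Requires u_left > u_right (entropic shock for this convex flux).
    """
    s = 0.5 * (u_left + u_right)  # shock speed (Rankine-Hugoniot for Burgers)
    a = 0.5 * (u_left - u_right)  # half of the jump
    return s - a * math.tanh(a * xi / (2.0 * eps))

def layer_width(u_left, u_right, eps, frac=0.99):
    """Width of the interval around xi = 0 on which the profile covers `frac` of the jump."""
    a = 0.5 * (u_left - u_right)
    return 2.0 * (2.0 * eps / a) * math.atanh(frac)

u_left, u_right = 1.0, 0.0
epsilons = [1e-1, 1e-2, 1e-3]
widths = [layer_width(u_left, u_right, eps) for eps in epsilons]

for eps, w in zip(epsilons, widths):
    print(f"eps = {eps:g}: smeared layer width = {w:.4g}")
```

The layer width shrinks linearly with ε, so the discontinuity is only recovered in the limit ε → 0; for any fixed ε > 0 the computed profile is a smeared version of the shock.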

1.4. Organization of the paper

This paper is organized as follows. Section 2 is devoted to the derivation of the basics of the non-diffusive neural network (NDNN) method. Different situations are analyzed including multiple shock waves, shock wave interaction, shock wave generation, and the extension to systems. In Section 3, we discuss the efficient implementation of the derived algorithm.

Section 4 presents several numerical experiments that illustrate the convergence and accuracy of the proposed algorithms. We conclude in Section 5.

:::info Authors:

(1) Emmanuel LORIN, School of Mathematics and Statistics, Carleton University, Ottawa, Canada, K1S 5B6 and Centre de Recherches Mathématiques, Université de Montréal, Montreal, Canada, H3T 1J4 (elorin@math.carleton.ca);

(2) Arian NOVRUZI, corresponding author, Department of Mathematics and Statistics, University of Ottawa, Ottawa, ON K1N 6N5, Canada (novruzi@uottawa.ca).

:::

:::info This paper is available on arXiv under the CC BY 4.0 Deed (Attribution 4.0 International) license.

:::

Hyperbole | Sciencx (2025-09-19T10:38:18+00:00) Can Neural Networks Capture Shock Waves Without Diffusion? This Paper Says Yes. Retrieved from https://www.scien.cx/2025/09/19/can-neural-networks-capture-shock-waves-without-diffusion-this-paper-says-yes/
