This content originally appeared on HackerNoon and was authored by Maria Piterberg


A Personal Journey Through Childhood Drawing, Imagination, and the Magic of Generative AI

TL;DR: What if AI could do more than generate images - what if it could imagine? In this personal exploration, I used the generative AI model DALL-E to transform rough, childlike sketches - including one drawn by a real child - into vivid, detailed artworks. Along the way, I discovered that AI isn’t just mimicking human creativity; it can sometimes amplify it.

From pattern recognition to unexpected “hallucinations” that feel more like imagination, this journey reveals how generative AI might just become the most surprising creative partner of all - especially for those still learning to draw.

Where does imagination begin - and can a machine ever truly share in it?

Children don’t draw to impress; they draw to express. Their sketches are raw, impulsive, and often indecipherable to adults - yet somehow bursting with meaning.

What if generative AI, a tool built on logic and data, could enter that fragile world of crayon lines and unspoken dreams? What if it could not only recognize what was drawn, but also intuit what was meant?

Why Do Kids Love to Draw?

A whole lot of kids love drawing. It’s intuitive, creative, and fun. But here’s the catch: most children crave instant gratification and praise for their efforts.

Try explaining to a toddler that drawing a flower the way they imagine it requires years of skill-building. Not easy.

Some children are perfectly content with their abstract creations - their imaginations fill in the gaps. To adult eyes, it’s often just a jumble of lines, but to them, it’s a rocket ship, a princess, or a unicorn in disguise.

Others, however, can feel discouraged when their drawings don’t match the image in their mind. Just like adults, children hold themselves to different standards - and some are harsher critics than we’d expect.

Drawing Is a Superpower for Child Development

The benefits of drawing for children are widely recognized - and scientifically supported.

Take, for example, the work of Dr. Richard Jolley and Dr. Sarah Rose, child development experts from Staffordshire University. Their research spans cognitive, aesthetic, educational, and cross-cultural perspectives. In one of their published articles, they write:

“Drawing can help children learn. Research shows that using drawing as a teaching activity can increase children’s understanding in other areas, such as science.”

“Drawing may also help improve children’s memory. Research has found that children give more information about a previously experienced event when they are asked to draw about it while talking about it.”

And these findings are far from isolated. As I explored this topic further, I discovered a wealth of similar studies confirming that drawing enhances memory, comprehension, focus, and emotional expression in children.

A Lightbulb Moment

These insights sparked an idea, and a single, powerful question emerged:

If drawing is both enjoyable and beneficial - but often frustrating for kids - can I make it more magical? Could I motivate children to draw more often and with greater satisfaction?

What if I could help my child become an artist instantly?

It almost sounds too good to be true. But the answer is yes - it can be done.

Enter Generative AI: Magic With a Sketch

With my background in generative AI tools like DALL-E, I realized something almost instantly:

The model should be able to translate a simple sketch into a high-quality, realistic image - if guided with the right instructions.

Why? Because these models were trained on vast datasets of images paired with text descriptions, which taught them how humans perceive shapes and assign meaning to them.

The only thing missing was the correct instruction (i.e., the right prompt).

My First Experiment - A Sunny Day

To put my idea to the test, I created a quick sketch on my iPad - though traditional pencil and paper would have worked just as well. I deliberately kept it simple, yet included multiple distinct elements to challenge the model’s interpretive ability.

The sketch was intentionally rough, unpolished, and colorless - very much in the spirit of a child’s drawing. Although I drew this version digitally, it mimicked the kind of spontaneous, imaginative output you might see from a young child.

To guide the model, I used a single prompt - one that would remain consistent throughout the entire experiment:

“Generate a realistic image based on this sketch, placing each element exactly where it appears in the original drawing.”

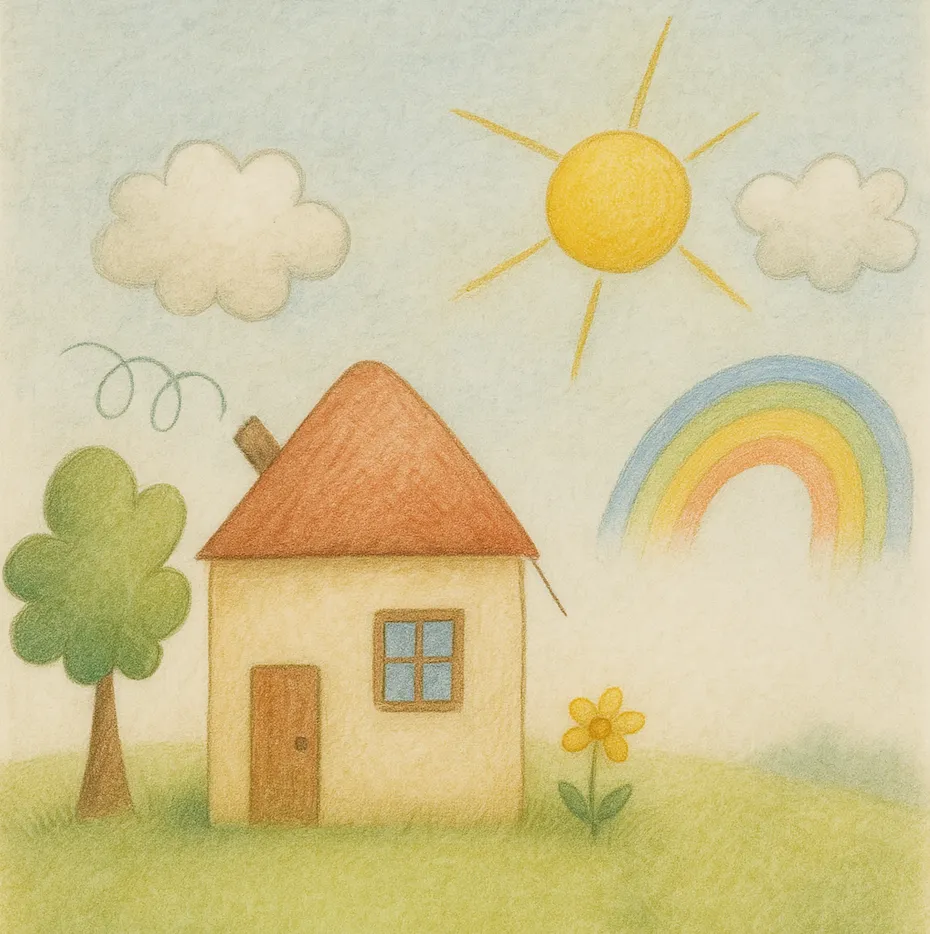

The results were nothing short of astonishing.

More Than a Copy

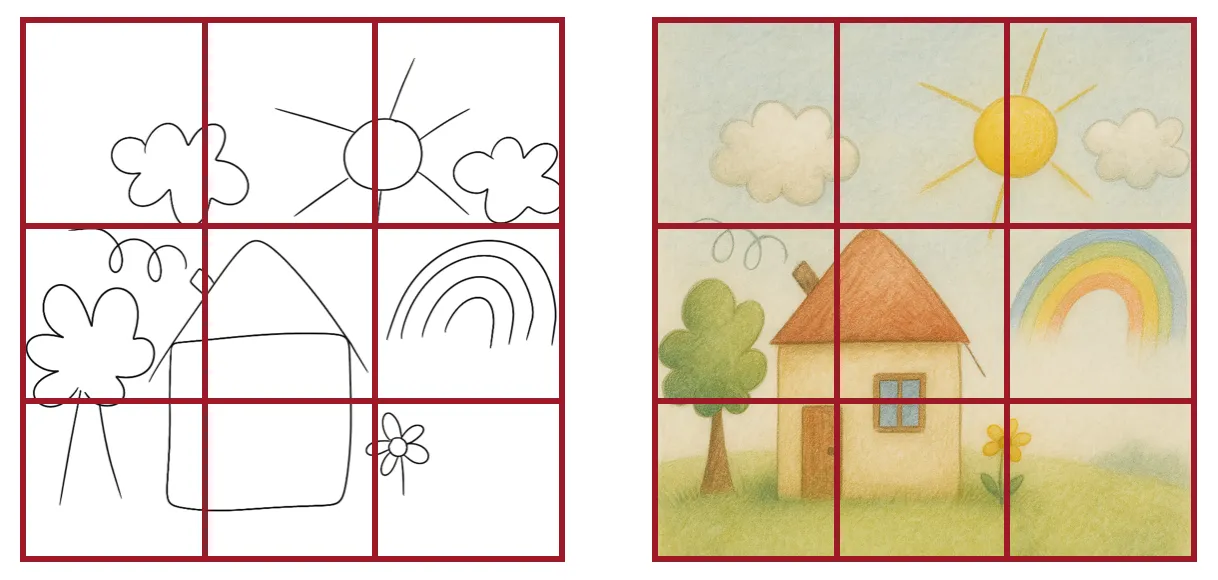

Let’s take a closer look at the result to understand what makes it so remarkable.

Every element from the original sketch is not only present in the generated image but also positioned with impressive spatial accuracy, just as requested. The model didn’t just recognize the components; it respected their placement and size, preserving the composition almost exactly as drawn.

To better illustrate this, I overlaid a grid that highlights the alignment between the sketch and the final output.
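The article doesn't say how the grid overlay was produced, so what follows is only a minimal Python sketch of one way such a placement check could be automated. It assumes each element's bounding box has been marked by hand in both the sketch and the generated image; the `Box` type, the `grid_cell` and `alignment_report` helpers, the 3×3 grid, and every coordinate below are hypothetical illustrations, not measurements from the author's images.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Hypothetical hand-marked bounding box in pixels: (left, top, right, bottom)."""
    left: float
    top: float
    right: float
    bottom: float

    def center(self) -> tuple[float, float]:
        return ((self.left + self.right) / 2, (self.top + self.bottom) / 2)


def grid_cell(box: Box, width: float, height: float,
              rows: int = 3, cols: int = 3) -> tuple[int, int]:
    """Return the (row, col) grid cell that contains the box's center."""
    cx, cy = box.center()
    # Normalize by image size so a sketch and an output of different
    # resolutions map onto the same grid; clamp so a center lying exactly
    # on the right/bottom edge still falls inside the last cell.
    col = min(int(cx / width * cols), cols - 1)
    row = min(int(cy / height * rows), rows - 1)
    return row, col


def alignment_report(sketch: dict[str, Box], output: dict[str, Box],
                     sketch_size: tuple[float, float],
                     output_size: tuple[float, float],
                     rows: int = 3, cols: int = 3) -> dict[str, bool | None]:
    """For each sketched element: True if it lands in the same grid cell in
    the output, False if it moved, None if it is missing from the output."""
    report: dict[str, bool | None] = {}
    for name, s_box in sketch.items():
        o_box = output.get(name)
        if o_box is None:
            report[name] = None
            continue
        s_cell = grid_cell(s_box, *sketch_size, rows, cols)
        o_cell = grid_cell(o_box, *output_size, rows, cols)
        report[name] = s_cell == o_cell
    return report


# Hypothetical coordinates, for illustration only.
sketch = {
    "sun": Box(20, 20, 120, 120),
    "house": Box(280, 250, 480, 450),
    "cloud": Box(400, 30, 560, 110),
}
output = {
    "sun": Box(30, 40, 200, 210),
    "house": Box(480, 420, 800, 760),
    "cloud": Box(60, 50, 260, 150),
}
print(alignment_report(sketch, output, (600, 600), (1024, 1024)))
# {'sun': True, 'house': True, 'cloud': False}
```

Comparing grid cells rather than raw pixel positions is what lets a check like this tolerate different image sizes: a 600×600 sketch and a 1024×1024 output are both mapped onto the same 3×3 grid before comparison. The mismatched `cloud` entry exists only to show what a failed check looks like.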

But let’s go even deeper - because DALL-E didn’t just follow instructions, it enhanced them.

The house now features a window and a door - details I left out, yet perfectly natural additions. The model intuitively understood what a “house” should include and filled in the blanks.

It also introduced a blue sky and green grass - classic, logical choices. In fact, all the colors make perfect sense: the sun is yellow, the clouds are white, and the rainbow is multicolored, just as we’d expect. This isn’t randomness - it’s the result of training on patterns in how humans interpret and expect visual information.

What we ended up with wasn’t just an AI-generated image - it was a beautiful, coherent painting, born from a rough sketch.

For a child, producing a drawing at this level would be incredibly difficult. And yet here it is - vivid, complete, and yes, DALL-E even manages to stay within the lines.

The Science Behind the Magic

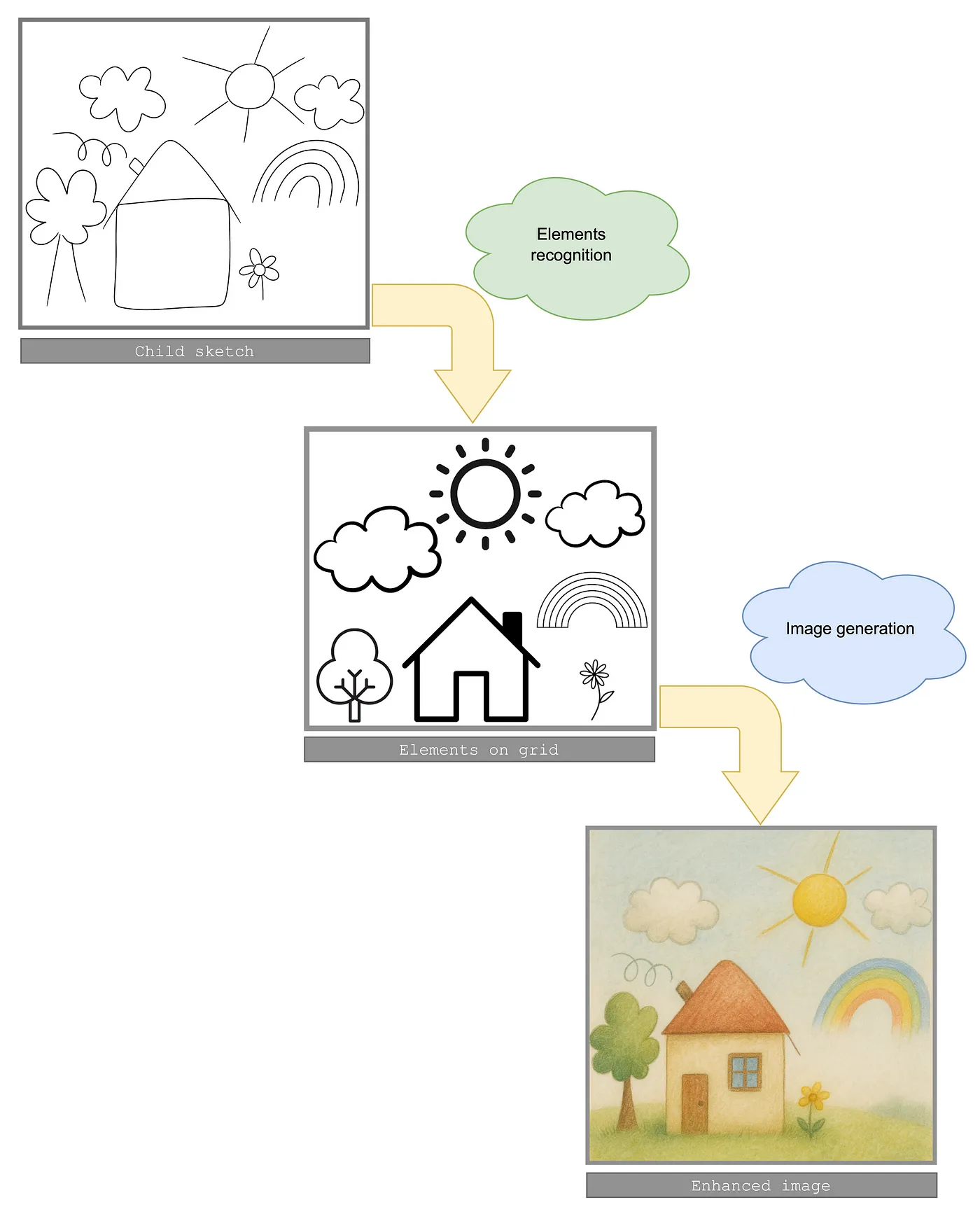

DALL-E is able to generate a realistic image from a rough sketch because it has been trained on vast datasets containing millions of images and their associated textual descriptions.

Through this training, the model has learned to recognize patterns - not just in how objects look, but also in how they’re typically arranged and described by humans.

So, when it’s given a sketch, even a crude one, DALL-E can infer what the shapes represent (a square with a triangle on top likely means “house”) and use its learned knowledge to fill in the visual details in a coherent, contextually appropriate way. It doesn’t just copy; it interprets, enhances, and completes the picture based on statistical patterns from human-created content.

(For those curious about how DALL-E 3 operates, I go deeper into the mechanics of model training in my previous article.)

Let’s illustrate the flow in a generative AI model’s “mind” to explain the process even further.

At this point, I was genuinely excited - and eager to push the model even further.

My Second Experiment - Frosty the Snowman

The next sketch was slightly more complex, as it introduced a subtle contextual clue: a snowman. This single element suggests a specific season - winter - which adds an extra layer of interpretation for the model.

The result? It did not disappoint.

DALL-E was able to infer the time of year from a single visual clue - the snowman - and responded accordingly by adding snow to the scene. It’s a logical and contextually accurate choice, given that snow is a fundamental requirement for building a snowman.

Just like in the earlier sketch, the model generated a house complete with a door, a window, and a working chimney. The snowman was brought to life with two stick arms, a scarf, a hat, and a carrot nose. A pine tree, naturally dusted with snow, was also included - another thoughtful and fitting addition.

Even finer details were respected: the image contained the exact number of clouds from the original sketch.

For those paying close attention, there was one unexpected addition - a flower. Interestingly, this flower closely resembled one from our previous creation, albeit red instead of yellow. While it wasn’t part of the current sketch, it wasn’t a random error either. It suggests a kind of “memory leak” from the earlier image in the same chat - an intriguing quirk of the model’s behavior.

Still, in the grand scheme of things, this was a minor flaw (or was it?) in an otherwise impressively accurate and imaginative output.

A question quickly emerged: What if I had generated the second image in a new DALL-E chat window - effectively resetting the context, the way our minds naturally do when switching focus?

My Third Experiment - The Ultimate Challenge

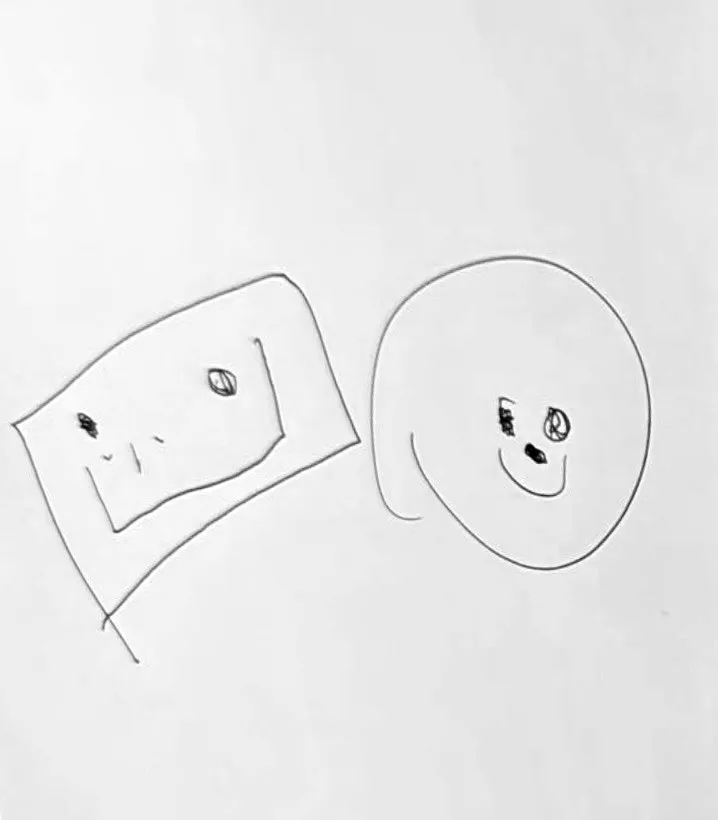

Now, it was time for the ultimate challenge: using a real-life child’s drawing.

Thankfully, my best friend’s daughter, Naomi, was happy to help - and quickly produced the following sketch at my request.

I’ll admit, I was a bit concerned. The drawing was abstract and open-ended, with little in the way of concrete shapes or conventional forms. It came entirely from Naomi’s imagination - these characters don’t exist in the real world, so the model couldn’t rely on familiar patterns it had seen in training. This was uncharted territory.

Still, I knew this was the real test. If DALL-E could interpret Naomi’s creation and bring her imagined characters to life, it would be nothing short of magical - a moment where technology truly meets childhood creativity. It would be, in every sense, a dream come true.

The result from DALL-E exceeded anything I could have imagined.

The model not only replicated the shapes and facial features from Naomi’s sketch - it transformed vague, abstract lines into vivid, expressive characters. It created something from nothing.

This was true creation: a visual manifestation of an idea that had previously existed only in Naomi’s imagination. These characters had no reference, no precedent - just the spark of a child’s creativity, now brought to life by AI.

In the world of generative AI, when a model invents something that wasn’t explicitly provided, we often call it a “hallucination.” The term carries a negative connotation - implying error, misjudgment, or deviation from the user’s intent.

Take, for example, the unexpected flower that appeared in our snowman scene. Technically, that could be classified as a mild hallucination: a small, unrequested detail that slipped in from a prior context.

But with Naomi’s drawing, DALL-E hallucinated its way to something truly remarkable - art that was both original and expressive. It wasn’t copying, it wasn’t referencing; it was imagining.

This raises a deeper question: in the right context, could what we call a hallucination actually be something more human - like imagination?

A Final Reflection

What started as a simple test became something deeply moving: a moment where technology stepped into a child’s imagination - not to replace it, but to honor it.

Watching Naomi’s rough lines transform into living, breathing characters was more than just impressive - it felt magical.

In a world where children are still learning how to express what they see and feel, AI can serve as a bridge between imagination and reality, giving shape to dreams too big for small hands to draw alone. And maybe that’s the most beautiful part - realizing that when used with care, AI doesn’t dull human creativity; it amplifies it, one sketch at a time.

About me

I am Maria Piterberg - an AI expert leading the Runtime software team at Habana Labs (Intel) and a semi-professional artist working across traditional and digital mediums. I specialize in large-scale AI training systems, including communication libraries (HCCL) and runtime optimization. I hold a Bachelor’s degree in Computer Science.


Maria Piterberg | Sciencx (2025-07-03T13:00:18+00:00) I Made Dall-E Transform Children’s Sketches Into Realistic Images. Retrieved from https://www.scien.cx/2025/07/03/i-made-dall-e-transform-childrens-sketches-into-realistic-images/
