This content originally appeared on HackerNoon and was authored by The FewShot Prompting Publication

Table of Links

2 Concepts in Pretraining Data and Quantifying Frequency

3 Comparing Pretraining Frequency & “Zero-Shot” Performance and 3.1 Experimental Setup

3.2 Result: Pretraining Frequency is Predictive of “Zero-Shot” Performance

4.2 Testing Generalization to Purely Synthetic Concept and Data Distributions

5 Additional Insights from Pretraining Concept Frequencies

6 Testing the Tail: Let It Wag!

8 Conclusions and Open Problems, Acknowledgements, and References

Part I

Appendix

A. Concept Frequency is Predictive of Performance Across Prompting Strategies

B. Concept Frequency is Predictive of Performance Across Retrieval Metrics

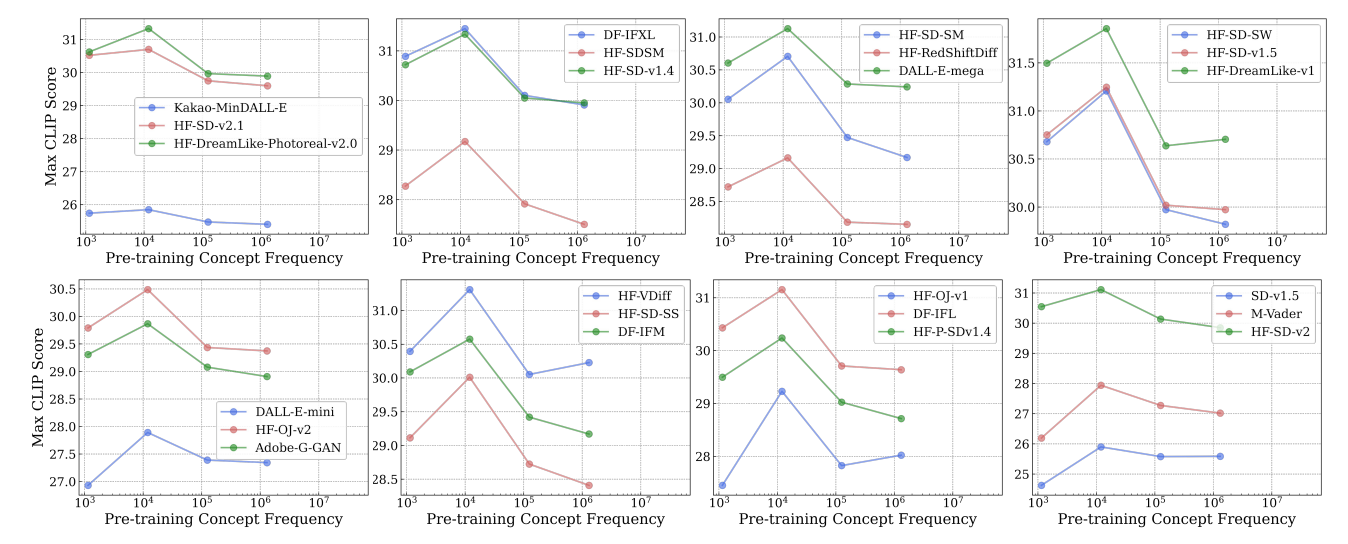

C. Concept Frequency is Predictive of Performance for T2I Models

D. Concept Frequency is Predictive of Performance across Concepts only from Image and Text Domains

F. Why and How Do We Use RAM++?

G. Details about Misalignment Degree Results

I. Classification Results: Let It Wag!

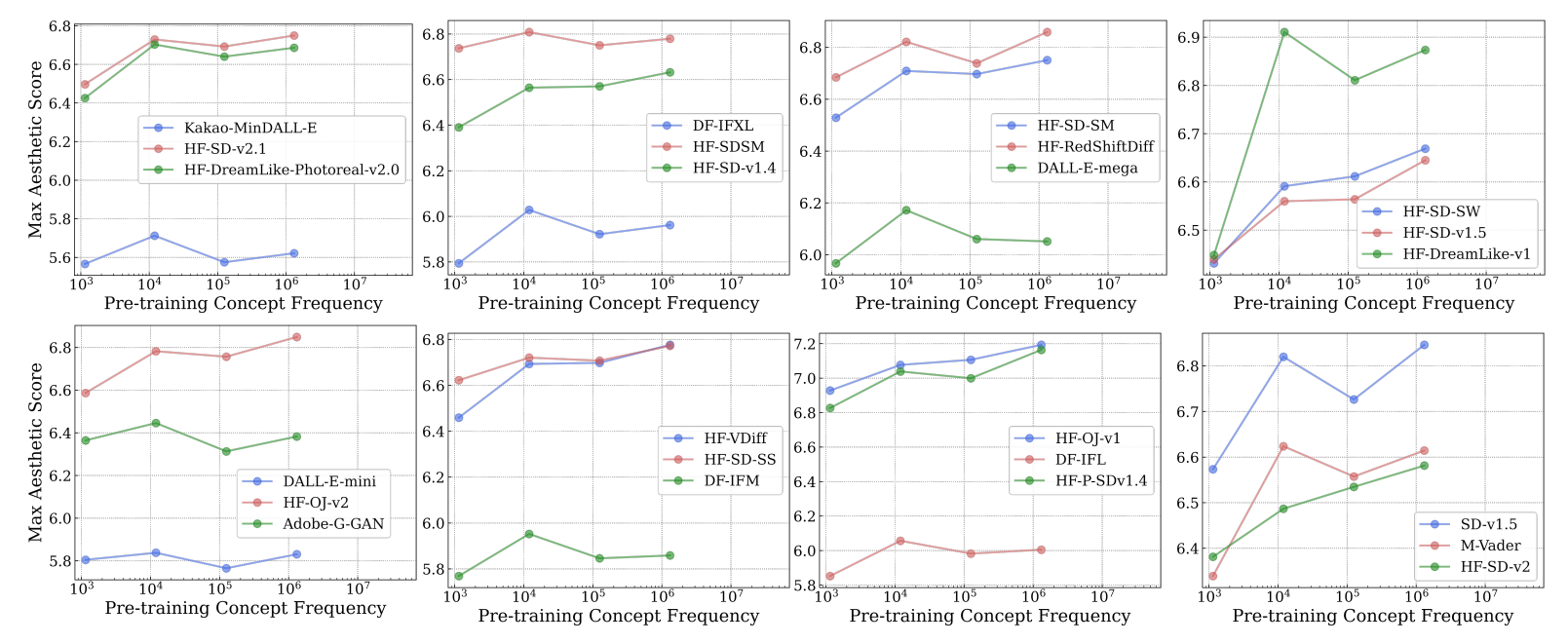

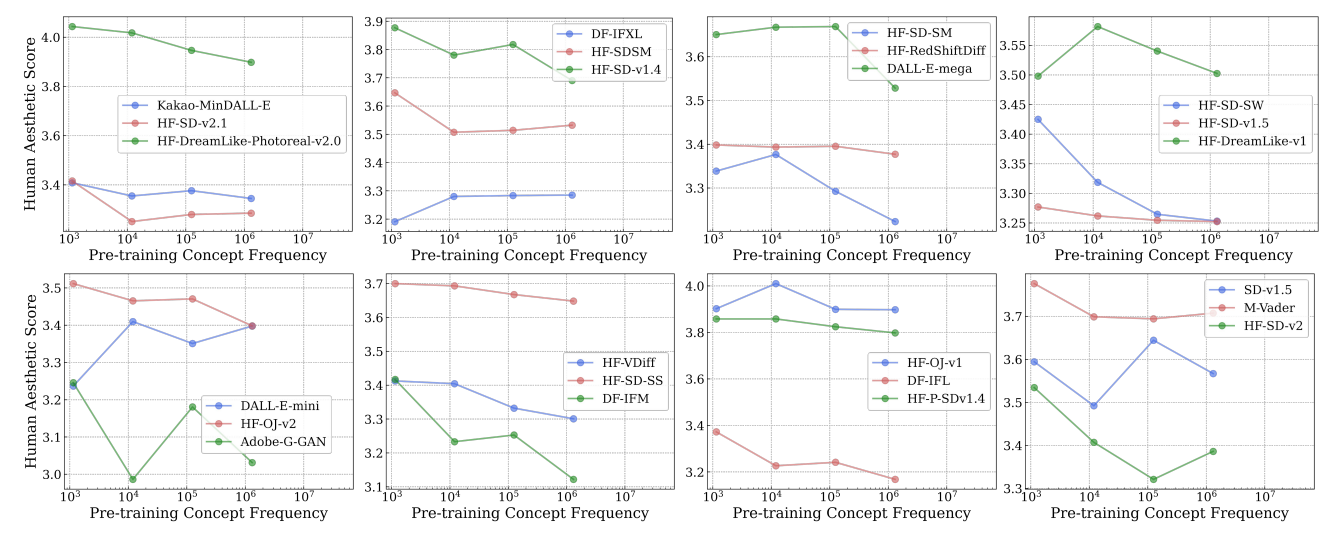

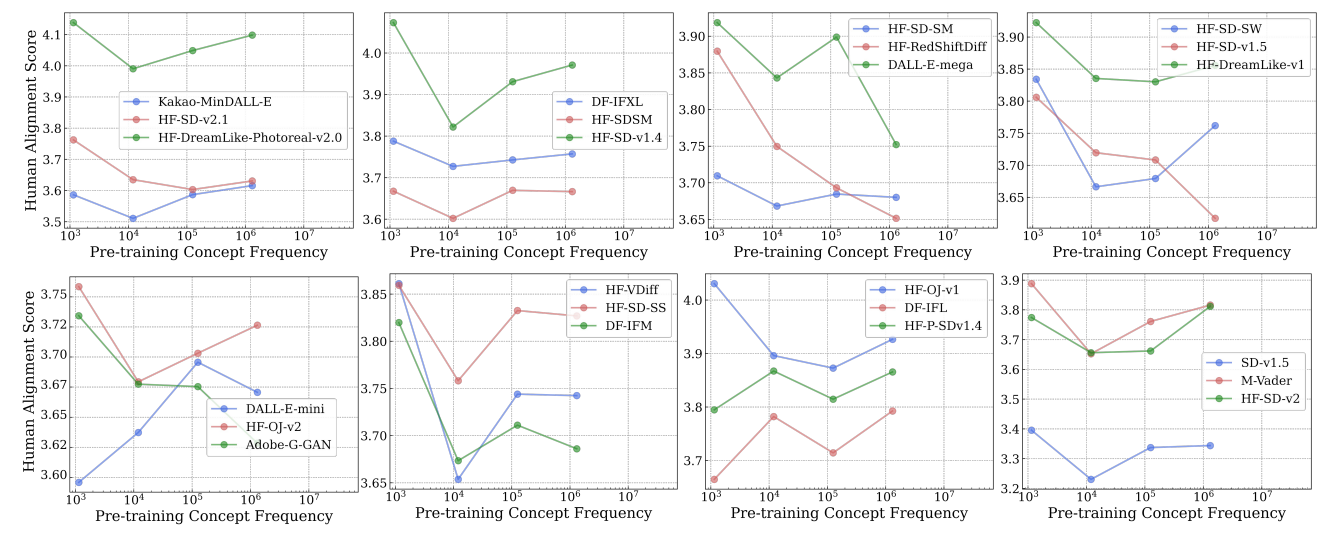

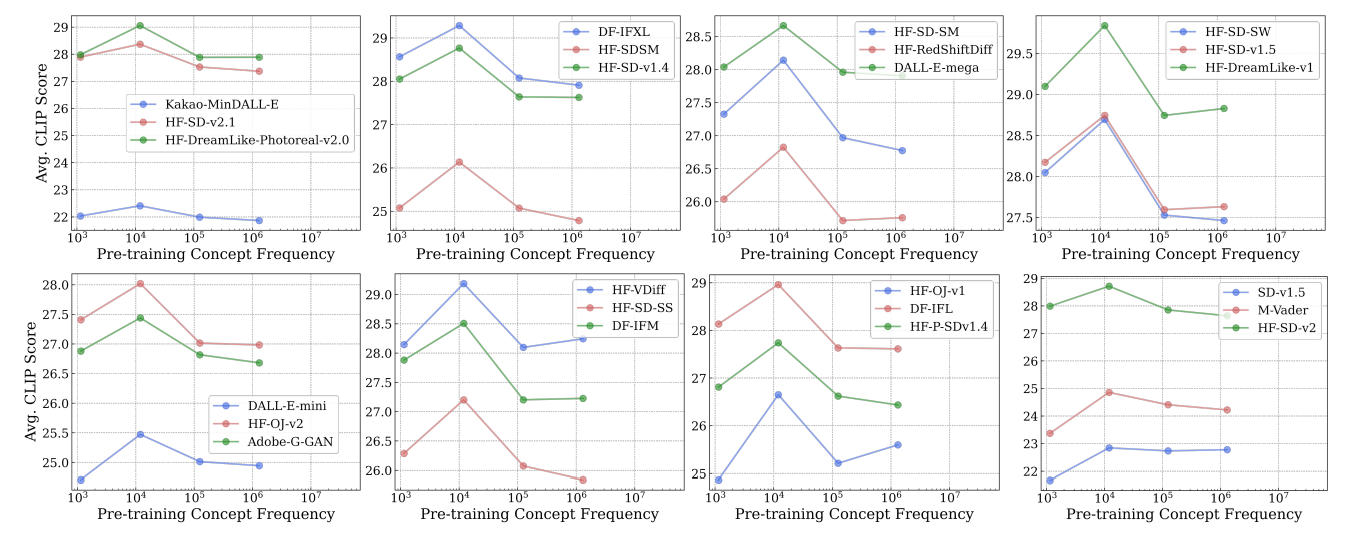

C Concept Frequency is Predictive of Performance for T2I Models

We extend the results from Fig. 3 with Figs. 11 to 15. As with Fig. 3, the scaling trend is weaker due to the high concept frequency. Furthermore, we observe inconsistent trends in the human-rated scores retrieved from HEIM [71], so we perform a small-scale human evaluation to check them.

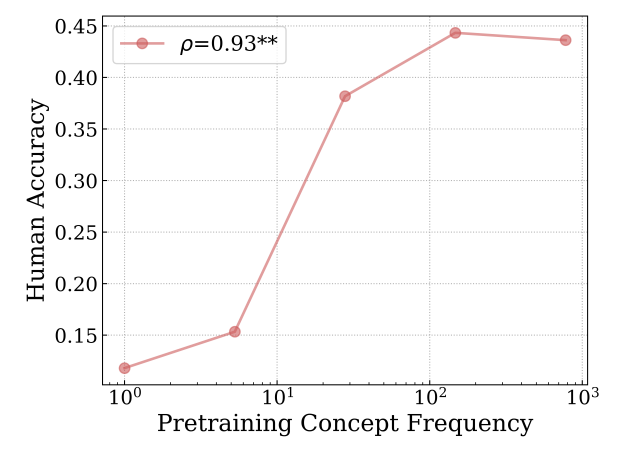

Given the societal relevance [23], we decided to test Stable Diffusion [96] (v1.4) on generating public figures. We scraped 50,000 people from the “20230123-all” Wikidata JSON dump by filtering for entities listed as “human” [8]. Where available, we also scraped a reference image of each person for the human study. After computing concept frequencies from the LAION-Aesthetics text captions (using a suffix array [70]), we found that ≈10,000 of these people were present in the pretraining dataset. To ensure that the people’s names were treated as separate words, we computed frequencies for strings of the format “ {entity} ”, i.e., the name with a space on each side. We then randomly sampled 360 people for whom a reference image was available, with sampling normalized by frequency [22], for the human study. To generate images with Stable Diffusion, we used the prompt “headshot of {entity}” to indicate to the model that “{entity}” refers to the person named “{entity}” [50].

We assessed image-text alignment with a human study involving 6 participants, each of whom was assigned 72 samples. For consistency, we assigned 10% of the 360 total samples to 3 participants each. Given a reference image, participants were asked whether the sample accurately depicts the prompt, with three choices: “Yes” (score = 1), “Somewhat” (score = 0.5), and “No” (score = 0). Accuracy was computed by averaging the scores.
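As a sanity check, the study design and scoring rule can be worked through in a short sketch. Assigning 36 samples (10% of 360) to 3 raters and the remaining 324 to one rater yields 324 + 3 × 36 = 432 ratings, which matches 6 participants × 72 samples. The function names are hypothetical. How ratings of the triply-annotated samples are combined is not specified above, so `accuracy` simply averages whatever list of ratings it receives.

```python
# Score mapping stated in the text above.
SCORES = {"Yes": 1.0, "Somewhat": 0.5, "No": 0.0}


def accuracy(responses: list[str]) -> float:
    # Average of per-rating scores (aggregation across overlapping raters is an assumption).
    return sum(SCORES[r] for r in responses) / len(responses)


def annotation_load(n_samples: int = 360, overlap_frac: float = 0.10,
                    raters_on_overlap: int = 3) -> int:
    # Total number of individual ratings implied by the assignment scheme.
    n_overlap = round(n_samples * overlap_frac)  # 36 samples rated three times
    n_single = n_samples - n_overlap             # 324 samples rated once
    return n_single + raters_on_overlap * n_overlap


if __name__ == "__main__":
    print(annotation_load(), 6 * 72)                      # both 432
    print(accuracy(["Yes", "Somewhat", "No", "Yes"]))     # 0.625
```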

As Fig. 16 shows, we observe a log-linear trend between concept frequency and zero-shot performance, indicating that this relationship consistently holds even for T2I models.
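A log-linear trend means accuracy grows roughly linearly in the logarithm of frequency, $\mathrm{acc}(c) \approx \alpha + \beta \log_{10} f(c)$. A minimal ordinary-least-squares sketch of such a fit follows. The numbers below are invented toy values lying exactly on a line, not results from Fig. 16, and `fit_log_linear` is a hypothetical helper. Frequencies must be positive because the log is undefined at zero.

```python
import math


def fit_log_linear(freqs: list[float], accs: list[float]) -> tuple[float, float]:
    # Ordinary least squares of accuracy on log10(frequency); returns (slope, intercept).
    if any(f <= 0 for f in freqs):
        raise ValueError("frequencies must be positive to take a logarithm")
    xs = [math.log10(f) for f in freqs]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(accs) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, accs))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


if __name__ == "__main__":
    # Toy data: each 10x increase in frequency adds 0.15 to accuracy.
    slope, intercept = fit_log_linear([10, 100, 1000, 10000], [0.25, 0.40, 0.55, 0.70])
    print(round(slope, 3), round(intercept, 3))  # 0.15 0.1
```

The slope $\beta$ is the accuracy gain per tenfold increase in frequency, which is why a linear gain requires exponentially more data.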


:::info Authors:

(1) Vishaal Udandarao, Tubingen AI Center, University of Tubingen, University of Cambridge, and equal contribution;

(2) Ameya Prabhu, Tubingen AI Center, University of Tubingen, University of Oxford, and equal contribution;

(3) Adhiraj Ghosh, Tubingen AI Center, University of Tubingen;

(4) Yash Sharma, Tubingen AI Center, University of Tubingen;

(5) Philip H.S. Torr, University of Oxford;

(6) Adel Bibi, University of Oxford;

(7) Samuel Albanie, University of Cambridge and equal advising, order decided by a coin flip;

(8) Matthias Bethge, Tubingen AI Center, University of Tubingen and equal advising, order decided by a coin flip.

:::

:::info This paper is available on arXiv under CC BY 4.0 DEED license.

:::


The FewShot Prompting Publication | Sciencx (2025-07-09T11:00:04+00:00) How Concept Frequency Affects AI Image Accuracy. Retrieved from https://www.scien.cx/2025/07/09/how-concept-frequency-affects-ai-image-accuracy/
