This content originally appeared on DEV Community and was authored by Danielle Ellis

The code we choose can affect the speed and performance of our program. How do we know which algorithm is most efficient? Big O notation is used in computer science to describe how quickly an algorithm's runtime grows as the size of its input (n) grows.

Big O gives an upper bound on that growth and is usually applied to the worst-case scenario: the maximum number of steps an algorithm takes to solve a problem. Big Omega, on the other hand, gives a lower bound and is often associated with the best-case scenario: the minimum number of steps.
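To make the difference between the best and worst case concrete, here is a minimal sketch in Python. The function name `linear_search_steps` and the sample list are made up for illustration; the function counts how many comparisons a simple linear search performs.

```python
def linear_search_steps(items, target):
    """Return (index, comparisons); index is -1 if target is not found."""
    comparisons = 0
    for index, value in enumerate(items):
        comparisons += 1
        if value == target:
            return index, comparisons
    return -1, comparisons


data = [4, 8, 15, 16, 23, 42]

# Best case: the target is the first element, so one comparison is enough.
best = linear_search_steps(data, 4)

# Worst case: the target is missing, so every element is compared once.
worst = linear_search_steps(data, 99)
```

With six elements, the best case takes 1 comparison and the worst case takes 6, which is why linear search is described as O(n).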

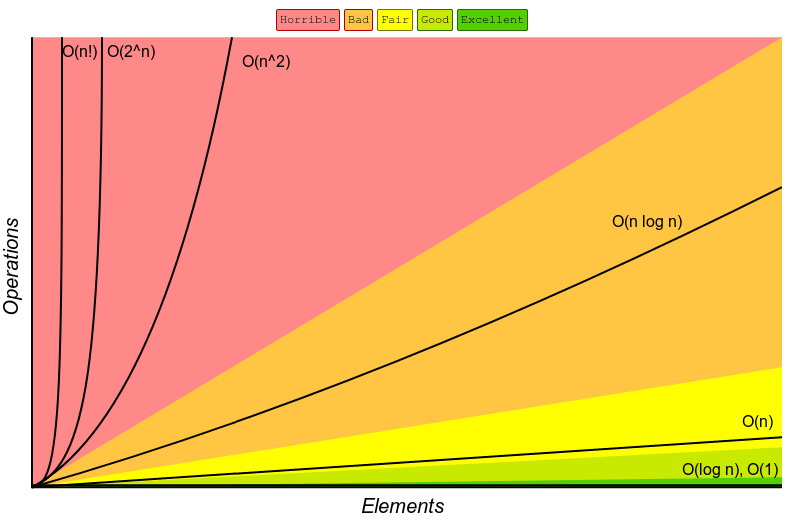

Common runtimes, from least to most efficient:

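- O(n^2): Quadratic time - runtime grows in proportion to the square of (n), so doubling the input roughly quadruples the work.

- O(n): Linear time - runtime grows in direct proportion to (n).

- O(log n): Logarithmic time - each step halves the remaining dataset until the target is found, as in a binary search.

- O(1): Constant time - runtime stays the same no matter how large (n) grows.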
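The four runtimes above can be sketched in Python as follows. These functions are illustrative examples chosen for this post, not the only way to get each runtime, and their names are hypothetical.

```python
def has_duplicate(items):
    """O(n^2): compares every pair of elements with nested loops."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def contains(items, target):
    """O(n): may have to look at every element once."""
    for value in items:
        if value == target:
            return True
    return False


def binary_search(sorted_items, target):
    """O(log n): halves the search range on every step (input must be sorted)."""
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return -1


def get_first(items):
    """O(1): a single index lookup, regardless of list length."""
    return items[0]
```

Note that `binary_search` assumes the list is already sorted; on unsorted input the halving step does not work.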

Big O Complexity chart

This chart shows how each runtime grows, with the green shaded area marking the most efficient runtimes and the red shaded areas marking the least efficient.


Danielle Ellis | Sciencx (2021-09-26T23:04:51+00:00) Big O Basics. Retrieved from https://www.scien.cx/2021/09/26/big-o-basics/
