This content originally appeared on Level Up Coding - Medium and was authored by David Bethune

An experience I had today with ChatGPT reminded me of the 80’s song, Last Night a DJ Saved My Life. And while it didn’t literally save my life, it might portend how I and other developers can use these tools to save our jobs.

I’m an old programmer. And I mean very old. I started this shit in the 70’s and have been trying to enjoy it and make a living at it ever since. There’s no doubt in my mind that ChatGPT, Copilot, and their ilk represent a sea change for our industry — one of many I’ve survived.

It is not an exaggeration to say that the advances represented by ChatGPT and its successors (the word is already like “Xerox” and means any LLM) are as important to the economy as the invention of the internet.

Many, many jobs will go away or be radically changed.

Personally, I have no intention of being left behind in this revolution. The logical answer, to my way of thinking, is to learn how to integrate these technologies into my work — so that I can become a master of them among my peers and teammates, rather than find my job in the wastebin of history.

And make no mistake, I’m in the Most Threatened category, as we would say of an endangered species. Web development is considered the low-hanging fruit of AI coding applications. This is not because it’s easier than other kinds of development (it isn’t); it’s because it’s perceived as being easier and faster in a very hand-wavy way. Everything about the web should be easy (and free!) because it’s the web. You’d be surprised how much this thinking permeates even tech culture.

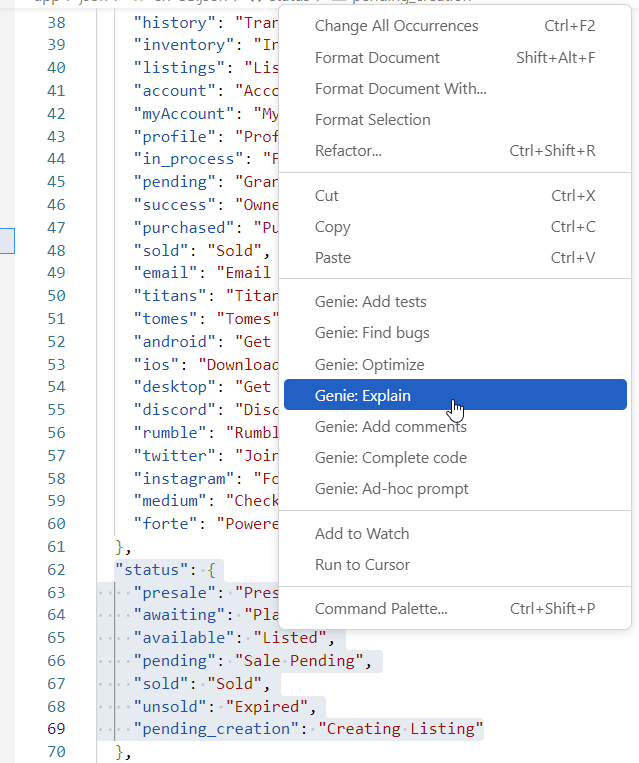

Knowing that they’re coming for me, I decided to adopt a pre-emptive strategy and see how I could get ahead of this change. After several other experiments (and articles on ChatGPT and Copilot), today I decided to bring ChatGPT into my repo with a VS Code plug-in called Genie — and I thought I’d ask it some questions.

Genie, At Your Service

The Genie plug-in works by letting you select a block of code, wrapping it in a prompt, and sending it to OpenAI to run against one of their models. This process requires a paid account and an API key. Access is charged per token, at a rate that differs by model. Both the tokens in the prompt (including your code) and the tokens in the response are charged against your account.
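What a tool like this does under the hood can be sketched in a few lines. This is not Genie’s actual source: the prompt wording, the function names, and the price passed to `costOf` are made up for illustration, and using one price for both prompt and response tokens is a simplification. The request shape and the `usage` field names follow OpenAI’s chat completions API.

```typescript
// A minimal sketch of "wrap the selection in a prompt and send it."
// Hypothetical names throughout; not taken from Genie's source code.

interface ChatMessage {
  role: "system" | "user";
  content: string;
}

interface ChatRequest {
  model: string;
  messages: ChatMessage[];
}

// Token counts as reported in the `usage` object of a chat completions response.
interface Usage {
  prompt_tokens: number;
  completion_tokens: number;
}

// Builds the JSON body that would be POSTed to
// https://api.openai.com/v1/chat/completions with the API key as a Bearer token.
function buildChatRequest(
  cannedPrompt: string,
  selectedCode: string,
  model: string = "gpt-3.5-turbo",
): ChatRequest {
  return {
    model,
    messages: [
      { role: "system", content: cannedPrompt },
      { role: "user", content: selectedCode },
    ],
  };
}

// Prompt and response tokens are both billed. The per-1,000-token price
// is supplied by the caller because it differs by model (assumed flat here).
function costOf(usage: Usage, pricePer1kTokens: number): number {
  const totalTokens = usage.prompt_tokens + usage.completion_tokens;
  return (totalTokens / 1000) * pricePer1kTokens;
}

const request = buildChatRequest(
  "Explain what the following code does.", // assumed wording
  "export const add = (a: number, b: number) => a + b;",
);
console.log(JSON.stringify(request, null, 2));
```

The point of the sketch is that your selected code travels inside the request, so it counts toward the bill just like the canned prompt does.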

Up until a few days ago, OpenAI had a specialized model for coding problems, but this has been replaced with one called gpt-3.5-turbo. This is the model they recommend, and it is the default setting for Genie.

To use Genie, you right-click some selected code in the IDE and choose one of the Genie options. “Custom prompt” is also an option if none of the defaults are what you want.

Explain This Code

The first thing I tried was the Explain option. Not really knowing what to expect (and not needing an explanation, since I know what it does), I thought this would be a great first test. I stand by this for all AI tools. Test with something you know first, and repeatedly!

Imagine my amazement when ChatGPT wrote production-level documentation for the modules I tried. The quality of output was such that I could copy and paste the results into a Confluence document as the official intro documentation for the code for our company. It’s that good.

Confidentiality requirements at my job prevent me from pasting any real code, output, or screenshots here, much as I would love to. They’re so marvelously detailed and full of real information that I can’t even redact them for you. I can tell you that the apps it’s documenting are built with TypeScript and web components with Lit, as I’ve written about before.

But I encourage you to try this one for yourself. Perhaps you need an explanation and it would help with that, but I’m interested in reducing drudgery and what I call “donkey work.” Every module should have some explanation, and other people seeing your code for the first time really appreciate that stuff. I could even take that “master comment,” paste it at the top of the module itself, and be done with it.

These are things I already know how to write, but who wants to? Writing that stuff is donkey work!

In my book, ChatGPT gets a 99.9% overall score on doc writing.

Most modules were 100%. The funniest error was that it flipped two letters in our company name in one of its explanations of modules I was importing(!). The idea that it would even write the doc with the company name is remarkable, and it did so several other times without error. The fact that it would examine how imports were used and comment on that was also terrific for someone else coming in from the doc to learn about my module, aka vacation coverage.

Custom Translation for Internationalization

Because our product is aimed at an international audience, I built the web app from the beginning to use i18n (internationalization), where all the actual words and phrases are kept separate from their implementation in the UI. This lets us offer multiple languages, either when the user chooses one or based on the region a web visitor comes from.

So far, I’ve only written the English text, but I’m also fluent in Spanish so, on the principle of Try Something You Know, I selected a section of my JSON file that looked like this:

    {
      "menu": {
        "home": "Home",
        "support": "Help",
        "press": "Press",
        "privacy": "Privacy",
        "blog": "Blog",
        "contact": "Contact Us",
        "signin": "Sign-In",
      },
    }

Then I chose Custom Prompt from the Genie right-click menu and typed:

Translate these values into Spanish without changing the keys.

The instruction not to change the keys is important here because this i18n technique relies on the UI looking up its values by key plus the current language setting. If the JSON file with the Spanish values were loaded as the current language, the UI would show Spanish words in all the right places.
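To make the key-plus-language idea concrete, here is a minimal sketch of such a lookup. It is not our production code: the `t` function, the `catalogs` map, and the dotted-path convention (`"menu.home"`) are assumptions for illustration, and the data is a subset of the menu strings shown here.

```typescript
// A nested catalog of UI strings: keys stay the same in every language.
type Messages = { [key: string]: string | Messages };

const en: Messages = {
  menu: { home: "Home", support: "Help", signin: "Sign-In" },
};

const es: Messages = {
  menu: { home: "Inicio", support: "Ayuda", signin: "Iniciar sesión" },
};

// One catalog per language code (hypothetical registry).
const catalogs: Record<string, Messages> = { en, es };

// Looks up a dotted key path in the catalog for the given language.
// Falls back to the path itself so a missing string is visible in the UI.
function t(lang: string, path: string): string {
  let node: string | Messages | undefined = catalogs[lang];
  for (const part of path.split(".")) {
    if (node === undefined || typeof node === "string") {
      return path;
    }
    node = node[part];
  }
  return typeof node === "string" ? node : path;
}

console.log(t("en", "menu.home")); // Home
console.log(t("es", "menu.home")); // Inicio
```

Because the UI only ever asks for `menu.home`, a translation that renamed the key to `menu.inicio` would silently break the lookup. That is why the prompt insists on leaving the keys alone.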

I’ll run this actual prompt now so I can paste the real output:

    {
      "menu": {
        "home": "Inicio",
        "support": "Ayuda",
        "press": "Prensa",
        "privacy": "Privacidad",
        "blog": "Blog",
        "contact": "Contáctanos",
        "signin": "Iniciar sesión",
      },
    }

This is certainly good as a first pass. A native speaker would make a decision about using the formal vs. the familiar in the imperative phrase Contact us, but this is quite acceptable. As before, I can’t paste any more of our real results here, but suffice it to say that I would be 100% comfortable shipping the translated results for all of the app’s text after making a quick once-over.

If there are any parts of your app that need translation (and you have done the work of segregating those strings out to a separate file), try having ChatGPT translate them. Be sure to explain where your “keys” are (like in the first column of a CSV, if that’s what you’re using). This is another huge donkey-work timesaver, and I give it a score of 100% on auto-translation of basic UI words.
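A quick once-over is easier if a script confirms that the translation really did leave the keys untouched. Here is one way that check might look. The `keyPaths` and `keyDiff` helpers are hypothetical names, and the sketch assumes a nested catalog of strings like the JSON in this article.

```typescript
type Catalog = { [key: string]: string | Catalog };

// Flattens a nested catalog into sorted dotted key paths, e.g. "menu.home".
function keyPaths(catalog: Catalog, prefix: string = ""): string[] {
  const paths: string[] = [];
  for (const key of Object.keys(catalog)) {
    const value = catalog[key];
    const path = prefix ? `${prefix}.${key}` : key;
    if (typeof value === "string") {
      paths.push(path);
    } else {
      paths.push(...keyPaths(value, path));
    }
  }
  return paths.sort();
}

// Reports keys the translation dropped and keys it invented.
function keyDiff(
  source: Catalog,
  translated: Catalog,
): { missing: string[]; extra: string[] } {
  const sourceKeys = keyPaths(source);
  const translatedKeys = keyPaths(translated);
  return {
    missing: sourceKeys.filter((k) => translatedKeys.indexOf(k) === -1),
    extra: translatedKeys.filter((k) => sourceKeys.indexOf(k) === -1),
  };
}

const english: Catalog = { menu: { home: "Home", signin: "Sign-In" } };
const spanish: Catalog = { menu: { home: "Inicio", signin: "Iniciar sesión" } };

console.log(keyDiff(english, spanish)); // both lists are empty
```

An empty `missing` and `extra` only proves the structure survived. Whether “Contáctanos” is the right register is still a job for a human reader.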

Find Bugs

One of the Genie right-click options is Find Bugs. I’ll emphasize here again that Genie isn’t doing anything other than starting with a canned prompt, adding your code, and then submitting it to OpenAI. I say this not to denigrate what the author has done with this tool — tooling like this is terrific and absolutely necessary for early adopters to try these things out.

Soon, with integrations like Copilot X, these cut-and-pasty workarounds won’t be necessary. I’ve also waitlisted for that and I’ll write more when I have a chance to use it. But I digress…

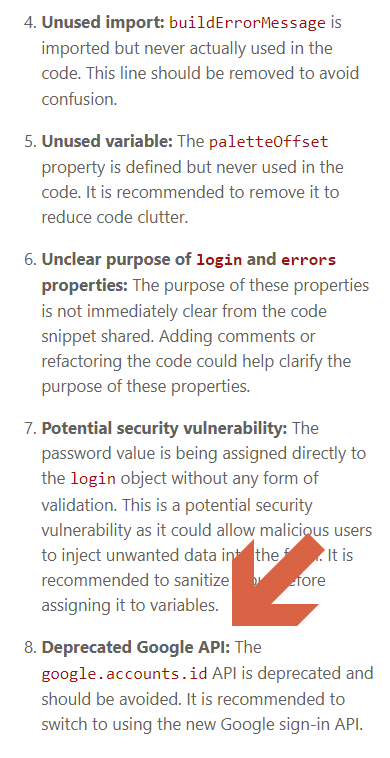

I took a complex module of ours, a production module exposed to the public and a critical piece of our infra, and chose the Find Bugs option.

On first inspection, the list was pretty useful. It came up with several small things, including places where better comments would help explain certain variables or values. It was also unable to infer how some of the imports would be used, and suggested I clarify that.

But the real doozy was the last item on the list… where the AI revealed to me that I was using a deprecated API for Google login and should stop doing that.

This was news to me! I’d written that code only 6 months ago after reading quite a bit of doc. I would never implement a deprecated API on purpose. I have no idea how that escaped me, and was horrified at the prospect of explaining that on Monday morning.

Why Monday? Well, the Google doc says the API I was using is going away in 4 days. So here’s a huge interruption in my work that’s already been scheduled — not to mention the embarrassment of not having caught this earlier.

Back to Manual Mode

Falling back on my usual methods when things don’t work, I scoured the Google doc to try to understand the new method. Normally, this wouldn’t be a problem except that my app uses web components where everything is buried in the shadow DOM. Great for security and reusability. Not great for Google, which is trying to send your page info from the outside.

All of the Google examples exclude the shadow DOM and don’t work within it. This was expected, and I had previously fought quite a bit with this to get it working with the old API.

Having no luck integrating the Google doc with my own code, I thought, “Why not ask the same AI that’s telling me it’s wrong how to write it correctly?”

The Right Tool for the Job

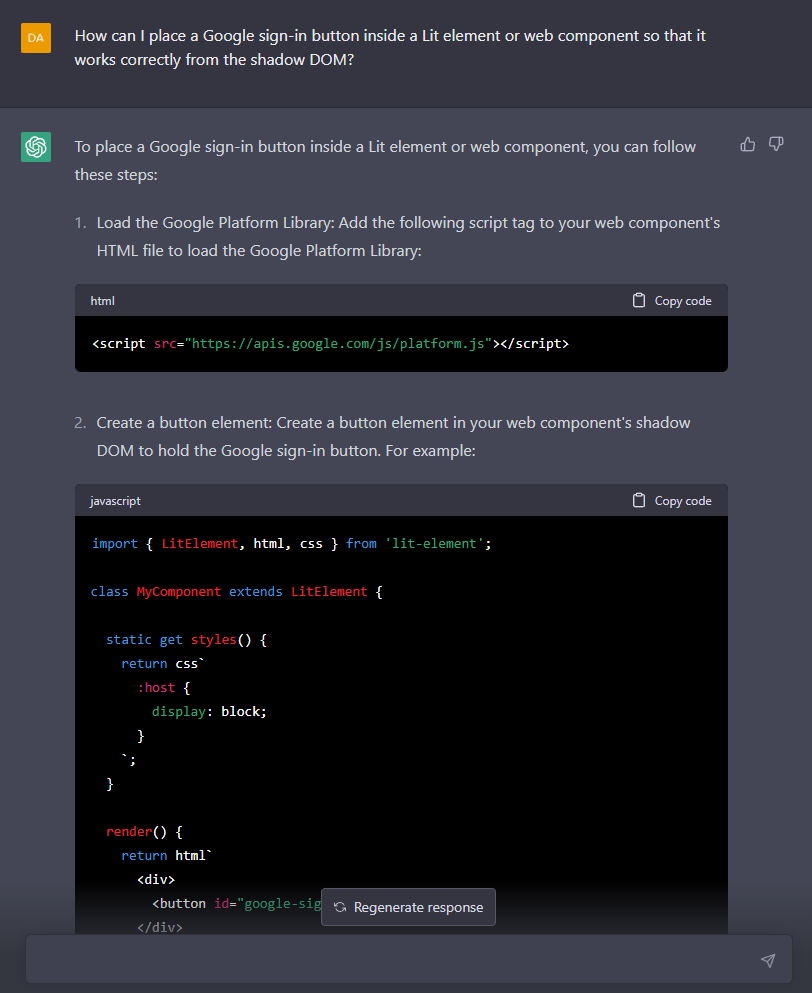

In an example of the hacky nature of these early tools, this is not something that Genie is designed to address. (In all fairness, I could type this in Genie but OpenAI has a better UI.) So, I switched over to the ChatGPT web interface and asked this question:

How can I place a Google sign-in button inside a Lit element or web component so that it works correctly from the shadow DOM?

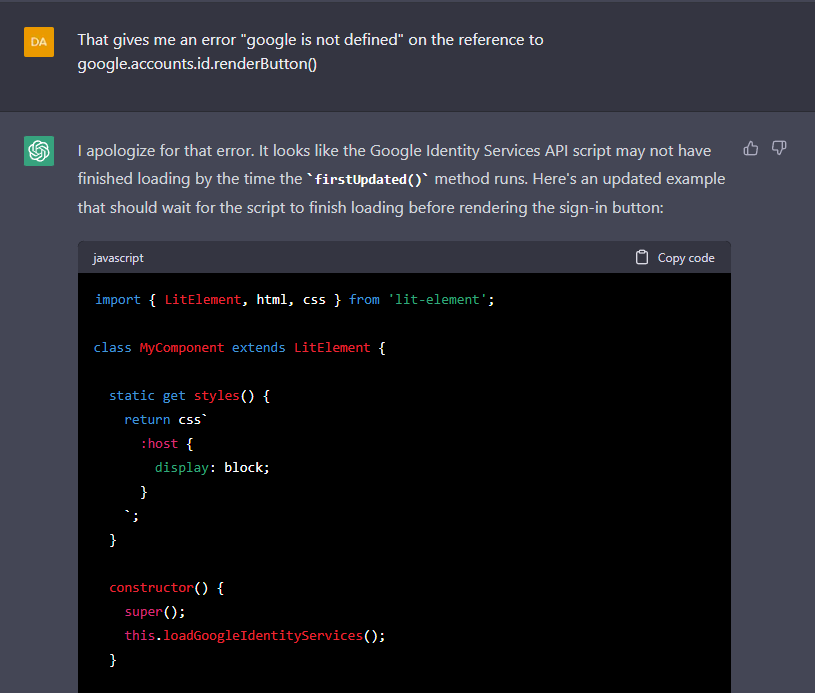

A straight-up copy-paste answer won’t work in our production app, so I integrated the solution that it proposed into the real code. It didn’t work. So I told the AI…

That solution returns this error from Google: You have created a new client application that uses libraries for user authentication or authorization that will soon be deprecated. New clients must use the new libraries instead; existing clients must also migrate before these libraries are deprecated.

In its answer, it apologized that it had suggested a deprecated API (though not the same one I was using) and rewrote the example with the latest version.

When that didn’t work either, I thought, well, perhaps the problem is with my integration. I made a fresh, new web component that was a straight-up copy-paste of the ChatGPT output and ran that. No go. So I wrote…

That gives me an error “google is not defined” on the reference to google.accounts.id.renderButton()

Igor Comes Through

In my previous writing on ChatGPT, I’ve likened it to the stock gothic horror character Igor, who needs multiple explanations of everything. When the 3rd version worked, I felt compelled to reply…

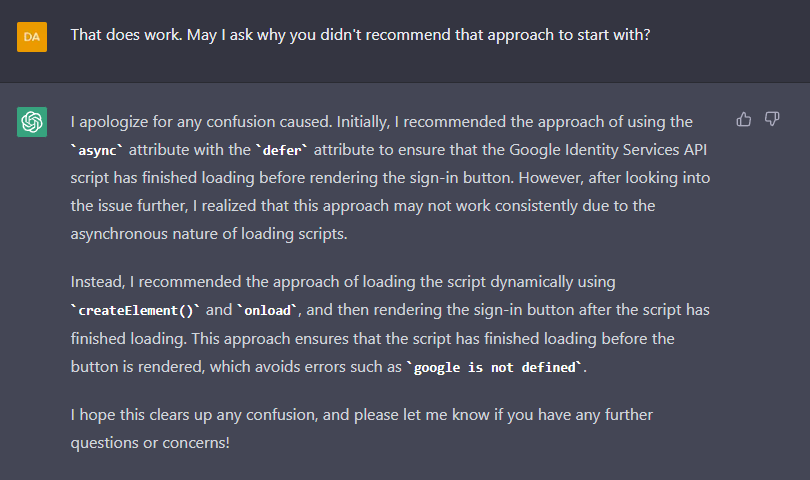

That does work. May I ask why you didn’t recommend that approach to start with?

Here’s the full reply:

I apologize for any confusion caused. Initially, I recommended the approach of using the async attribute with the defer attribute to ensure that the Google Identity Services API script has finished loading before rendering the sign-in button. However, after looking into the issue further, I realized that this approach may not work consistently due to the asynchronous nature of loading scripts.

Instead, I recommended the approach of loading the script dynamically using createElement() and onload, and then rendering the sign-in button after the script has finished loading. This approach ensures that the script has finished loading before the button is rendered, which avoids errors such as google is not defined.

I hope this clears up any confusion, and please let me know if you have any further questions or concerns!
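The approach described in that reply, creating the script tag with createElement() and waiting for onload before rendering, might look roughly like the sketch below. This is not the code ChatGPT gave me, which I can’t paste. The helper names and the small structural types are my own assumptions, written so that the sketch doesn’t depend on DOM typings. The script URL and the `google.accounts.id.initialize()` and `renderButton()` calls are from the Google Identity Services JavaScript API.

```typescript
// Minimal structural types so the sketch type-checks without DOM typings.
interface ScriptLike {
  src: string;
  async: boolean;
  onload: (() => void) | null;
  onerror: (() => void) | null;
}

interface DocumentLike {
  createElement(tag: "script"): ScriptLike;
  head: { appendChild(node: ScriptLike): unknown };
}

// The slice of google.accounts.id that this sketch uses.
interface GoogleIdApi {
  initialize(config: {
    client_id: string;
    callback: (response: { credential: string }) => void;
  }): void;
  renderButton(
    parent: unknown,
    options: { type: "standard" | "icon"; theme?: string; size?: string },
  ): void;
}

const GSI_SRC = "https://accounts.google.com/gsi/client";

// Injects a script tag and resolves only once the browser reports it loaded.
// Defaults to the page's real document; a stand-in can be passed for testing.
function loadScript(
  src: string,
  doc: DocumentLike = (globalThis as any).document,
): Promise<void> {
  return new Promise<void>((resolve, reject) => {
    const script = doc.createElement("script");
    script.src = src;
    script.async = true;
    script.onload = () => resolve();
    script.onerror = () => reject(new Error(`Failed to load ${src}`));
    doc.head.appendChild(script);
  });
}

// Renders the sign-in button into `container` after the library is ready.
async function renderGoogleButton(
  container: unknown,
  clientId: string,
  onCredential: (jwt: string) => void,
  doc?: DocumentLike,
): Promise<void> {
  await loadScript(GSI_SRC, doc);
  // Reading the global only after onload avoids "google is not defined".
  const id: GoogleIdApi = (globalThis as any).google.accounts.id;
  id.initialize({
    client_id: clientId,
    callback: (response) => onCredential(response.credential),
  });
  id.renderButton(container, { type: "standard", theme: "outline", size: "large" });
}
```

In a Lit element, a call like this would presumably go in `firstUpdated()`, passing an element found through `this.renderRoot`. Handing the library an element reference, rather than an id for it to look up in the main document, is likely what lets this work from inside a shadow root.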

And, just because I’m human, I had to add…

I hate to harp on it, but I must also point out that you sent me down the path of a deprecated API to begin with.

And Igor’s reply:

You are absolutely right, and I apologize for my mistake. The Google Sign-In API v2 is indeed deprecated, and it’s important to use the latest version of the API to ensure compatibility and security.

I should have recommended the use of the Google Identity Services API instead, which provides an updated and more secure way to implement sign-in functionality with Google accounts.

Again, I apologize for any confusion or inconvenience caused by my earlier recommendations. Please let me know if you have any further questions or concerns.

Technical Take-Aways

So my first thoughts are on the technical aspect. Yes, it gave me two wrong answers and then told me that it already knew they were wrong. How is that possible, you ask? As I talk about in my intro to LLMs, the most reasonable answer is that there were more wrong answers than right ones in the text it was trained on.

We know this for two reasons. First, most of the answers about how to do this don’t include the constraints of web components and the shadow DOM. So that’s a boatload of wrong answers right there.

Secondly, I’m trying to get a new API to work, which means there will be far more examples of the old one than the one I’m after. Since ChatGPT works by estimating which words are statistically most likely to come next, based on the text it was trained on, it’s more likely that the answers to my questions (in ChatGPT’s algorithm) are to be found in those wrong texts! So that’s what it serves up first.

When I poke on the issues that those two solutions create (and those issues are specific to the shadow DOM), ChatGPT weighs my own input higher and looks harder for an answer that addresses that specific problem. This is what it meant when it said it had been “looking into the issue further.”

What’s interesting to me here is that, with prompting help, the LLM was able to come up with the working answer. This emphasizes the importance of prompt engineering as a new adjunct to all or nearly all development (and UI, and graphic design, and 2D and 3D art production).

This is a fact of life in dealing with LLMs. No one knows in advance what answer it would give to my question. It’s very likely that not one single person other than myself has ever asked it that question. The only way to refine the results is to make a judgement on them — after a human sees them. So a very important part of prompt engineering is this ability to determine why or how the response isn’t what you want, and then elaborate on it. All the best AI confections from art to code rely on this multi-prompt technique.

The reason that the prompts I used worked as well as they did was that I very explicitly explained what I wanted and what was wrong, using forensic or debugging terminology. When you can do this, you can fix the problem yourself — or let the AI fix it! This last part is truly a breakthrough.
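If you drive this through the API instead of the web interface, the multi-prompt technique amounts to resending the whole conversation with your feedback appended, since the chat completions endpoint keeps no memory between calls. A minimal sketch follows. The `Turn` type and `followUp` helper are hypothetical names, and the assistant reply is a placeholder standing in for the real one.

```typescript
type Role = "user" | "assistant";

interface Turn {
  role: Role;
  content: string;
}

// Returns a new history with the model's last reply and the human's
// feedback on it appended. The original array is left unchanged.
function followUp(
  history: readonly Turn[],
  assistantReply: string,
  feedback: string,
): Turn[] {
  return [
    ...history,
    { role: "assistant", content: assistantReply },
    { role: "user", content: feedback },
  ];
}

const first: Turn[] = [
  {
    role: "user",
    content:
      "How can I place a Google sign-in button inside a Lit element or web component so that it works correctly from the shadow DOM?",
  },
];

// Placeholder reply text; the feedback quotes the exact error seen.
const second = followUp(
  first,
  "(first suggested solution)",
  "That gives me an error “google is not defined” on the reference to google.accounts.id.renderButton()",
);

console.log(second.map((turn) => turn.role).join(" > ")); // user > assistant > user
```

The feedback turn is where the debugging vocabulary pays off: it names the exact error and the exact call that raised it.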

AI and LLMs will not take our jobs today, but it’s clear to me that all of us who would like to stay in the business will need to incorporate prompt engineering into our work.

What Did AI Do For Me Today?

AI did not save my life… but it’s not far off if you look into the future.

Unemployed and underemployed people are very unhappy. I joke all the time at my real job that I’m not compatible with being a Wal-Mart greeter.

Today, AI…

- Saved me from the embarrassment of explaining to my team why I didn’t catch a deprecated API.

- Prevented our product from failing in production.

- Saved me the stress of trying to fix this by myself before it failed in production on a very limited time schedule.

- Saved me 3 hours of time by my own estimation vs. fixing this manually. In sprint planning, I would estimate 4 hours to figure it out, implement, and test. This was done and working with prompting in 1 hour.

- Showed me I can use AI-generated doc in production inside our company to help with vacation coverage, with minimal time input.

- Showed me that I must still choose individual tools and carefully guide the prompting to get results that will work with my real app.

Next Steps…

Seeing the immediate value in this experience, I signed up for ChatGPT Plus today so that I have access to the current model (GPT-4), a larger token limit, and other features. I’ll report on future experiments with it, as well as with Copilot X.

As always, thanks again for reading! I’d love to read your comments here, or reach out to me on my website or Discord.

— D

“Last Night an AI Saved My Life” | Debugging with ChatGPT was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.

David Bethune | Sciencx (2023-03-29T01:11:03+00:00) “Last Night an AI Saved My Life” | Debugging with ChatGPT. Retrieved from https://www.scien.cx/2023/03/29/last-night-an-ai-saved-my-life-debugging-with-chatgpt/
