This content originally appeared on Level Up Coding - Medium and was authored by Christopher Angell

We’ve all been there. Happily using an app, and then, click, spinning ball. The app no longer responds, and we have to wait an eternity before we can interact with it again. Nothing kills user experience faster than a slow, unresponsive app. The solution is typically simple: push the painful task off to a background thread, process, or server, and wait for the result to come back, all the while letting the user interact with a responsive application. But how do you do this at scale in a complicated application with many actions that need to be coordinated between GUI components? Here we introduce one solution: an application event bus with the asynchronous requirements built into it, so that both synchronous and asynchronous events are handled by the same bus. This cleanly separates the logical concerns of the application behind an event interface while concentrating its asynchronous nature in a single location.

In this article, we’ll discuss a desktop application, but with some creativity the principle can be applied more broadly. Notably, if your target language and framework support cooperative multitasking (think async/await), some modification will be needed, but read on for inspiration and possible ideas nonetheless. The repository for the example can be found on GitHub.

Motivating the event bus

To understand the need for an event bus, consider a common example: two windows and an application service whose behavior needs to be coordinated. A search window sends queries to a database, which returns short results that can be selected and displayed in a non-modal result window that always stays open next to the search window. The result window also allows a result to be modified and the change to be saved to the database. In principle, the result window or the database could then trigger the search window to refresh its search results.

The naïve solution to this situation is shown on the left of Fig. 1. Since the result window and the search window sit at the same logical level of the application, there’s no clear way to make one subordinate to the other. Both depend on the database, the search window affects what is shown in the result window, and the result window could in principle affect the search window by updating a result. This solution would require giving the search window a direct reference to the internal state of the result window so that it can update it, giving both windows a direct reference to the database, and possibly handing the result window a reference to the search window. We could make a container object that holds both windows and coordinates their behavior, but that wouldn’t scale well, and the behavior would be hard-coded into that component. Whichever way we choose, we only have three components here and we already have a ball of highly coupled mud.

By introducing an event bus, it is possible to disentangle the three components and have them talk only to the event bus, as shown on the right of Fig. 1. When each component’s behavior is expressed only in terms of the events it can receive, the components no longer need to know the internals of other objects and can rely on the event bus to transmit intent across the application. The event bus works by registering handlers for a given event, and then emitting events on the bus in response to user interactions or to transmit results from service-layer components such as databases and network connections. In this way, new behavior can be added to an application simply by registering another event handler with the event bus. Examples include sending an email or text notification at the end of a long-running process, or providing database status updates to all windows of the application. The sender of an event is neatly abstracted away from its receivers, reducing coupling between components.
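To make the register/emit idea concrete, here is a minimal, purely synchronous sketch with no threading at all. The SimpleEventBus class and the QueryEvent type are hypothetical names for illustration, not code from the example repository; handlers are looked up by the event's class.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class SimpleEventBus:
    """Hypothetical minimal synchronous bus: routes each event by its type."""

    def __init__(self) -> None:
        # Maps an event class to the handlers interested in it.
        self._handlers: DefaultDict[type, List[Callable[[Any], None]]] = defaultdict(list)

    def connect(self, event_type: type, handler: Callable[[Any], None]) -> None:
        self._handlers[event_type].append(handler)

    def emit(self, event: Any) -> None:
        # The emitter never learns who, if anyone, receives the event.
        for handler in self._handlers.get(type(event), []):
            handler(event)


class QueryEvent:
    """Hypothetical event type used only for this illustration."""

    def __init__(self, text: str) -> None:
        self.text = text


bus = SimpleEventBus()
received = []
# Two independent receivers; neither knows about the other or about the sender.
bus.connect(QueryEvent, lambda e: received.append(("search window", e.text)))
bus.connect(QueryEvent, lambda e: received.append(("notifier", e.text)))
bus.emit(QueryEvent("python"))
print(received)  # [('search window', 'python'), ('notifier', 'python')]
```

Adding a feature such as a notification is just one more connect() call; neither the emitter nor the existing receivers change.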

GUIs and asynchronous behavior

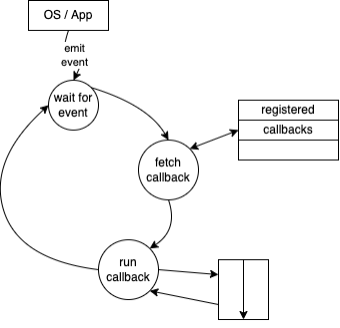

To understand why asynchronous behavior is intrinsically necessary in a GUI application, and why this detail needs special care, we first need to understand how a GUI event loop works. As shown in Fig. 2, the event loop runs on the main thread of the application and waits for an event, usually a GUI interaction reported by the OS. During this time the thread is completely idle. As soon as an event arrives, the thread comes alive and looks up the handlers connected to the event. It runs each handler in turn and, upon completion, returns to its quiescent state to wait for the next event. This burst of activity on event arrival comes with a very important caveat: it all happens on one thread, and only one thread, which can’t do anything else while it works. All subsequent GUI interactions are blocked until it finishes. Hence the spinning ball when a handler takes too long, for example by downloading a website or querying the database from the main thread, and hence the need to move such work off the main thread so that the event loop can keep chugging along.
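The loop described above can be imitated with a toy sketch built on the standard library's queue module. This is not how tkinter is implemented internally; it only shows the property that matters here: events are taken one at a time on a single thread, so a slow handler delays everything queued behind it. The function names are hypothetical.

```python
import queue
import threading
import time
from typing import Callable, Dict, List, Optional, Tuple


def run_event_loop(events: "queue.Queue[Optional[str]]",
                   handlers: Dict[str, List[Callable[[], None]]]) -> List[Tuple[str, int, float]]:
    """Hypothetical toy event loop: handle events until a None sentinel arrives.

    Returns (event, thread id, time handled) for each event so the
    sequential, single-thread behavior can be inspected.
    """
    log = []
    while True:
        event = events.get()  # the thread sits idle here until an event arrives
        if event is None:
            return log
        for handler in handlers.get(event, []):
            handler()  # while this runs, no other event can be processed
        log.append((event, threading.get_ident(), time.monotonic()))


def slow_handler() -> None:
    time.sleep(0.2)  # stands in for a database query or a download


events: "queue.Queue[Optional[str]]" = queue.Queue()
for name in ("click", "keypress", None):
    events.put(name)

start = time.monotonic()
log = run_event_loop(events, {"click": [slow_handler], "keypress": [lambda: None]})
print([(event, round(t - start, 1)) for event, _, t in log])
```

The keypress is only handled after the 0.2 s sleep in the click handler finishes; in a real GUI, that delay is the spinning ball.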

But, you might ask, why does the GUI only run on one thread? GUI toolkits typically require that GUI components be created and updated only from the thread running the GUI event loop. Because of this, as we’ll see below, GUI systems provide a way to trigger the event loop safely from other threads, allowing control over updates to move safely back to the main thread. You can therefore spawn other threads to do work, provided they use this built-in mechanism to ensure that anything affecting the GUI happens on the main thread. On a side note, you might be able to get away with doing GUI updates from other threads, but expect your application to crash mysteriously once in a while if you do.
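A minimal sketch of handing work back to the main thread, assuming a generic mailbox of callables rather than any particular toolkit's API: a worker thread computes a result and posts a GUI update to a thread-safe queue, and only the main thread runs those updates. The names main_thread_calls, update_label, and drain_main_thread_calls are hypothetical; with tkinter, the draining function could be scheduled periodically from the main thread with root.after().

```python
import queue
import threading
from typing import Callable, List, Tuple

# Hypothetical thread-safe mailbox of callables that must run on the main (GUI) thread.
main_thread_calls: "queue.Queue[Callable[[], None]]" = queue.Queue()
log: List[Tuple[str, int]] = []


def update_label(text: str) -> None:
    """Hypothetical GUI update; records which thread actually ran it."""
    log.append((text, threading.get_ident()))


def background_work() -> None:
    result = sum(range(1_000_000))  # the slow part, done off the main thread
    # Don't touch the GUI from here: hand the update back to the main thread.
    main_thread_calls.put(lambda: update_label(f"result={result}"))


def drain_main_thread_calls() -> None:
    """Run queued GUI updates; must only be called from the main thread."""
    while True:
        try:
            call = main_thread_calls.get_nowait()
        except queue.Empty:
            return
        call()


worker = threading.Thread(target=background_work)
worker.start()
worker.join()  # only for the demo; a real GUI keeps processing events instead
drain_main_thread_calls()
print(log)
```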

Here, the event bus can come to the rescue: it provides a way to handle asynchronous behavior while ensuring that GUI updates always occur on the main thread, and it lets the application grow flexibly as needed. Concentrating the asynchronous behavior in a single location also simplifies the design of a GUI application, because there is only one point, and one mechanism, where results from a background thread are moved back to the GUI event loop thread. The asynchronous event bus solves these problems by distinguishing asynchronous worker handlers, which are meant to run on separate threads, from synchronous GUI update handlers, which are meant to run on the main thread. While maintaining this split, the solution presented here handles the GUI update problem by simply pushing every emitted event to the main thread, where GUI update handlers are called directly and worker handlers are dispatched to separate threads. Note that events emitted from the main thread make a small round trip in the solution below, but this is the simplest approach that maintains the necessary abstractions and ensures that all events are dispatched from the main thread.

A practical example

Python is used to demonstrate the architecture shown on the right of Fig. 1, though it should be stressed that the principles are language- and GUI-agnostic. Only Python standard library modules are used. Snippets of the code are given below; the full example can be found here. The following modules were used:

- tkinter — a GUI toolkit included in the Python standard library. There are many examples and how-tos explaining how to use it, so most of the tkinter code is omitted below.

- concurrent.futures — a high-level standard-library interface for running callables concurrently. Its executors dispatch tasks to thread or process pools and return futures for the results.

- queue — thread-safe queues. They can be used to pass data safely between threads without explicit locking.

- collections — the namedtuple factory is used to create lightweight, immutable event classes without writing boilerplate. It is similar to a dataclass but less flexible, and it is available before Python 3.7.

Starting with the end in mind

To see in code how everything comes together, “__main__.py” is shown. As can be seen, the database and the two windows each depend only on the event bus and not on each other.

To test the asynchronous behavior of the app, clone the repository and run “python -m event_example”. The app should not stall. Then change the lines in “db.py” that read “is_async=True” to “is_async=False”. You should now notice that the app becomes temporarily unresponsive when buttons are clicked.

The asynchronous event bus

The event bus must do four things: create and manage the thread pool to which asynchronous tasks are dispatched, provide a way to register handlers, provide a mechanism to emit events, and ensure that every emitted event is dispatched on the main thread, regardless of which thread calls the emit function.

The thread pool (line 30) uses the default settings for ThreadPoolExecutor, which is usually good enough. In Python particularly, it’s important to use a thread pool rather than spawning a new thread for each task, to avoid an explosion of threads all contending for the GIL (global interpreter lock). Even with I/O-bound threads, Python has tricky concurrency problems that motivate keeping the total number of threads reasonable; as a rule of thumb, about 20 threads is a good upper bound.
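For comparison, a sketch of an explicitly capped pool using the real concurrent.futures API (max_workers and thread_name_prefix are standard ThreadPoolExecutor parameters). The cap of 20 simply follows the rule of thumb in the text and is an assumption, not a measured optimum; blocking_io is a hypothetical stand-in for I/O.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

# Explicit cap following the rule of thumb; the default (max_workers=None)
# is derived from the CPU count and capped at 32 (Python 3.8+).
MAX_WORKERS = 20


def blocking_io(i: int) -> str:
    """Hypothetical stand-in for a blocking network or database call."""
    time.sleep(0.01)
    return threading.current_thread().name


with ThreadPoolExecutor(max_workers=MAX_WORKERS, thread_name_prefix="event-bus") as pool:
    # 100 tasks are queued, yet the pool never creates more than MAX_WORKERS threads.
    futures = [pool.submit(blocking_io, i) for i in range(100)]
    thread_names = {f.result() for f in futures}

print(len(thread_names), sorted(thread_names)[:3])
```

Excess tasks simply wait in the executor's internal queue until a worker frees up, which is exactly the "waiting" state discussed in the caveats.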

Handler registration is done in the connect method (lines 75 and 77). Two separate mappings from events to handlers are maintained: one for handlers that should be called synchronously, and one for handlers that should be called asynchronously. Note that there is no limitation on an event: an incoming event could reasonably have multiple synchronous and multiple asynchronous handlers. One good reason for this is to make a synchronous GUI update when a given event is dispatched, signaling that the corresponding asynchronous processing has started. Additionally, the handler function or method is appended to a list, which, in the case of a method, keeps a strong reference to the underlying object. This causes a problem if the object should otherwise go out of scope and be garbage collected. For ideas on how to handle this, see the caveats below.

Emitting is handled by syncemit() (line 52), the thread-unsafe version: it looks up the handlers registered for the event type in both mappings, calls each synchronous handler in turn, and dispatches each asynchronous handler to the thread pool.

The mechanism for ensuring that an event is handled on the main thread is embedded in emit() (line 36), the thread-safe version of emitting. The incoming event is pushed onto a queue, and then a special GUI event, registered in the EventBus init method on line N, is sent to the GUI event loop. When that GUI event is pushed onto the GUI event loop (line 42), it is handled in turn on the main thread by calling the callbacks registered for it (registered on line 34), here _emit() on line 44. _emit() pops each event bus event off the queue and, since it is guaranteed to be running on the main thread, can safely call the synchronous version of emit directly.

A queue is used to move event bus events between threads because the GUI event loop provides no mechanism for ferrying information beyond the fact that a GUI event has occurred. For tkinter, that GUI event is simply a special identifying string for which we can register callbacks that are executed when the event is seen in the GUI event loop. Since event bus events can in principle be anything, something is needed to store each event bus event while the application waits for the GUI event loop to tell the event bus to process its events on the main thread. Because the asynchronous nature of the application means several events may arrive on the event bus before processing can occur, a variable-size container is needed, and the thread-safe queue module fits this need nicely.
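Putting these pieces together, the following is a sketch of such a bus, not the repository's code. To keep it runnable without a display, the way the main thread is woken is injected as a hypothetical notify_main callable; the docstring shows how it might be wired to tkinter with a virtual event, which assumes (as the approach above does) that event_generate may be called from worker threads with your Tcl/Tk build. In the demo, a small queue of wake-ups stands in for the GUI event loop.

```python
import queue
import threading
from collections import defaultdict, namedtuple
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, DefaultDict, List


class AsyncEventBus:
    """Sketch: events may be emitted from any thread but are dispatched on the main thread.

    notify_main is a hypothetical hook that must make process_pending() run on the
    main thread. With tkinter, one option is root.bind("<<EventBus>>",
    lambda e: bus.process_pending()) together with
    notify_main=lambda: root.event_generate("<<EventBus>>", when="tail").
    """

    def __init__(self, notify_main: Callable[[], None], max_workers: int = 20) -> None:
        self._notify_main = notify_main
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._pending: "queue.Queue[Any]" = queue.Queue()  # events awaiting the main thread
        self._sync: DefaultDict[type, List[Callable[[Any], None]]] = defaultdict(list)
        self._async: DefaultDict[type, List[Callable[[Any], None]]] = defaultdict(list)

    def connect(self, event_type: type, handler: Callable[[Any], None],
                is_async: bool = False) -> None:
        (self._async if is_async else self._sync)[event_type].append(handler)

    def emit(self, event: Any) -> None:
        """Thread-safe: queue the event and wake the main thread."""
        self._pending.put(event)
        self._notify_main()

    def process_pending(self) -> None:
        """Main thread only: drain every queued event (several may have piled up)."""
        while True:
            try:
                event = self._pending.get_nowait()
            except queue.Empty:
                return
            self._syncemit(event)

    def _syncemit(self, event: Any) -> None:
        """Not thread-safe: call GUI handlers inline, send workers to the pool."""
        for handler in self._sync.get(type(event), []):
            handler(event)
        for handler in self._async.get(type(event), []):
            # Exceptions raised here stay inside the returned future (see caveats).
            self._pool.submit(handler, event)

    def shutdown(self) -> None:
        self._pool.shutdown(wait=True)


# Demo: a queue of wake-ups stands in for the GUI event loop.
wakeups: "queue.Queue[None]" = queue.Queue()
bus = AsyncEventBus(notify_main=lambda: wakeups.put(None))
Search = namedtuple("Search", ["query"])   # hypothetical event types
Results = namedtuple("Results", ["rows"])
main_id = threading.get_ident()
seen = []


def search_db(event: Search) -> None:      # worker: runs on a pool thread
    bus.emit(Results([event.query.upper()]))


def show_results(event: Results) -> None:  # GUI update: runs on the main thread
    seen.append((event.rows, threading.get_ident() == main_id))


bus.connect(Search, search_db, is_async=True)
bus.connect(Results, show_results)
bus.emit(Search("spam"))
while not seen:  # the stand-in "main loop"
    wakeups.get(timeout=5)
    bus.process_pending()
bus.shutdown()
print(seen)  # [(['SPAM'], True)]
```

The worker never calls show_results itself; it only emits, and the result handler still runs on the main thread.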

The handlers

In each of the three working classes (the search window, the result window, and the database), event handler methods are connected to the event bus upon initialization. The database class has one handler each for searching, fetching, and updating, connected as asynchronous handlers on lines 26–28. The search and fetch handlers additionally emit their own events on the event bus (lines 38 and 44). Because emit is thread-safe, as described above, the resulting updates will safely arrive on the main thread even though these handlers run on threads other than the main thread. The code for the other two classes is similar, except that their handlers update the GUI directly and are therefore connected synchronously; the full code can be seen here.
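A sketch of what the database side might look like, assuming a bus object with connect(event_type, handler, is_async=...) and a thread-safe emit(event) as described above. The in-memory rows, the lock, and every event name except SearchEvent are hypothetical; RecordingBus is a test double that runs handlers inline so the flow of events is easy to follow.

```python
import threading
from collections import namedtuple
from typing import Any, Callable, Dict, List

SearchEvent = namedtuple("SearchEvent", ["query"])
SearchResultsEvent = namedtuple("SearchResultsEvent", ["matches"])  # hypothetical
FetchEvent = namedtuple("FetchEvent", ["key"])                      # hypothetical
ResultEvent = namedtuple("ResultEvent", ["key", "text"])            # hypothetical
UpdateEvent = namedtuple("UpdateEvent", ["key", "text"])            # hypothetical


class Database:
    """Hypothetical in-memory stand-in for the example's database service.

    It only knows the bus: its handlers are connected as asynchronous, and
    results go back out through bus.emit(), which must be thread-safe.
    """

    def __init__(self, bus: Any, rows: Dict[str, str]) -> None:
        self._bus = bus
        self._rows = dict(rows)
        self._lock = threading.Lock()  # pool threads may run handlers concurrently
        bus.connect(SearchEvent, self.on_search, is_async=True)
        bus.connect(FetchEvent, self.on_fetch, is_async=True)
        bus.connect(UpdateEvent, self.on_update, is_async=True)

    def on_search(self, event: SearchEvent) -> None:
        with self._lock:
            matches = sorted(k for k, v in self._rows.items() if event.query in v)
        self._bus.emit(SearchResultsEvent(matches))

    def on_fetch(self, event: FetchEvent) -> None:
        with self._lock:
            text = self._rows[event.key]
        self._bus.emit(ResultEvent(event.key, text))

    def on_update(self, event: UpdateEvent) -> None:
        with self._lock:
            self._rows[event.key] = event.text


class RecordingBus:
    """Hypothetical test double: runs handlers inline and records emitted events."""

    def __init__(self) -> None:
        self.handlers: Dict[type, List[Callable[[Any], None]]] = {}
        self.emitted: List[Any] = []

    def connect(self, event_type: type, handler: Callable[[Any], None],
                is_async: bool = False) -> None:
        self.handlers.setdefault(event_type, []).append(handler)

    def emit(self, event: Any) -> None:
        self.emitted.append(event)
        for handler in self.handlers.get(type(event), []):
            handler(event)


bus = RecordingBus()
db = Database(bus, {"a": "spam and eggs", "b": "ham"})
bus.emit(SearchEvent("spam"))
bus.emit(UpdateEvent("b", "spam again"))
bus.emit(FetchEvent("b"))
print(bus.emitted)
```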

The events

Shown here is the template of an event. Using classes as the event structure is helpful because it allows routing based on event type, but any object, such as a dictionary (hashmap), that carries a common identifier and a payload will work as an event, as long as it is used consistently. namedtuple is used here simply because it provides the boilerplate for instantiating an object. Not all event classes are shown here; the others are created the same way as “SearchEvent”.
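A sketch of both styles, assuming routing by exact class for namedtuples and by a "type" key for dictionaries; ResultSelectedEvent, make_event, and route_key are hypothetical names.

```python
from collections import namedtuple
from typing import Any, Dict

# Class-based events: the class itself is the routing key, the fields are the payload.
SearchEvent = namedtuple("SearchEvent", ["query"])
ResultSelectedEvent = namedtuple("ResultSelectedEvent", ["result_id"])  # hypothetical


def make_event(kind: str, **payload: Any) -> Dict[str, Any]:
    """Hypothetical dictionary-based alternative: a "type" entry carries the identifier."""
    return {"type": kind, **payload}


def route_key(event: Any) -> Any:
    """Hypothetical helper: the key a bus would use to look up handlers."""
    return event["type"] if isinstance(event, dict) else type(event)


by_class = SearchEvent(query="spam")
by_dict = make_event("search", query="spam")
print(route_key(by_class).__name__, route_key(by_dict))  # SearchEvent search

# namedtuples compare like plain tuples, so route on type, never on equality:
print(SearchEvent("x") == ResultSelectedEvent("x"))  # True
```

The last line is a reason to route on type(event) rather than on equality: two different namedtuple event classes with the same field values compare equal.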

Caveats and Considerations

The event bus above was coded as simply as possible, which is acceptable as long as the following refinements are unnecessary; a production system would likely need many of them. Such refinements include managing object lifecycle when connecting methods, filtering events based on their properties, prioritizing handlers and halting the handling sequence early, reporting the status of asynchronous events, and reporting errors that occur on the event bus. The first three are implemented in the Python encore.events library, and the reader is directed there for a working example.

Object lifecycle matters if the objects whose methods are connected to the event bus are expected to be deleted at some point, such as when a window closes. Above, a hard reference to the method is kept, preventing the object from being garbage collected. To allow garbage collection, a weak reference to the object should be kept instead, and the method should be bound each time an event is handled and discarded afterwards. Checking how your target language handles references will help ensure proper object lifecycle in your implementation.
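In Python, weakref.WeakMethod implements exactly this approach: it holds weak references to the object and the function, and binds a fresh method each time it is dereferenced. A minimal sketch of a handler list built on it follows; WeakHandlerList and ResultWindow are hypothetical names, and this is not the encore.events implementation.

```python
import gc
import weakref
from typing import Any, Callable, List


class WeakHandlerList:
    """Hypothetical helper: holds bound methods weakly, plain functions strongly."""

    def __init__(self) -> None:
        self._refs: List[Callable[[], Any]] = []

    def append(self, handler: Callable[[Any], None]) -> None:
        if hasattr(handler, "__self__") and hasattr(handler, "__func__"):
            # WeakMethod keeps only weak references to the object and its
            # function, and re-binds the method each time it is called.
            self._refs.append(weakref.WeakMethod(handler))
        else:
            self._refs.append(lambda h=handler: h)  # same call signature, strong ref

    def __call__(self, event: Any) -> None:
        alive = []
        for ref in self._refs:
            handler = ref()  # a freshly bound method, or None if the object is gone
            if handler is not None:
                handler(event)
                alive.append(ref)
        self._refs = alive  # forget handlers whose objects were collected

    def __len__(self) -> int:
        return len(self._refs)


class ResultWindow:
    """Hypothetical window whose lifetime should not be extended by the bus."""

    def __init__(self, log: List[Any]) -> None:
        self.log = log

    def on_result(self, event: Any) -> None:
        self.log.append(event)


log: List[Any] = []
handlers = WeakHandlerList()
window = ResultWindow(log)
handlers.append(window.on_result)
handlers("first")
del window    # e.g. the window was closed
gc.collect()  # not needed in CPython (refcounting), but harmless elsewhere
handlers("second")
print(log, len(handlers))  # ['first'] 0
```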

Event filtering can prevent a proliferation of event classes and lets handlers focus only on events with the attributes they are interested in. The emitting code then needs only one event class and can add attributes as necessary. This is also helpful when sending out status events (discussed below), since handlers can subscribe only to the statuses they care about. Of course, filtering is unnecessary if you are willing to tolerate many classes.
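A sketch of attribute-based filtering, assuming a hypothetical where= predicate passed at connect() time; libraries such as encore.events expose their own filtering API, which this does not reproduce.

```python
from collections import defaultdict, namedtuple
from typing import Any, Callable, DefaultDict, List, Optional, Tuple

# One event class covers every status; attributes distinguish them.
StatusEvent = namedtuple("StatusEvent", ["source", "state"])  # hypothetical

Predicate = Optional[Callable[[Any], bool]]


class FilteringEventBus:
    """Hypothetical sketch: a handler may carry a predicate the event must satisfy."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[type, List[Tuple[Callable[[Any], None], Predicate]]] = \
            defaultdict(list)

    def connect(self, event_type: type, handler: Callable[[Any], None],
                where: Predicate = None) -> None:
        self._handlers[event_type].append((handler, where))

    def emit(self, event: Any) -> None:
        for handler, where in self._handlers.get(type(event), []):
            if where is None or where(event):
                handler(event)


bus = FilteringEventBus()
failures: List[StatusEvent] = []
db_events: List[StatusEvent] = []
bus.connect(StatusEvent, failures.append, where=lambda e: e.state == "failed")
bus.connect(StatusEvent, db_events.append, where=lambda e: e.source == "db")
for event in (StatusEvent("db", "executing"),
              StatusEvent("net", "failed"),
              StatusEvent("db", "failed")):
    bus.emit(event)
print(failures)
print(db_events)
```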

Prioritizing handlers is another form of filtering where multiple handlers are registered for a given event, but the handlers are called in order of priority, each deciding whether or not it can handle the event. The first handler that can handle the event marks it as handled, and no further handlers are called. This is an example of the chain of responsibility pattern.
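A sketch of prioritized handlers as a chain of responsibility, assuming the hypothetical convention that a handler returns True once it has handled the event. Higher priorities run first, and ties keep registration order.

```python
from typing import Any, Callable, List, Tuple

# Convention (assumed): a handler returns True once it has handled the event.
Handler = Callable[[Any], bool]


class PriorityChain:
    """Hypothetical prioritized handler list for a single event type."""

    def __init__(self) -> None:
        self._entries: List[Tuple[int, int, Handler]] = []
        self._counter = 0  # registration order, used to break priority ties

    def connect(self, handler: Handler, priority: int = 0) -> None:
        self._entries.append((-priority, self._counter, handler))
        self._counter += 1
        # Sort on (negated priority, order) only, so handlers are never compared.
        self._entries.sort(key=lambda entry: entry[:2])

    def emit(self, event: Any) -> bool:
        for _, _, handler in self._entries:
            if handler(event):
                return True  # handled: the rest of the chain is skipped
        return False


calls: List[str] = []


def open_image(path: str) -> bool:
    calls.append("image")
    return path.endswith(".png")


def open_text(path: str) -> bool:
    calls.append("text")
    return path.endswith(".txt")


def open_fallback(path: str) -> bool:
    calls.append("fallback")
    return True


chain = PriorityChain()
chain.connect(open_fallback, priority=-10)
chain.connect(open_text, priority=5)
chain.connect(open_image, priority=10)
chain.emit("notes.txt")
print(calls)  # ['image', 'text']
```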

Reporting on asynchronous events is useful when the status of an event needs to be displayed on a dashboard, for example whether a data query is queued or already executing. Such states include waiting (in case the thread pool is full and can’t handle the request immediately), executing, failed, and completed. This level of reporting typically requires wrapping the event handler in a runner that is submitted to the thread pool and reports status before and after execution. An example of this type of runner is found in the traits_futures Python library.
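A sketch of such a runner using only concurrent.futures, not the traits_futures API: submit_with_status (a hypothetical name) reports "waiting" when the task is submitted, then "executing", then "completed" or "failed" from inside the worker thread. In an event bus, report would most likely emit a status event through the thread-safe emit().

```python
import threading
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Any, Callable, List, Tuple


def submit_with_status(pool: ThreadPoolExecutor, handler: Callable[[Any], Any],
                       event: Any, report: Callable[[Any, str], None]) -> Future:
    """Hypothetical runner: wraps handler so its status is reported around execution."""
    report(event, "waiting")  # submitted; may sit in the queue if all workers are busy

    def runner() -> Any:
        report(event, "executing")
        try:
            result = handler(event)
        except Exception:
            report(event, "failed")
            raise  # the exception is still stored on the future
        report(event, "completed")
        return result

    return pool.submit(runner)


statuses: List[Tuple[Any, str]] = []
lock = threading.Lock()


def report(event: Any, state: str) -> None:
    with lock:
        statuses.append((event, state))


def run_query(query: str) -> str:
    """Hypothetical handler that fails for one particular query."""
    if query == "bad":
        raise ValueError("query failed")
    return query.upper()


with ThreadPoolExecutor(max_workers=2) as pool:
    good = submit_with_status(pool, run_query, "good", report)
    bad = submit_with_status(pool, run_query, "bad", report)

print(good.result(), type(bad.exception()).__name__)  # GOOD ValueError
print([state for event, state in statuses if event == "bad"])  # ['waiting', 'executing', 'failed']
```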

Reporting failures in both synchronous and asynchronous handlers can be helpful to the user as well as for debugging. One way to do this is to specify, when a handler is registered, a failure event that will be emitted if that handler fails. Another is to embed the originating event in a common failure event and expose the embedded event’s type, so that handlers can filter on it and receive only the failures for the original events they are interested in.
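A sketch of the second approach, synchronous only for brevity: any exception raised by a handler is wrapped in a common HandlerFailedEvent (a hypothetical name) that embeds the original event, and interested handlers filter on the embedded event's type. For asynchronous handlers, the same try/except would go inside the runner submitted to the thread pool.

```python
from collections import defaultdict, namedtuple
from typing import Any, Callable, DefaultDict, List

SearchEvent = namedtuple("SearchEvent", ["query"])
# Hypothetical common failure event that embeds the event whose handler failed.
HandlerFailedEvent = namedtuple("HandlerFailedEvent", ["original", "handler_name", "error"])


class ReportingEventBus:
    """Hypothetical synchronous sketch: a handler exception becomes a HandlerFailedEvent."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[type, List[Callable[[Any], None]]] = defaultdict(list)

    def connect(self, event_type: type, handler: Callable[[Any], None]) -> None:
        self._handlers[event_type].append(handler)

    def emit(self, event: Any) -> None:
        for handler in list(self._handlers.get(type(event), [])):
            try:
                handler(event)
            except Exception as exc:
                if isinstance(event, HandlerFailedEvent):
                    raise  # a failing failure handler would otherwise recurse forever
                name = getattr(handler, "__name__", repr(handler))
                self.emit(HandlerFailedEvent(event, name, exc))


bus = ReportingEventBus()
search_errors: List[tuple] = []


def broken_search(event: SearchEvent) -> None:
    raise RuntimeError("database offline")


def show_search_errors(failure: HandlerFailedEvent) -> None:
    # Filter on the embedded event's type: only search failures matter here.
    if isinstance(failure.original, SearchEvent):
        search_errors.append((failure.original.query, failure.handler_name, str(failure.error)))


bus.connect(SearchEvent, broken_search)
bus.connect(HandlerFailedEvent, show_search_errors)
bus.emit(SearchEvent("spam"))
print(search_errors)  # [('spam', 'broken_search', 'database offline')]
```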

Summary

In this article, we presented a way to ensure that blocking tasks are handled asynchronously while keeping components loosely coupled by having them communicate through an event bus. This combination makes concurrency in an application easier to reason about, and it improves maintainability and extensibility because the concurrency model doesn’t have to be rewritten throughout the app: simply registering a handler with “is_async=True” is enough. Here, a desktop application was covered, but the same ideas could apply to server applications, especially when CPU-bound tasks rule out async/await-style concurrency. Another extension of the event bus is dispatching tasks to a separate server, such as one running Dask, and using an external event broker to ferry the computational results back to the client-facing server and on to the client. See “Architectural Patterns in Python” for an example with Redis. Though two separate patterns were introduced here, a concurrency model and an event architecture, the hope is that they will inspire extensions that make your own applications responsive and easy to maintain and extend.

Crafting an Asynchronous Event Bus was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.

Christopher Angell | Sciencx (2022-02-28T14:14:21+00:00) Crafting an Asynchronous Event Bus. Retrieved from https://www.scien.cx/2022/02/28/crafting-an-asynchronous-event-bus/
