Some time ago I received a great assignment from a client I had worked with before. They needed an image server for their high-traffic newspaper website and mobile applications. The service had to crop, resize and recompress JPGs, PNGs and GIFs in real time and on demand. In this article I describe how I built one of the fastest image servers and how I achieved this on a “slow” Node.js server.

I will describe some of the problems I encountered and the design decisions I had to make, but let’s start with a general overview of the system.

The newspaper editors would use their content management system to upload source images to an S3 bucket. These images were sometimes as big as 14 MB and on occasion, they would even try to upload PDF files as an image, so there was no guarantee that the files would be in a useable format.

The newspaper’s website and mobile apps request scaled and cropped versions of the originals from the image server over HTTP. The image server then downloads the source file from S3, determines the file type, crops and resizes it and returns it according to spec. Simple, right? Surely we could just horizontally scale up the number of servers and Bob would be our uncle. Well, that is what I thought, but I was wrong. We got nowhere near the performance needed to resize images in real time. I had to change my approach and entered a whole new world of performance optimisations.

I had been comparing various image resize libraries on NPM and running microbenchmarks on them. Most of them used some form of optimised C(++) code to resize the image. In theory they were fast, but we couldn’t get any reasonable performance with these libraries.

The first problem was that it simply took too long for Node.js to start a new image resize process for every request. The most naive implementations used the command-line version of ImageMagick, a popular and fast image processing library. Starting the process, providing it with data and waiting for it to return took quite some time. Packages that used the Node.js FFI (foreign function interface) were doing a bit better, but the FFI overhead was still quite large.

The second problem was that it took relatively long to download an image from S3. This was the second bottleneck to work on.

Finally, we had to know as soon as possible what the image type was and the original dimensions. Requests with invalid crop values or unsupported images had to be discarded so that the memory could be freed up. We had to read the image metadata from the binary file headers while downloading the file.

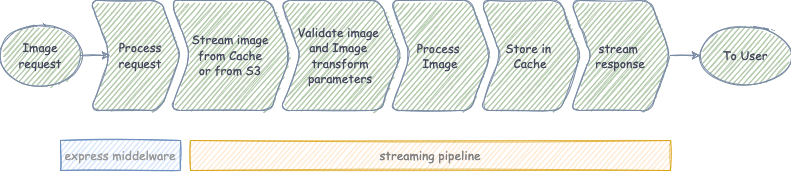

Working with Dario Mannu, we came to the realisation that the best approach was a stream pipeline to download and process the images, while at the same time caching both the input and the output (the modified image).

When the request and response sizes for your server application are relatively small, a middleware-based pipeline like that of the Express framework is a good solution. But when the application has to deal with images that might be 14MB in size, a middleware-based system can become problematic. The application only needs the first few hundred kilobytes of the image to extract metadata, but in a promise-based system we have to wait until the image is fully downloaded before we can examine it. This is not what we want. We want to start processing as soon as we receive the first bytes, and when we see a problem we need to fail fast, abort any further data streams and send a relevant status code to the client. For a successful (200) response we also want to push data to the client as soon as possible, instead of writing the whole result in one go.

Node.js streams are designed for this kind of data pipelining. They allow you to start reading data as soon as it comes in, and when the application can’t handle it quickly enough, backpressure slows the source down to keep memory usage in check. Streams can be forked or shared between different consumers, and ended early when we want to fail an operation.
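To make the fail-fast idea concrete, here is a minimal sketch that uses only Node's built-in `stream` module. It is not the code from our server: `looksLikeImage`, `MagicByteGate` and `gate` are names made up for this example, and checking only the first four bytes is a simplifying assumption. The transform buffers just enough data to recognise the file type and then either passes everything through or fails the whole pipeline.

```javascript
const { Transform, Writable, Readable, pipeline } = require('stream');

// Hypothetical helper: recognise JPEG, PNG and GIF by their leading magic bytes.
function looksLikeImage(head) {
  const isJpeg = head[0] === 0xff && head[1] === 0xd8;
  const isPng = head.subarray(0, 4).equals(Buffer.from([0x89, 0x50, 0x4e, 0x47]));
  const isGif = head.toString('latin1', 0, 3) === 'GIF';
  return isJpeg || isPng || isGif;
}

// Hypothetical transform: inspects the first 4 bytes, then passes data through untouched.
class MagicByteGate extends Transform {
  constructor() {
    super();
    this.head = Buffer.alloc(0);
    this.checked = false;
  }
  _transform(chunk, _encoding, done) {
    if (this.checked) return done(null, chunk);
    this.head = Buffer.concat([this.head, chunk]);
    if (this.head.length < 4) return done(); // not enough bytes yet, keep buffering
    this.checked = true;
    if (!looksLikeImage(this.head)) return done(new Error('unsupported file type'));
    done(null, this.head); // release everything buffered so far
  }
  _flush(done) {
    // A source that ends before 4 bytes arrived cannot be a valid image.
    done(this.checked ? null : new Error('unsupported file type'));
  }
}

// Runs a source through the gate and collects whatever comes out the other end.
function gate(source, onDone) {
  const chunks = [];
  const sink = new Writable({
    write(chunk, _encoding, done) { chunks.push(chunk); done(); }
  });
  // pipeline() destroys every stream in the chain as soon as one of them fails.
  pipeline(source, new MagicByteGate(), sink, (err) => onDone(err, Buffer.concat(chunks)));
}

// Usage: a PDF is rejected on its first chunk and the pipeline is torn down.
gate(Readable.from([Buffer.from('%PDF-1.4'), Buffer.from('...more data...')]), (err) => {
  console.log(err.message); // unsupported file type
});
```

In a real handler the sink would be the HTTP response and the error callback would translate the failure into a status code.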

The data stream pipeline ended up something like the following:

- Incoming request for an image with transformation parameters

- Sanitise request in middleware and proceed to the route handler

- Load source image

- Either stream from memory cache or request image from S3 as a stream

- Pipe image into memory cache if not exists

- Read the initial binary data from the image header in JavaScript to find out the image type and dimensions

- Pipe image data to the ImageMagick service worker pool

- Pipe the image into the frontend cache

- Pipe the image to the response and to the client.

I will go through these steps in a bit more detail and explain the lengths we went to in order to make this application quite special.

The first steps are simple: we check the request in our middleware to validate the parameters. The handler then retrieves the image from S3 using s3.getObject('objectName'), which gives us a stream we can pipe or read from. The code below shows these first few steps. If AWS returns an error, we handle it in the error-handling middleware. When the AWS headers arrive, we copy the content type to our response to the client.

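    var stream = s3.getObject(req.params.id)
      // Forward S3 errors to the error-handling middleware
      .on('error', next)
      // Copy the content type reported by S3 onto our own response
      .on('httpHeaders', function (headers) {
        res.set('content-type', headers['content-type']);
      });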

This part was pretty simple, but the next step will become a bit more complicated. We parse the parameters from the request object. Options are crop, resize and quality.
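As an illustration of the parsing step, here is a minimal sketch. The query format (`?crop=x,y,width,height&resize=800&quality=80`), the function name `parseImageOptions` and the shape of the returned object are all assumptions made for this example, not the format our server actually used.

```javascript
// Hypothetical query format: ?crop=x,y,width,height&resize=800&quality=80
// Returns a plain options object or throws a RangeError for malformed values.
function parseImageOptions(query) {
  const options = {};

  if (query.crop !== undefined) {
    const parts = String(query.crop).split(',').map(Number);
    const wellFormed = parts.length === 4 &&
      parts.every((n) => Number.isInteger(n) && n >= 0);
    if (!wellFormed) throw new RangeError('crop must be four non-negative integers');
    const [x, y, width, height] = parts;
    if (width === 0 || height === 0) throw new RangeError('crop area must not be empty');
    options.crop = { x, y, width, height };
  }

  if (query.resize !== undefined) {
    const width = Number(query.resize);
    if (!Number.isInteger(width) || width < 1) {
      throw new RangeError('resize must be a positive integer');
    }
    options.resize = { width };
  }

  if (query.quality !== undefined) {
    const quality = Number(query.quality);
    if (!Number.isInteger(quality) || quality < 1 || quality > 100) {
      throw new RangeError('quality must be an integer between 1 and 100');
    }
    options.quality = quality;
  }

  return options;
}
```

In an Express handler this could be called as `parseImageOptions(req.query)`, with a thrown `RangeError` mapped to a 400 response. At this point we only know that the values are well formed, not that they fit the image.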

We want to check whether these options are valid for the image we are dealing with. We do not want to scale the image up, and we don’t support, for example, a crop that starts at an X position beyond the width of the image.

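    stream.pipe(imageSizeChecker(imageOptions))
      .on('error', next)
      .on('nonValidImage', function (err) {
        // The options do not fit this image: hand over to the error middleware
        next(err);
      })
      .on('validImage', function (imageData) {
        // ... the rest of the pipeline continues from here
      });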

We pipe our image bytes to the image-size-checker library, which uses image-size. We don’t wait until we have the full image in memory, but check progressively using the partial image buffer. This works because most image formats start with a binary header that contains the metadata.
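To show why a partial buffer is enough, here is a small hand-rolled sketch. It is a stand-in for illustration and does not reproduce the API of image-size or of our checker. `readDimensions` and `checkOptions` are hypothetical names, only PNG and GIF are handled (JPEG needs a scan through its segments, which is left out here), and the exact validation rules, including validating the resize against the cropped width, are assumptions.

```javascript
const PNG_SIGNATURE = Buffer.from([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a]);

// Reads type and dimensions from the first bytes of a file.
// Returns null when the bytes seen so far are not (yet) recognisable.
function readDimensions(buf) {
  // PNG: 8-byte signature, then the IHDR chunk with width and height as 32-bit big-endian.
  if (buf.length >= 24 &&
      buf.subarray(0, 8).equals(PNG_SIGNATURE) &&
      buf.toString('latin1', 12, 16) === 'IHDR') {
    return { type: 'png', width: buf.readUInt32BE(16), height: buf.readUInt32BE(20) };
  }
  // GIF: "GIF87a" or "GIF89a", then width and height as 16-bit little-endian.
  if (buf.length >= 10 && /^GIF8[79]a$/.test(buf.toString('latin1', 0, 6))) {
    return { type: 'gif', width: buf.readUInt16LE(6), height: buf.readUInt16LE(8) };
  }
  return null;
}

// Returns a reason string when the options do not fit the image, otherwise null.
// Assumption: the crop is applied first and the resize works on the cropped area.
function checkOptions(image, options) {
  const crop = options.crop;
  if (crop && (crop.x + crop.width > image.width || crop.y + crop.height > image.height)) {
    return 'crop area falls outside the image';
  }
  const sourceWidth = crop ? crop.width : image.width;
  if (options.resize && options.resize.width > sourceWidth) {
    return 'upscaling is not supported';
  }
  return null;
}
```

A PNG reveals its dimensions after 24 bytes and a GIF after 10, so an invalid request can be rejected long before a 14MB download has finished.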

We decided against using a lot of elastic compute units. With an elastic setup, smaller servers are added and removed as the load changes. The problem is that an elastic setup needs a separate caching solution, like Redis. In our case that won’t work, since the real problem is the amount of data and not the speed of external services. An in-memory cache isn’t a viable solution for an elastic system either: smaller servers mean less memory for caching and more cache misses. The obvious solution is to use fewer but bigger servers with more memory and more processing power.

As an example, we could use an AWS instance with 8GB of memory and set aside 4GB for an in-memory cache of raw images. If the images are 4MB on average, that allows us to cache about 1000 images in an LRU cache. In practice, this nearly eliminates redundant S3 requests. But what if two requests for the same image come in at the same time, I hear you think? While a download is in progress we keep a reference to the S3 stream in the cache, so that a second request can simply fork and process that stream. Yes, streams are amazing for this use case.
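Sharing one in-progress download between several requests can be sketched roughly as follows. This is an illustration under assumptions rather than our production code: `SharedDownload` and `fork` are hypothetical names, and the sketch replays the chunks that have already arrived to late readers, which means it keeps the whole image in memory (acceptable here, because it ends up in the cache anyway) and ignores backpressure from slow readers.

```javascript
const { PassThrough } = require('stream');

// Hypothetical helper: lets any number of readers consume one source stream,
// even when they join after the download has already started or finished.
class SharedDownload {
  constructor(source) {
    this.chunks = [];       // everything received so far, kept for late readers
    this.ended = false;
    this.error = null;
    this.readers = new Set();

    source.on('data', (chunk) => {
      this.chunks.push(chunk);
      for (const reader of this.readers) reader.write(chunk);
    });
    source.on('end', () => {
      this.ended = true;
      for (const reader of this.readers) reader.end();
      this.readers.clear();
    });
    source.on('error', (err) => {
      this.error = err;
      for (const reader of this.readers) reader.destroy(err);
      this.readers.clear();
    });
  }

  // Returns a fresh readable stream that yields the complete image.
  fork() {
    const reader = new PassThrough();
    if (this.error) {
      reader.destroy(this.error);
      return reader;
    }
    for (const chunk of this.chunks) reader.write(chunk); // replay what already arrived
    if (this.ended) reader.end();
    else this.readers.add(reader);
    return reader;
  }
}
```

The first request for a key would create the `SharedDownload` and store it under that key. Every concurrent request for the same key then calls `fork()` instead of going to S3 again.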

So back to the problem of the image resizing and cropping. There was no good off-the-shelf Node.js plugin to resize images. Based on inspiration from a different Node.js package we built a pool of long-running C processes to do the hard work. Per Node.js server we can spin up multiple C processes to do image manipulation. A few iterations of benchmarking got us the ideal pool size for the specific server instance we were using.

The image processing library used the ImageMagick C API to scale, crop and compress images. It was my first project in C and if the documentation of the library had been a bit better, the project could have been delivered faster.

Our C program runs continuously and listens for image data and processing instructions on stdin. Resized images are written to stdout and handled by the Node.js process. I won’t go further into the C programming here, as it is outside the scope of this article. It is enough to say that this process has very low overhead and that multiple workers can run in parallel.
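The Node.js side of such a pool can be sketched as follows. Everything specific in it is an assumption made for illustration: the framing (a 4-byte big-endian length followed by the payload), the round-robin scheduling and the names `ImageWorker` and `WorkerPool` are invented, and the worker is a tiny JavaScript stand-in that simply reverses the bytes it receives, so that the sketch runs without the C program.

```javascript
const { spawn } = require('child_process');

// Stand-in worker so the sketch runs anywhere. It reads frames of
// [4-byte length][payload] on stdin and answers each with a frame on stdout.
const WORKER_SOURCE = `
  let pending = Buffer.alloc(0);
  process.stdin.on('data', (chunk) => {
    pending = Buffer.concat([pending, chunk]);
    while (pending.length >= 4 && pending.length >= 4 + pending.readUInt32BE(0)) {
      const size = pending.readUInt32BE(0);
      const result = Buffer.from(pending.subarray(4, 4 + size)).reverse();
      pending = pending.subarray(4 + size);
      const header = Buffer.alloc(4);
      header.writeUInt32BE(result.length, 0);
      process.stdout.write(Buffer.concat([header, result]));
    }
  });
`;

// One long-running child process. Jobs are answered in the order they were sent.
class ImageWorker {
  constructor() {
    this.child = spawn(process.execPath, ['-e', WORKER_SOURCE], {
      stdio: ['pipe', 'pipe', 'inherit']
    });
    this.pending = Buffer.alloc(0);
    this.callbacks = []; // one callback per job in flight, oldest first
    this.child.stdout.on('data', (chunk) => this.onData(chunk));
  }
  onData(chunk) {
    this.pending = Buffer.concat([this.pending, chunk]);
    while (this.pending.length >= 4 &&
           this.pending.length >= 4 + this.pending.readUInt32BE(0)) {
      const size = this.pending.readUInt32BE(0);
      const result = this.pending.subarray(4, 4 + size);
      this.pending = this.pending.subarray(4 + size);
      this.callbacks.shift()(null, result);
    }
  }
  run(payload, callback) {
    const header = Buffer.alloc(4);
    header.writeUInt32BE(payload.length, 0);
    this.callbacks.push(callback);
    this.child.stdin.write(Buffer.concat([header, payload]));
  }
}

// A fixed number of workers that are started once and reused for every request.
class WorkerPool {
  constructor(size) {
    this.workers = Array.from({ length: size }, () => new ImageWorker());
    this.next = 0;
  }
  run(payload, callback) {
    // Round-robin for simplicity; picking the least busy worker is another option.
    const worker = this.workers[this.next];
    this.next = (this.next + 1) % this.workers.length;
    worker.run(payload, callback);
  }
  close() {
    // Closing stdin lets each worker exit on its own.
    for (const worker of this.workers) worker.child.stdin.end();
  }
}
```

The point of the design is that the cost of starting a process is paid once per worker instead of once per request. The sketch leaves out what a production pool needs on top: restarting crashed workers, timeouts, and passing the processing instructions along with the image data.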

This image server does operate behind a CDN, but we thought it worthwhile to add a second in-memory cache for resized images. Not every client goes through the CDN; internal requests, for example, do not.
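A cache for resized images has to be bounded by memory rather than by the number of entries, and its key has to include the transformation. The following is a minimal sketch of that idea, not our actual implementation: `ByteLru` and `cacheKey` are hypothetical names, and the key format is an assumption.

```javascript
// Least-recently-used cache bounded by total bytes instead of entry count.
// A Map iterates in insertion order, so its first key is always the oldest.
class ByteLru {
  constructor(maxBytes) {
    this.maxBytes = maxBytes;
    this.bytes = 0;
    this.map = new Map();
  }
  get(key) {
    const buf = this.map.get(key);
    if (buf === undefined) return undefined;
    this.map.delete(key); // re-insert to mark the entry as most recently used
    this.map.set(key, buf);
    return buf;
  }
  set(key, buf) {
    if (this.map.has(key)) {
      this.bytes -= this.map.get(key).length;
      this.map.delete(key);
    }
    this.map.set(key, buf);
    this.bytes += buf.length;
    // Evict the oldest entries until we are back within budget.
    for (const oldest of this.map.keys()) {
      if (this.bytes <= this.maxBytes) break;
      this.bytes -= this.map.get(oldest).length;
      this.map.delete(oldest);
    }
  }
}

// Builds one key per image and transformation. Top-level option names are
// sorted so that the same options in a different order share one entry.
function cacheKey(id, options) {
  const parts = Object.keys(options).sort()
    .map((name) => `${name}=${JSON.stringify(options[name])}`);
  return `${id}?${parts.join('&')}`;
}
```

The budget is given in bytes, so a 4GB cache like the one for the source images would be `new ByteLru(4 * 1024 ** 3)`. A resized image is stored with `cache.set(cacheKey(id, options), buffer)` once the worker has returned it.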

The finished product.

The end product was a server that can handle a large number of requests in a short time, for example when a new mobile newspaper bundle is composed. The server often manages to resize a JPEG image on demand in under 500ms. Of course, the exact times vary with the size and complexity of the input image, as complex images take more time to encode.

Some examples:

- A complex, textured image of 3300×1900 was resized to 200px wide in 650ms.

- The same image resized to 1250px wide took only 590ms.

- A simpler 1600×900 image without much texture was resized to 800px in 115ms.

- Any subsequent requests come straight from the cache and can be served in around 35ms.

The service can change the compression quality, crop and resize images. When the resize ratio is suitable it will resample for even faster results, because no re-compression is necessary. The C code is highly optimised and could only be improved further by using GPU re-compression, which would drastically change the server requirements.

AWS consultants have investigated whether they could provide a faster or more cost-effective solution, but as of now this image server is still in use after several years, something I am quite proud of.

If you made it all the way here, then I would like to thank you for your perseverance. This was one of the most fun projects that I have worked on and one of the most successful. Maybe I would take a different approach if I did this now, but it met all the requirements that I had at the time, and the proof is that it is still being used.

How I Wrote a Lightning-Fast Image Server in Node.js was originally published in Bits and Pieces on Medium, where people are continuing the conversation by highlighting and responding to this story.

Laurent Zuijdwijk | Sciencx (2022-07-27T09:05:52+00:00) How I Wrote a Lightning-Fast Image Server in Node.js. Retrieved from https://www.scien.cx/2022/07/27/how-i-wrote-a-lightning-fast-image-server-in-node-js/
