This content originally appeared on Level Up Coding - Medium and was authored by John Olatubosun

With the rise of LLMs (Large Language Models), it has become easier for developers to build AI features into their applications. This new wave has led to many innovations in the AI space, especially given that many of these models are open-source.

A common hurdle when building an application with an LLM is that the model is limited to the information it was trained on. However, with vector embeddings and a RAG approach, we can enable the LLM to use specific information from our own data source when prompted. And guess what, that is what this article is about 😌. Put on your AI hat and let's begin!

It would help if you had a basic understanding of linear algebra to follow along easily in this article. However, I will try to explain things as simply as I can.

Ohh, wait… I forgot to explain what an LLM is.

What is an LLM?

An LLM, which stands for Large Language Model, is an artificial intelligence model that can understand and generate human-like text. LLMs can be used for a wide variety of tasks, from answering questions to generating summaries, depending on the data they were trained on.

Now, let's begin!

What is a Vector?

If you asked me in 2013/2014, I would have said it was an entertaining PC game 😂… Ahhh, fun times.

A vector is a mathematical entity that has both a magnitude and a direction. You can picture it as an arrow drawn from the origin to a point. It has one component for each dimension, and these components can be used to calculate the vector’s magnitude and direction.

For example, the vector [x, y] corresponds to a point on a 2-dimensional graph (2D because it has 2 components). Its magnitude is the distance of that point from the origin, and its direction can be described by the angle it forms with the x-axis. An example is [2, 5].
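To make the magnitude and direction of [2, 5] concrete, here is a minimal Python sketch. The variable names are my own choice for illustration, and it uses only the standard `math` module.

```python
import math

# The example vector from the text: x = 2, y = 5.
vector = [2, 5]

# Magnitude: straight-line distance from the origin, sqrt(x^2 + y^2).
magnitude = math.hypot(vector[0], vector[1])

# Direction: angle measured counter-clockwise from the positive x-axis.
angle_degrees = math.degrees(math.atan2(vector[1], vector[0]))

print(f"magnitude = {magnitude:.3f}")               # about 5.385
print(f"direction = {angle_degrees:.1f} degrees")   # about 68.2
```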

In computers, vectors are represented as one-dimensional arrays of numbers, with each number corresponding to a component in a particular dimension.

    vector_2d = [2, 5]

    vector_nd = [1.2942, 9.0294, -3.3922, 4.2921, ...]

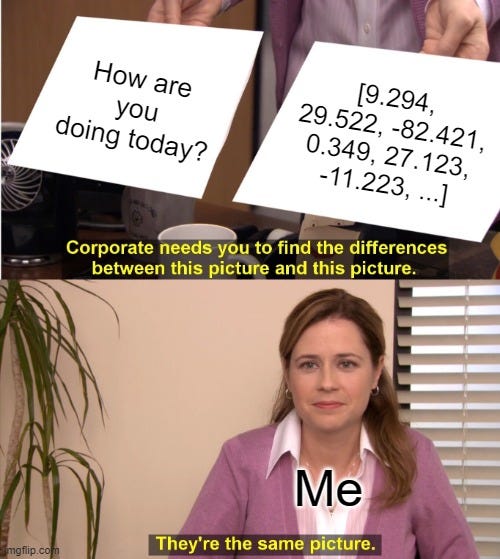

It is important to know that vectors can have more than 2 dimensions. In machine learning and artificial intelligence, vectors can have thousands or even hundreds of thousands of dimensions.

Embedding and Embedding Models

In general, embedding is the process of transforming data into a more compact and useful format, typically into a vector (an array of numbers in computing).

The main goal of embedding is to convert data (words, sentences, images, and even categorical data) into numerical vectors while preserving its meaning and relationships, so that computational models can easily process and analyze it.

Take a simulated embedding of the words cat, dog, and spoon: the vectors generated for cat and dog are closer together than the vector generated for spoon. This is because both a cat and a dog are animals and also pets, while a spoon is a household utensil.
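Here is a small sketch of the cat, dog, and spoon comparison. The three vectors are numbers I made up by hand, not the output of any real embedding model, and the `distance` helper is hypothetical. It only shows what "closer together" means in practice.

```python
import math

# Hand-picked 3-dimensional vectors standing in for real embeddings.
# These numbers are invented for illustration only.
toy_embeddings = {
    "cat": [0.90, 0.80, 0.10],
    "dog": [0.85, 0.75, 0.20],
    "spoon": [0.10, 0.20, 0.95],
}


def distance(word_a, word_b):
    """Euclidean (straight-line) distance between two toy vectors."""
    return math.dist(toy_embeddings[word_a], toy_embeddings[word_b])


print(f"cat-dog:   {distance('cat', 'dog'):.3f}")
print(f"cat-spoon: {distance('cat', 'spoon'):.3f}")
print(f"dog-spoon: {distance('dog', 'spoon'):.3f}")
```

The cat-dog distance comes out far smaller than either distance to spoon, which is the pattern a real embedding model is trained to produce.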

An embedding model is a type of machine learning model that is designed to generate embeddings from given data. These models can capture meaningful relationships and patterns in the data (such as text, images, and categorical data), and convert them to vectors in a continuous vector space.

I’m sure you are wondering what a continuous vector space is. A continuous vector space is a mathematical space in which each point (or vector) can take on any value from a continuous range.

For example, in 2-dimensional space (an x-y graph), the continuous vector space includes all the possible points in the space, represented as pairs of real numbers [x,y]. This is important in embedding because it enables meaningful comparison between generated vectors (embeddings), as the vectors have the same number of components (array elements) and consist of only real numbers.

Vector Embeddings

A vector embedding is the representation of data such as words, sentences, or images, as vectors (arrays of numbers) in n-dimensional space.

The essence of vector embeddings is that, since all the vectors are in the same continuous vector space (in other words, they have the same number of dimensions), we can easily locate similar vectors.

An embedding model is used to convert complex data to vectors in a continuous vector space (vector embeddings), where similar vectors (data points) are close together.

There are many models that can be used to generate vector embeddings. Some models let you configure the number of dimensions used when generating the vector. Typically, the larger the number of dimensions, the more context and meaning can be represented. However, more dimensions also increase the storage and computation cost.
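To show what a configurable number of dimensions looks like, here is a toy sketch. Please note that `toy_embed` is a hypothetical function I wrote for this example, and it uses the hashing trick (counting words into hashed slots) rather than a trained model. It captures shared vocabulary, not meaning, so treat it purely as a stand-in for a real embedding model.

```python
import hashlib
import math


def toy_embed(text, dimensions=8):
    """Map text to a fixed-length vector with the hashing trick.

    This is NOT a trained embedding model: it only counts words,
    so it reflects shared vocabulary rather than meaning.
    """
    vector = [0.0] * dimensions
    for word in text.lower().split():
        # md5 gives the same slot for the same word on every run.
        digest = hashlib.md5(word.encode("utf-8")).hexdigest()
        vector[int(digest, 16) % dimensions] += 1.0
    # Scale to unit length so texts of different sizes are comparable.
    norm = math.sqrt(sum(value * value for value in vector))
    return [value / norm for value in vector] if norm else vector


small = toy_embed("the cat sat on the mat", dimensions=8)
large = toy_embed("the cat sat on the mat", dimensions=64)
print(len(small), len(large))  # 8 64
```

Whatever the input text, every vector produced with the same `dimensions` value has the same length, which is what makes the vectors comparable.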

Vector Database

As the name suggests, a vector database is a database for vectors 😌

It is a specialized type of database designed for working with vectors. It can store, manage, and search data that is stored as vectors.
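To get a feel for what "store and search" means, here is a tiny in-memory sketch. `ToyVectorStore` is a hypothetical class of my own, not the API of any real vector database, and the vectors are made up. Real databases use indexes to avoid comparing the query against every row, which this sketch does not attempt.

```python
import math


class ToyVectorStore:
    """A brute-force, in-memory stand-in for a vector database."""

    def __init__(self):
        self._rows = []  # each row is (vector, original content)

    def add(self, vector, content):
        self._rows.append((list(vector), content))

    def search(self, query_vector, top_k=1):
        """Return the top_k (distance, content) pairs, closest first."""
        scored = [
            (math.dist(query_vector, vector), content)
            for vector, content in self._rows
        ]
        scored.sort(key=lambda pair: pair[0])
        return scored[:top_k]


store = ToyVectorStore()
# Invented vectors; a real application would get these from an embedding model.
store.add([0.90, 0.80, 0.10], "Cats are popular pets.")
store.add([0.85, 0.75, 0.20], "Dogs are loyal companions.")
store.add([0.10, 0.20, 0.95], "A spoon is a kitchen utensil.")

results = store.search([0.15, 0.25, 0.90], top_k=1)
print(results[0][1])  # A spoon is a kitchen utensil.
```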

There are many options for vector databases, including Chroma, Pinecone, and pgvector (a PostgreSQL extension). I enjoy using pgvector on Supabase.

R.A.G

Commonly mistaken for the cotton fabric in your mother's kitchen, R.A.G stands for Retrieval Augmented Generation. Earlier, I mentioned that an LLM is limited to the data it was trained on. It cannot access up-to-date, real-time, or other external data. RAG solves this problem by taking a three-step approach.

Step 1 (Retrieval): When working with an LLM, an input prompt is given. In the retrieval phase of RAG, this prompt is analyzed, and based on its content, relevant information is retrieved from an external data source.

Step 2 (Augmentation): In this stage, the retrieved information is added to the prompt, giving it contextual information.

Step 3 (Generation): The updated prompt is then fed into an LLM, which uses it to generate a response.

Putting it all together, RAG is an approach that allows an LLM to work with external information, outside of what it was trained on. This process involves first retrieving information relevant to the initial user prompt and then augmenting this prompt with the fetched information before sending it to the LLM to generate a response.
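The three steps can be sketched roughly like this. Everything here is an assumption made for illustration: the function names, the sample documents, and the prompt wording are mine, `fake_llm` is a placeholder for a real model call, and retrieval uses simple word overlap so that the sketch runs without any external service.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(prompt, documents, top_k=2):
    """Step 1: pick the documents sharing the most words with the prompt."""
    prompt_words = tokenize(prompt)
    ranked = sorted(
        documents,
        key=lambda doc: len(prompt_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def augment(prompt, context_docs):
    """Step 2: add the retrieved text to the prompt as context."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {prompt}"


def fake_llm(prompt):
    """Hypothetical placeholder; swap in a real LLM call here."""
    return f"[model response to a {len(prompt)}-character prompt]"


def generate(augmented_prompt, llm):
    """Step 3: send the augmented prompt to the model."""
    return llm(augmented_prompt)


documents = [
    "Our store opens at 9am on weekdays.",
    "Refunds are processed within 5 business days.",
    "The store is closed on public holidays.",
]

question = "When does the store open?"
context_docs = retrieve(question, documents, top_k=2)
final_prompt = augment(question, context_docs)
print(final_prompt)
print(generate(final_prompt, fake_llm))
```

Keeping the three steps as separate functions makes it easy to replace the word-overlap retrieval with a different search later without touching the other two.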

RAG can be combined with vector embeddings. With this combination, a vector search is done in the retrieval stage using cosine similarity or Euclidean distance (cosine similarity measures the angle between two vectors, while Euclidean distance measures the straight-line distance between them; both let us determine which vectors are closest). In the retrieval phase, the initial prompt is converted to a vector using the same embedding model and compared with the vectors in the vector database to find the closest matches. The original content of the retrieved vectors is then returned to be used in the subsequent stages.
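Here is a minimal sketch of the two measures, written with the standard library only. The example vectors and variable names are made up. It also shows that the two measures do not always agree on which vector is "closest".

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between a and b (1.0 means same direction).

    Assumes neither vector is all zeros.
    """
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def euclidean_distance(a, b):
    """Straight-line distance between a and b (0.0 means identical)."""
    return math.dist(a, b)


query = [1.0, 2.0]
same_direction = [2.0, 4.0]  # points the same way as query, twice as long
nearby = [1.5, 1.5]          # close to query, but at a different angle

for name, vector in [("same_direction", same_direction), ("nearby", nearby)]:
    print(
        f"{name}: cosine={cosine_similarity(query, vector):.3f}, "
        f"euclidean={euclidean_distance(query, vector):.3f}"
    )
```

Cosine similarity prefers `same_direction` because it ignores length, while Euclidean distance prefers `nearby`. Which one fits best depends on the embedding model, so it is worth checking what the model's documentation recommends.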

Conclusion

In conclusion, with the use of vectors and a RAG approach, anyone can build complex AI-powered applications. I would love to hear about what you are building in the comments.

I hope you got some value from this, and as always, please leave a like and a comment. Also, subscribe to my YouTube channel 👉 https://www.youtube.com/@tolu-john

If it’s not too late, Happy New Year to you 🎉🎉… I wish you a year replete with blessings.

See you in the next one 🫡

Vector Embeddings and RAG Systems was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.


John Olatubosun | Sciencx (2025-01-15T15:14:12+00:00) Vector Embeddings and RAG Systems. Retrieved from https://www.scien.cx/2025/01/15/vector-embeddings-and-rag-systems/
