This content originally appeared on HackerNoon and was authored by Reinforcement Technology Advancements

Table of Links

3 Model and 3.1 Associative memories

6 Empirical Results and 6.1 Empirical evaluation of the radius

6.3 Training Vanilla Transformers

7 Conclusion and Acknowledgments

Appendix B. Some Properties of the Energy Functions

Appendix C. Deferred Proofs from Section 5

Appendix D. Transformer Details: Using GPT-2 as an Example

Appendix B. Some Properties of the Energy Functions

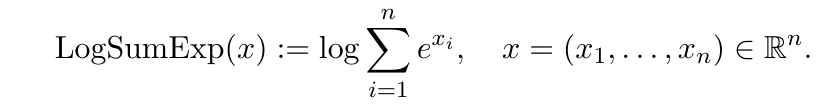

We introduce some useful properties of the LogSumExp function defined below. These properties are particularly useful because the softmax function, widely used in Transformer models, is the gradient of the LogSumExp function. As shown in (Grathwohl et al., 2019), LogSumExp corresponds to the energy function of a classifier.

\

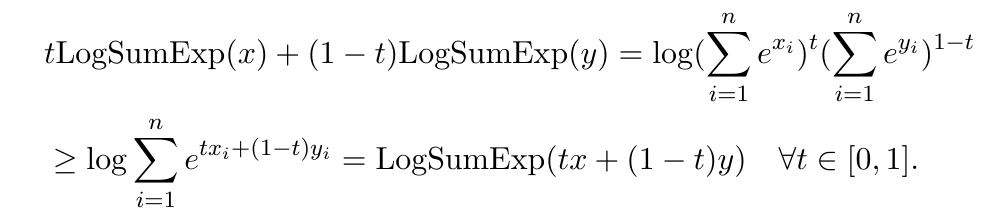
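The claim that softmax is the gradient of LogSumExp can be checked numerically. The sketch below assumes the plain form LogSumExp(x) = log Σ exp(x_i) with no temperature parameter, which may differ from the definition in the image above. The helper names `logsumexp`, `softmax`, and `numerical_gradient` are illustrative and not taken from the paper.

```python
import numpy as np

def logsumexp(x):
    """Numerically stable log(sum(exp(x))); assumes no temperature parameter."""
    m = np.max(x)
    return m + np.log(np.sum(np.exp(x - m)))

def softmax(x):
    """Softmax with the usual max-shift for numerical stability."""
    e = np.exp(x - np.max(x))
    return e / e.sum()

def numerical_gradient(f, x, eps=1e-6):
    """Central finite-difference estimate of the gradient of f at x."""
    grad = np.zeros_like(x)
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = eps
        grad[i] = (f(x + step) - f(x - step)) / (2 * eps)
    return grad

x = np.array([0.5, -1.2, 3.0, 0.0])
grad = numerical_gradient(logsumexp, x)

# The finite-difference gradient should agree with softmax(x) up to small error.
print(np.allclose(grad, softmax(x), atol=1e-6))
```

Subtracting the maximum before exponentiating leaves both functions unchanged mathematically and only guards against overflow for large inputs.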

\ Lemma 1 LogSumExp(x) is convex.

\ Proof

\

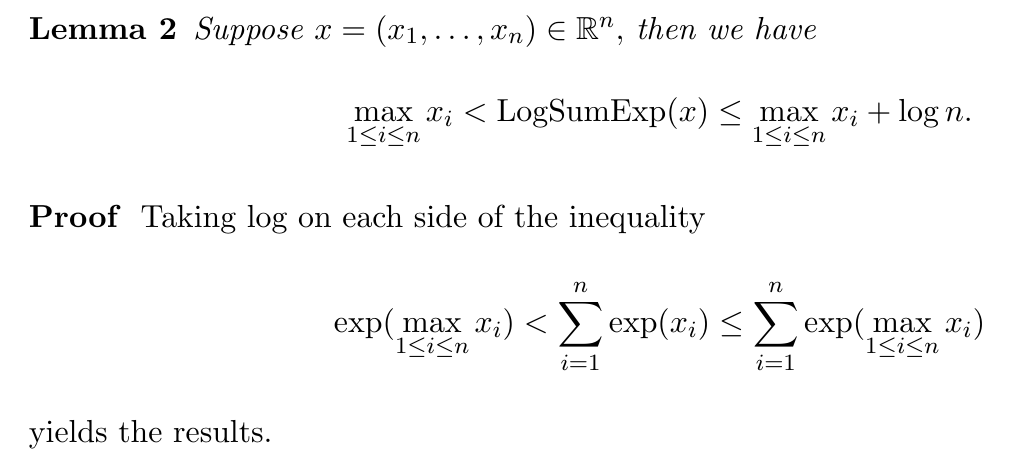
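One standard route to convexity, not necessarily the argument used in the proof above, is that the Hessian of LogSumExp at x equals diag(p) − p pᵀ with p = softmax(x), and this matrix is positive semidefinite. The following sketch checks this on random inputs, again assuming the plain form log Σ exp(x_i); `logsumexp_hessian` is a hypothetical helper name.

```python
import numpy as np

def softmax(x):
    """Softmax with the usual max-shift for numerical stability."""
    e = np.exp(x - np.max(x))
    return e / e.sum()

def logsumexp_hessian(x):
    """Hessian of log(sum(exp(x))): diag(p) - p p^T, where p = softmax(x)."""
    p = softmax(x)
    return np.diag(p) - np.outer(p, p)

rng = np.random.default_rng(0)
min_eigs = []
for _ in range(100):
    x = rng.normal(size=5)
    # eigvalsh is appropriate because the Hessian is symmetric.
    min_eigs.append(np.linalg.eigvalsh(logsumexp_hessian(x)).min())

# The smallest eigenvalue should be zero up to floating-point rounding
# (the all-ones vector lies in the null space), and never meaningfully negative.
smallest = min(min_eigs)
print(smallest > -1e-12)
```

A numerical check like this is only a sanity test on sampled points; it does not replace the proof.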

\

\ Consequently, we have the following smooth approximation for the min function.

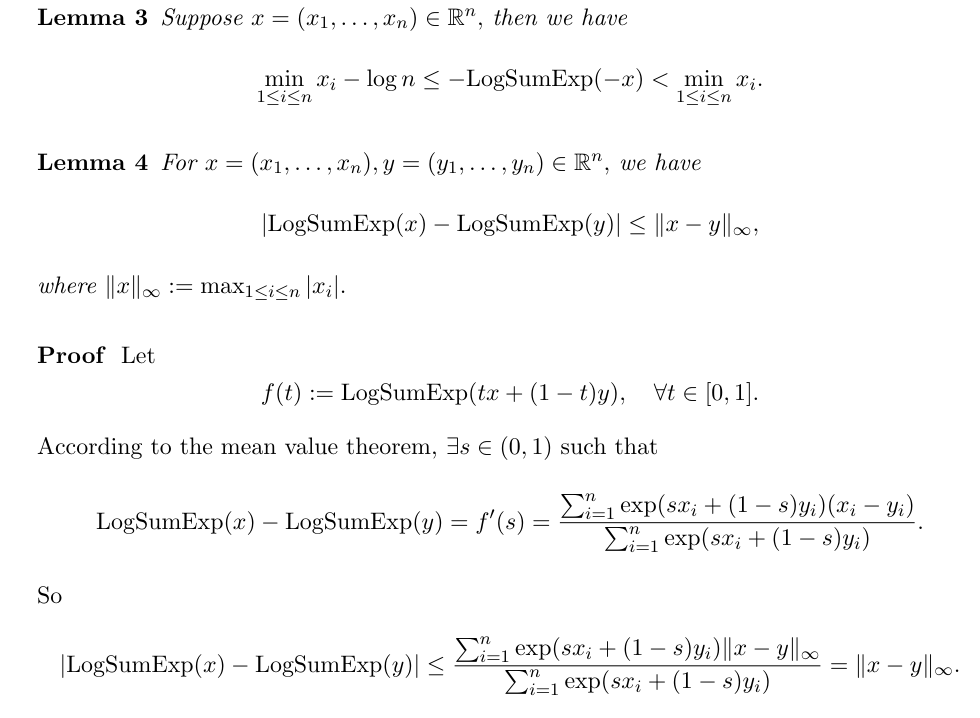

\

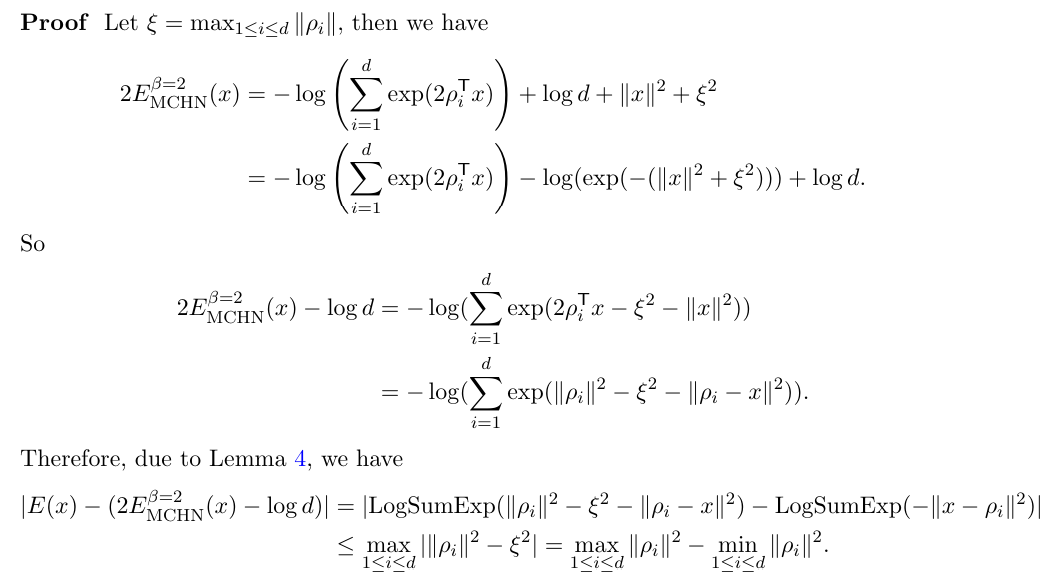
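A common way to obtain a smooth approximation of the min function from LogSumExp is −(1/β)·LogSumExp(−βx), which lies between min(x) − log(n)/β and min(x) for n entries and β > 0. The sketch below assumes this standard form; the exact statement in the images above may use different notation or constants, and `soft_min` and `beta` are illustrative names.

```python
import numpy as np

def soft_min(x, beta):
    """Smooth min: -(1/beta) * log(sum(exp(-beta * x))), computed stably."""
    z = -beta * np.asarray(x, dtype=float)
    m = np.max(z)
    return -(m + np.log(np.sum(np.exp(z - m)))) / beta

x = np.array([2.0, 0.7, 1.5, 0.9])
for beta in (1.0, 10.0, 100.0):
    approx = soft_min(x, beta)
    lower = x.min() - np.log(x.size) / beta
    # The approximation should sit between the lower bound and the true min.
    print(beta, approx, lower <= approx <= x.min())
```

Larger values of `beta` tighten the gap to the true minimum at the cost of a less smooth function.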

B.1 Proof of Proposition 2

\

:::info Authors:

(1) Xueyan Niu, Theory Laboratory, Central Research Institute, 2012 Laboratories, Huawei Technologies Co., Ltd.;

(2) Bo Bai (baibo8@huawei.com);

(3) Lei Deng (deng.lei2@huawei.com);

(4) Wei Han (harvey.hanwei@huawei.com).

:::

:::info This paper is available on arXiv under the CC BY-NC-ND 4.0 DEED license.

:::

\

Reinforcement Technology Advancements | Sciencx (2025-06-24T02:15:03+00:00) LogSumExp Function Properties: Lemmas for Energy Functions. Retrieved from https://www.scien.cx/2025/06/24/logsumexp-function-properties-lemmas-for-energy-functions/
