This content originally appeared on HackerNoon and was authored by Maksim Nechaev

Introduction

Let’s be honest: the journey looks almost the same for everyone. At first, you open ChatGPT and think: “Wow, this is magic!” A couple of lines of text and suddenly you’ve got code, a marketing blurb, or even a recipe suggestion.

Then comes the next stage. The magic slowly turns into chaos. Prompts get longer, workarounds pile up, you start chaining calls together, and one day you realize: “Okay, I’m spending more time explaining to the model what I need than actually doing the task itself.”

The third stage is where things finally get interesting. That’s when you realize the chaos needs taming, and you start thinking like an engineer. Not as someone tinkering with a toy, but as an architect who’s building a system.

So let me ask you: where are you right now on this maturity curve? Still playing around with prompts? Stuck in endless spaghetti chains? Or maybe standing right at the edge of something bigger?

In this article, I want to walk you through a simple maturity model for LLM systems: a journey from quick experiments to full-fledged architecture, where agents work together like a well-oiled team. And yes, at the top level we’ll talk about something I call AAC (Agent Action Chains), an approach that helps finally break free from the chaos. But before we get there, let’s take an honest look at how most projects evolve.

Why We Need a Maturity Model

Right now, LLM systems don’t have a standard growth path. Every developer or team takes their own route, trying out hacks, inventing their own approaches. On paper, that sounds like freedom and creativity. In reality, it often turns into chaos.

Look around: some projects stall at the “just add another prompt and it’ll work” stage. Others build long chains of calls that look nice on a diagram but collapse under the first real load. And then there are the no-code maps that spiral into a nightmare of a hundred blocks connected in every direction. On demo day, it still looks alive. But as soon as it hits production, nothing scales, nothing is tracked, and nobody can even tell where things broke.

These aren’t isolated cases; they’re a pattern. Dozens of teams and startups end up wasting months walking the same trial-and-error path, reinventing the same wheel again and again.

That’s where a maturity model comes in. It gives you a simple map: where you are now, and what needs to change to move forward. Other fields went through this before. Agile maturity models helped companies figure out whether they were truly agile, or just renaming tasks as “sprints.” DevOps maturity models did the same for release processes, showing how automated and repeatable they really were.

LLM systems are at the same turning point today. The hype is massive, but the maturity is almost zero. Without a shared model of progress, we’ll just keep drowning in chaotic prompts and spaghetti systems.

Level 1 - The Script (Prompting Playground)

This is where almost everyone starts. One prompt in ChatGPT or a single API call, and boom, you’ve got an answer. It works “in the moment,” but only as long as you can keep the details in your head.

Signs.

Chaotic queries, no repeatability, unpredictable results. Today it works, tomorrow the model gives you something completely different.


Risks.

Zero control. Nothing here can be integrated into a real product or business process. Everything depends on luck.


When it makes sense.

Quick experiments, prototyping ideas, or those first “wow moments” when you’re just getting a feel for what LLMs can do. But staying here is dangerous: this isn’t a system, it’s just a sandbox.

Level 2 - The Complex Prompt (Prompt Engineering 2.0)

At this stage, people start “casting spells” with text. A single query isn’t enough anymore-so you get long prompts with role instructions, detailed steps, and even mini-scenarios baked in. Sometimes it feels less like writing a prompt and more like coding a tiny program in English.


Signs.

You start to feel the “magic of wording”: change one phrase and the model spits out something completely different. Some people even build prompt libraries, but underneath, it’s still just one big monolith.


Risks.

As complexity grows, the prompt turns into a monster that can’t be maintained. Adding a new step often means rewriting everything. Testing is painful. Scaling this approach? Nearly impossible.


When it makes sense.

Complex prompts are still useful in the right context: quick MVPs, marketing use cases, or research projects. Sometimes they deliver an impressive result “here and now.” But long-term, they don’t hold up: this is a temporary crutch, not a real foundation.

Level 3 - The Linear Chain

The next step after the “giant prompt” is to connect multiple LLM calls into a sequence. Now the system isn’t one massive block of text but a series of steps: extract the data, process it, then generate an answer based on that.

Signs.

At this stage, the first workflows start to appear, whether in LangChain, n8n, or Make.com. People begin thinking in steps, breaking big problems down into sub-tasks. There’s a bit of logic: “first classify, then fetch context, then generate the response.” It’s already much better than one giant monolith of a prompt, but it’s still strictly linear, with no branching or flexibility.
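To make “first classify, then fetch context, then generate” concrete, here’s a minimal sketch of such a linear chain. `fake_llm`, `KNOWLEDGE`, and the step functions are hypothetical stand-ins (no real model or framework is called), so it runs offline; in a real workflow each step would wrap an actual LLM or retrieval call.

```python
from typing import Callable

# Hypothetical stand-in for a real model call, so the chain runs offline.
def fake_llm(prompt: str) -> str:
    if prompt.startswith("Classify"):
        return "billing" if "invoice" in prompt.lower() else "general"
    if prompt.startswith("Answer"):
        # Echo the retrieved context back as a "drafted" reply.
        return "Draft reply based on: " + prompt.split("Context:", 1)[1].strip()
    return ""

# Hypothetical knowledge base used by the "fetch context" step.
KNOWLEDGE = {
    "billing": "Invoices are sent on the 1st of each month.",
    "general": "Support is available 9am to 5pm.",
}

def classify(message: str, llm: Callable[[str], str]) -> str:
    return llm(f"Classify this message: {message}")

def fetch_context(label: str) -> str:
    # An unknown label silently yields an empty context.
    return KNOWLEDGE.get(label, "")

def generate(message: str, context: str, llm: Callable[[str], str]) -> str:
    return llm(f"Answer the message {message!r}. Context: {context}")

def run_chain(message: str, llm: Callable[[str], str] = fake_llm) -> str:
    # Strictly linear: each step feeds the next, with no branching or recovery.
    label = classify(message, llm)
    context = fetch_context(label)
    return generate(message, context, llm)

print(run_chain("Where is my invoice?"))
# prints: Draft reply based on: Invoices are sent on the 1st of each month.
```

Notice that `run_chain` has no branches and no error handling: if `classify` raises, nothing downstream runs, and an unexpected label quietly produces an empty context. That rigidity is what defines this level.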


Risks.

The biggest issue is rigidity. These chains are carved in stone: change one step, and you often end up rewriting everything else. Adding new scenarios is painful, and errors tend to break the whole chain at once. It’s like the early days of microservices without an orchestrator: technically modular, but still held together with duct tape.

When it makes sense.

This level works fine for small bots or simple automations: parsing emails, generating summaries, drafting quick responses. It’s a good starting point. But in any real product, it quickly becomes a limitation. And this is usually the moment teams realize: without architecture, you won’t get much further.


Level 4 - Spaghetti (Ad-hoc Systems)

This is where the real pain begins. When a simple linear chain no longer works, developers start piling on “branches” and “if-else” conditions. Temporary memory appears: sometimes it’s just an array in code, sometimes a custom storage hack, sometimes a variable passed clumsily between nodes. The logic grows messy, and the system stops being linear.

Signs.

Workflows in no-code platforms start to look like spiderwebs: dozens of nodes, tangled connections, loops everywhere. Code-based projects aren’t much better: logic scattered across prompts and helper functions, with critical conditions hidden right inside the text of the prompts themselves. From the outside, it’s unreadable, and nearly impossible to explain to someone new.
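Here’s what “critical conditions hidden inside the prompt” can look like in code: a deliberately bad, hypothetical sketch, not a pattern to copy. `fake_llm` is a stub that obeys the rule buried in the prompt text, and `memory` is the kind of ad-hoc module-level list described above.

```python
# Deliberately tangled sketch of a Level 4 system; do not copy this pattern.
# All names are hypothetical.
memory: list[str] = []  # ad-hoc "memory": module-level state anyone can mutate


def fake_llm(prompt: str) -> str:
    # Stub model that "obeys" the rule buried in the prompt text.
    # It sees the whole prompt, history included, so one old "!" keeps triggering it.
    return "ESCALATE" if "!" in prompt else "OK"


def handle(message: str) -> str:
    memory.append(message)
    # A critical business rule lives inside a prompt string, invisible to tests and code review.
    prompt = (
        "You are a support bot. If the user sounds angry, reply only with ESCALATE. "
        f"History: {memory}. Message: {message}"
    )
    if fake_llm(prompt) == "ESCALATE":
        # Routing depends on a magic string the prompt asked for, plus hidden shared state.
        return "manager" if len(memory) > 2 else "senior_agent"
    return "bot"
```

Call `handle("Hi")`, then `handle("This is broken!")`, then `handle("Thanks, all good")`: the last, perfectly calm message gets routed to `"manager"`, because the earlier angry message still sits in `memory` and leaks into every later prompt. Nothing in the code says so; you only find out by tracing state across calls.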


Risks.

These systems are a nightmare to maintain. When something breaks, figuring out where it broke is almost impossible. Errors are hidden, debugging is nonexistent, and everything depends on that one person who “knows how it works.” Scaling or handing it off to another team? Forget it. This is a dead-end branch.

When it makes sense.

Honestly? Never. Spaghetti systems usually emerge as a byproduct of experimentation, but staying here kills growth. Many teams hit this stage and finally realize: the solution isn’t “just one more hack,” it’s real architecture. And that’s exactly what sets the stage for the next level of maturity.

Level 5 - Orchestrator + Roles (System Design Thinking)

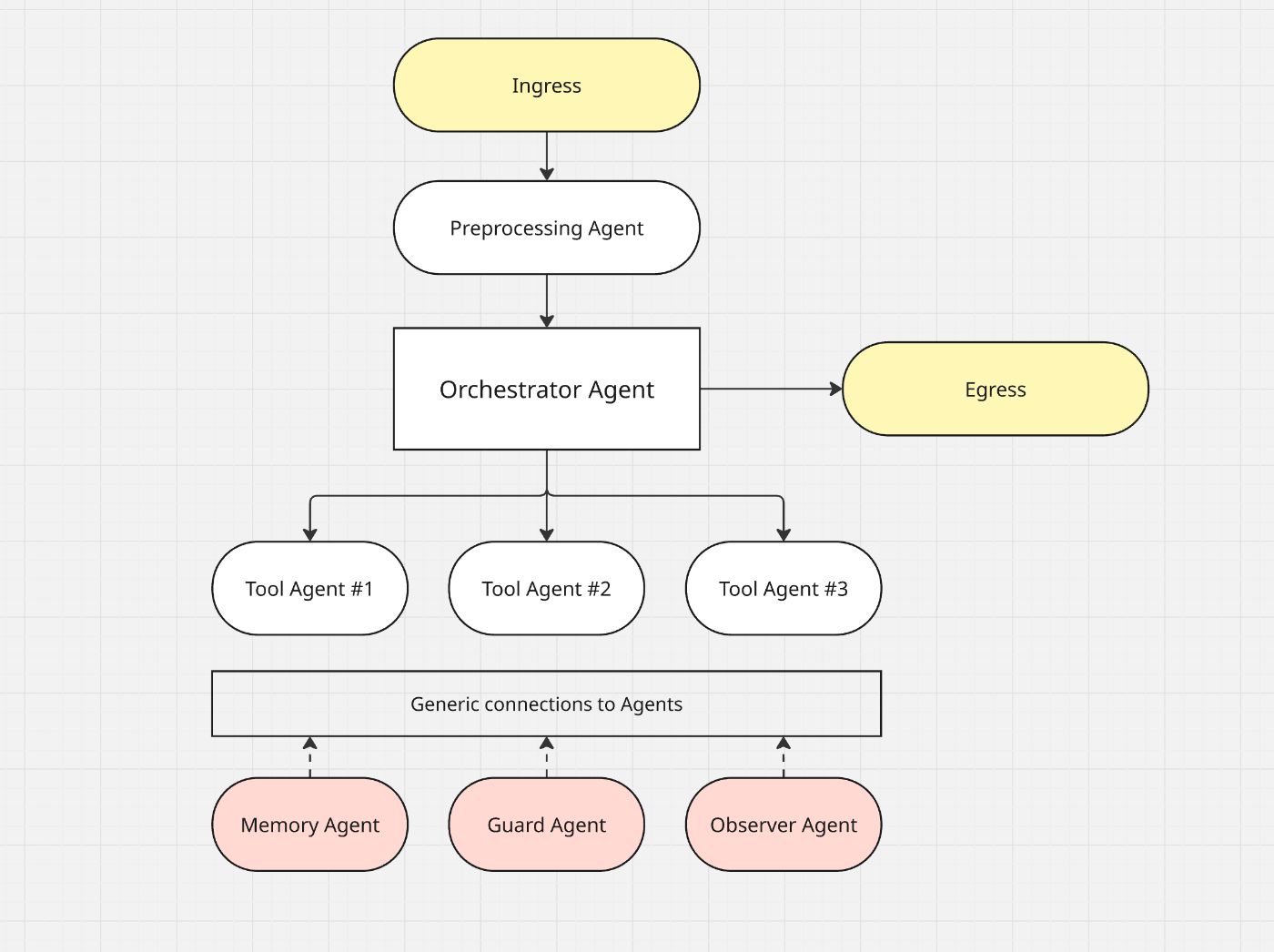

This is the stage where chaos finally turns into a system. Instead of one endless chain or a spaghetti mess of branches, you get a structured design with clearly defined roles. Each part of the system knows its job:

Orchestrator - the brain that decides who does what and in what order.

Specialists - narrow experts, each handling a specific task: classification, response generation, data retrieval.

Memory - makes sure the system isn’t living like a goldfish, giving it access to past context and knowledge.

Guard - catches errors and ensures resilience, so one failure doesn’t bring everything down.

Observer - monitors execution, collects logs, and provides visibility.

Egress - polishes the final output and delivers it to the next stage.
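Here’s a minimal Python sketch of how those roles might fit together. It’s an illustration under simplifying assumptions, not the author’s AAC implementation: every class and function name is hypothetical, and the specialists are plain functions standing in for LLM calls, with `general` deliberately failing so the Guard has something to catch.

```python
import json
from dataclasses import dataclass, field
from typing import Callable

# Minimal sketch of the Level 5 roles. Every name here is hypothetical, and the
# specialists are plain functions standing in for LLM calls.


@dataclass
class Memory:
    """Memory: past context, so the system isn't a goldfish."""
    history: list[str] = field(default_factory=list)


@dataclass
class Observer:
    """Observer: records each step for visibility and debugging."""
    log: list[str] = field(default_factory=list)

    def record(self, event: str) -> None:
        self.log.append(event)


Specialist = Callable[[str, Memory], str]


def guarded(step: Specialist, fallback: str, observer: Observer) -> Specialist:
    """Guard: a failing specialist degrades to a fallback instead of crashing the run."""
    def safe(text: str, memory: Memory) -> str:
        try:
            return step(text, memory)
        except Exception as exc:
            observer.record(f"guard caught {type(exc).__name__}")
            return fallback
    return safe


def egress(label: str, reply: str) -> str:
    """Egress: polish the reply and deliver a stable JSON payload to the next stage."""
    return json.dumps({"label": label, "reply": reply.strip()})


class Orchestrator:
    """Orchestrator: decides which specialist handles a request, and in what order."""

    def __init__(self, classify: Specialist, specialists: dict[str, Specialist]):
        self.memory = Memory()
        self.observer = Observer()
        self.classify = classify
        self.specialists = specialists

    def handle(self, text: str) -> str:
        label = self.classify(text, self.memory)
        self.observer.record(f"classified as {label}")
        # Route to the matching specialist, falling back to "general" for unknown labels.
        specialist = self.specialists.get(label, self.specialists["general"])
        reply = guarded(specialist, "Sorry, please try again later.", self.observer)(text, self.memory)
        self.memory.history.append(text)
        return egress(label, reply)


# Hypothetical specialists.
def classify(text: str, memory: Memory) -> str:
    return "billing" if "invoice" in text.lower() else "general"


def billing(text: str, memory: Memory) -> str:
    return "Invoices are sent on the 1st of each month."


def general(text: str, memory: Memory) -> str:
    raise TimeoutError("model call timed out")  # simulates a failing specialist
```

With `Orchestrator(classify, {"billing": billing, "general": general})`, a message mentioning an invoice is routed to `billing`, while anything else hits the failing `general` specialist; the guard returns the fallback reply, and the observer log records both the routing decision and the caught `TimeoutError`. Swapping a specialist means passing a different function, with no change to the orchestrator.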


Signs.

At this level, you see formal contracts (often JSON) connecting roles. You can test components individually, swap or extend them without breaking the entire system. The fragile prototype evolves into a modular architecture you can actually build on.
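A “formal contract” can be as simple as a typed structure that every role’s output must be parsed into. The sketch below assumes a hypothetical classifier role that returns JSON with a `label` and a `confidence`; the label set and field names are illustrative, not part of any standard.

```python
import json
from dataclasses import dataclass

# Hypothetical contract between a classifier role and the orchestrator.
# Any classifier (prompt-based, fine-tuned, or rule-based) can be swapped in
# as long as its output parses into this shape.
ALLOWED_LABELS = {"billing", "technical", "general"}


@dataclass(frozen=True)
class Classification:
    label: str
    confidence: float

    @classmethod
    def from_json(cls, raw: str) -> "Classification":
        """Parse a role's raw output; raise ValueError on any contract violation."""
        data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
        if not isinstance(data, dict):
            raise ValueError("expected a JSON object")
        label = data.get("label")
        confidence = data.get("confidence")
        if not isinstance(label, str) or label not in ALLOWED_LABELS:
            raise ValueError(f"unknown label: {label!r}")
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
            raise ValueError(f"confidence must be a number, got {confidence!r}")
        if not 0.0 <= confidence <= 1.0:
            raise ValueError(f"confidence out of range [0, 1]: {confidence}")
        return cls(label=label, confidence=float(confidence))
```

Because the orchestrator only ever sees a validated `Classification`, you can unit-test a classifier by feeding its recorded outputs to `Classification.from_json`, or replace a prompt-based classifier with a rule-based one, without touching the rest of the system. Malformed output fails loudly with a `ValueError` at the boundary instead of leaking downstream.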


Risks.

Yes, this requires more upfront effort. You have to think architecturally, design roles, and resist the urge to just “throw in another prompt.” But that investment pays back quickly if your system is meant for real users and scale.


When it makes sense.

Production environments, business processes, and real-world products. Anything beyond experiments will eventually need to evolve into this stage. It’s the only place where scalability, maintainability, and predictability become possible.

And this is exactly where AAC (Agent Action Chains) comes in: the architecture I developed to put this top level of maturity into practice. AAC formalizes the roles, adds discipline, and enables that crucial leap from “prompt hacking” to true engineering.

How to Use This Model

The real value of a maturity model is that it works like a mirror. It lets you take an honest look at your project, see where you are, and figure out what needs to change to move forward.


For self-assessment.

If you’re building something solo or in a small team, the model is basically a quick checklist. Ask yourself: “Are we still living in prompt-land? Have we built simple chains? Or are we already drowning in spaghetti?” That snapshot helps you spot tomorrow’s bottlenecks today, and prepare for them in advance.

For teams.

The model becomes a shared language. A product manager, an engineer, and an analyst don’t need to get lost in technical details; they can just name the level. “We’re at the chain stage, but we need to move past spaghetti ASAP,” and everyone knows exactly what that means. Less friction, more productive conversations.

For investors and partners.

The model also acts as a signal of team maturity. A startup still working with raw prompts can deliver flashy demos, sure, but it’s a risky bet. A team already thinking in terms of roles, orchestration, and observability, though? That’s a team building something that can actually live in production and scale.

The evolution of LLM systems follows the same arc as any technology: first comes the magic, then the chaos, and finally the engineering. We start with simple scripts, get stuck in complex prompts, try chaining things together, drown in spaghetti… and only then realize: it’s time to build architecture.

A maturity model helps us face that reality: to see where we are, and to know where we need to go next. For some, it means letting go of “monster-prompts.” For others, it means escaping the trap of chaotic chains. And for a few, it’s the call to step up to the next level, where orchestrators, roles, and real system design finally emerge.

That’s where AAC (Agent Action Chains) enters the picture: an architecture that formalizes this top level of maturity. But AAC isn’t magic. It’s the result of walking the path. You only get there by going through the earlier stages.

:::tip 👉 Here’s the AAC system design pattern if you want to dive deeper.

:::


Maksim Nechaev | Sciencx (2025-08-26T02:00:08+00:00) The 5 Stages of LLM Systems: From Playground Hacks to Real Architecture. Retrieved from https://www.scien.cx/2025/08/26/the-5-stages-of-llm-systems-from-playground-hacks-to-real-architecture/
