This content originally appeared on Level Up Coding - Medium and was authored by Vuong Ngo

I spent three hours instrumenting Claude Code’s network traffic to understand something that wasn’t in the documentation: how do skills get invoked automatically while slash commands require explicit triggers? And why do sub-agents seem to operate in a completely different context than the main conversation?

The answer turned out to be cleaner than I expected. Claude Code uses five distinct prompt augmentation mechanisms, each injecting content at different points in the API request structure. Understanding these injection points changed how I build AI-powered development workflows — and exposed some critical security implications that aren’t obvious from the user-facing documentation.

What you’ll learn

- The exact API injection points for CLAUDE.md, output styles, slash commands, skills, and sub-agents

- How to choose the right mechanism based on scope, activation pattern, and security requirements

- Why skills are unsuitable for production (and when MCP is the right alternative)

- Performance implications of tool-based vs. direct prompt injection

- Common debugging pitfalls when multiple mechanisms conflict

All network traces and analysis data are available at this repo. This analysis is based on reverse engineering the actual network traffic — not official documentation or speculation.

Why This Matters: The Architecture Behind AI Development Tools

Most developers treat Claude Code as a black box. You create a CLAUDE.md file and it “just works.” You install a skill and it “magically” activates. But when things don’t work — when a skill fails to trigger, or Claude ignores your CLAUDE.md instructions, or sub-agents lose context — you’re stuck guessing.

I wanted to understand the actual mechanics. Not what these features do (the docs explain that), but how they work: What gets sent to the API? When? In what format? How does the model decide whether to invoke a skill vs. ignore it?

The methodology was straightforward: modify Claude Code to log all API requests/responses, run five isolated conversation sessions (one per mechanism), and trace the exact JSON payloads. The results revealed a surprisingly clean architecture with minimal overlap between mechanisms.

The Five Mechanisms at a Glance

The architecture operates on three distinct layers:

System-level: Output Styles (modifies Claude’s identity/behavior)

Message-level: CLAUDE.md, Slash Commands, Skills (adds context/instructions)

Conversation-level: Sub-Agents (delegates to isolated conversations)

Understanding these layers is critical because they have different performance characteristics, security implications, and failure modes. Let’s examine each mechanism in detail.

Mechanism 1: CLAUDE.md — The Invisible Project Context

CLAUDE.md is the most subtle mechanism because it requires zero configuration beyond creating the file. If `/path/to/CLAUDE.md` exists in your project root, Claude Code automatically reads it and injects the content into every user message as a `<system-reminder>`. This happens transparently — you won’t see it in the Claude Code UI, but it’s in every API request.

{

"messages": [{

"role": "user",

"content": [{

"type": "text",

"text": "<system-reminder>\nAs you answer the user's questions, you can use the following context:\n# claudeMd\nCodebase and user instructions are shown below. Be sure to adhere to these instructions. IMPORTANT: These instructions OVERRIDE any default behavior and you MUST follow them exactly as written.\n\nContents of /path/to/CLAUDE.md (project instructions, checked into the codebase):\n\n[your CLAUDE.md content]\n</system-reminder>"

}]

}]

}Key characteristics:

Automatic and persistent: Once CLAUDE.md exists, it’s injected into every request. No opt-in, no activation command.

User message placement: Unlike system prompts (which define Claude’s identity), CLAUDE.md appears in the user message array. This means it’s project-specific *context*, not identity modification.

High-priority preamble: The “IMPORTANT: These instructions OVERRIDE any default behavior” signals high weight, but it’s still user context, not system-level instruction.

Minimal performance cost: Typical CLAUDE.md files are 1–5KB, negligible overhead per request.

When to use CLAUDE.md:

✅ Team-wide coding standards and conventions (committed to git)

✅ Architecture decisions and design patterns to follow

✅ Domain knowledge and business logic context

✅ References to other project documentation (via `@file.md` syntax)

When NOT to use CLAUDE.md:

❌ Temporary instructions (use direct prompts instead)

❌ User-specific preferences (use output styles)

❌ Workflow automation (use slash commands)

Mechanism 2: Output Styles — Session-Level Behavior Mutation

When you run `/output-style software-architect`, Claude Code appends a text block to the `system` array in the API request. This isn’t a one-time injection — it persists and gets included in every subsequent request until you explicitly change it or end the session.

{

"system": [

{"type": "text", "text": "You are Claude Code, Anthropic's official CLI…"},

{"type": "text", "text": "# Output Style: software-architect\n\n[style instructions including tone, format, technical depth requirements…]"}

],

"messages": […]

}Key characteristics:

System-level mutation: This modifies Claude’s identity/behavior, not just context. It’s fundamentally different from CLAUDE.md which adds user context.

Session-scoped persistence: Once set, the output style persists across all messages until explicitly changed. This is session state, not per-message injection.

Low performance cost: ~2KB per request, negligible overhead.

When to use output styles:

✅ Session-wide response format changes (concise, verbose, structured)

✅ Technical depth adjustments (explain like senior dev vs. junior dev)

✅ Tone modifications (formal, casual, educational)

When NOT to use output styles:

❌ Single-turn modifications (use slash commands instead)

❌ Project-wide standards (use CLAUDE.md)

❌ Temporary format changes (just say “respond in JSON” directly)

Pro tip:

Run `/output-style` with no arguments to see the current active style. Make this a habit when debugging unexpected behavior.

Mechanism 3: Slash Commands — Deterministic Prompt Templates

Slash commands are the simplest mechanism: pure string substitution with zero intelligence. When you run `/review @file.js`, Claude Code reads `.claude/commands/review.md`, replaces any `{arg1}` placeholders with your arguments, and injects the result into the current user message.

{

"messages": [{

"role": "user",

"content": [{

"type": "text",

"text": "<command-message>review is running…</command-message>\n\n[content from review.md with {arg1} replaced by @file.js]\n\nARGUMENTS: @file.js"

}]

}]

}Key characteristics:

Single-turn injection: The command content is injected into the current message only. It doesn’t persist to the next turn.

Deterministic activation: Commands only trigger when explicitly invoked with `/command-name`. No model decision-making.

No intelligence: This is pure template substitution. The model doesn’t “decide” whether to use the command — you explicitly trigger it.

Minimal latency: Direct injection, no tool calling overhead.

When to use slash commands:

✅ Repeatable workflows you want explicit control over (code review, deployment checklists)

✅ Multi-step procedures you run frequently

✅ Standard prompts with variable inputs (file paths, feature names)

When NOT to use slash commands:

❌ Tasks you want Claude to invoke automatically (use skills instead)

❌ One-off prompts (just type them directly)

❌ Session-wide behavior (use output styles)

Decision rule:

If you’re running the same slash command in every message, you’re using the wrong mechanism — switch to an output style. Slash commands are for occasional, explicit invocation.

Mechanism 4: Skills — Model-Invoked Capabilities

This is where things get interesting. Skills are invoked autonomously by Claude based on semantic matching between your request and the skill description in `SKILL.md` frontmatter. When there’s a match, Claude calls the `Skill` tool, which injects the skill content into the conversation as a `tool_result`.

// Step 1: Assistant decides to use skill

{

"role": "assistant",

"content": [{

"type": "tool_use",

"name": "Skill",

"input": {"command": "slack-gif-creator"}

}]

}

// Step 2: Skill content returned

{

"role": "user",

"content": [{

"type": "tool_result",

"tool_use_id": "…",

"content": "[complete SKILL.md content injected here]"

}]

}

Key characteristics:

Model-decided activation: Unlike slash commands, Claude autonomously decides whether to invoke the skill based on semantic matching.

Tool calling overhead: This is a two-step process (model decision → tool result), adding significant latency. I measured 3–5 seconds for skill-based responses vs. 1–2 seconds for direct prompts.

Direct code execution: Skills can run arbitrary bash commands. This is the critical security issue.

Naive discovery: Skill matching is simple substring/semantic matching on the description field. If your description doesn’t contain keywords that match the user’s request, the skill won’t activate.

The security problem:

Skills execute code directly via bash. There’s no structured I/O, no schema validation, no access control. Compare this to MCP (Model Context Protocol):

Skills: Unstructured I/O → Direct code execution → Requires sandbox/isolation

MCP: Structured JSON I/O → Schema validation → Access-controlled tools

For anything beyond personal prototyping, skills are unsuitable. If you’re building tools that touch sensitive data or run in multi-user environments, use MCP instead.

When to use skills:

✅ Personal prototyping where you control the environment

✅ Domain-specific automation you want Claude to invoke automatically

✅ Workflows that benefit from autonomous activation

When to use MCP instead:

✅ Production deployments

✅ Multi-user environments

✅ Sensitive data access

✅ Reusable tools across multiple AI applications

✅ Anything requiring proper security boundaries

Debugging skill activation failures:

Skills fail to activate more often than you’d expect. The matching mechanism is naive — if your `description` field doesn’t contain specific keywords that match the user’s request, the skill won’t trigger.

Fix: Make skill descriptions extremely explicit. Include trigger phrases, use cases, and specific keywords. There’s no matching score or debug mode to see why a skill didn’t activate.

Mechanism 5: Sub-Agents — Isolated Conversation Delegation

Sub-agents are architecturally the most interesting because they spawn a completely separate conversation. When Claude delegates a task, it calls the `Task` tool with a `subagent_type` parameter (e.g., “Explore”, “software-architect”) and a prompt. The sub-agent then runs in an isolated conversation with its own system prompt, executes autonomously through multiple steps, and returns results to the main conversation.

// Part 1: Main conversation delegates

{

"role": "assistant",

"content": [{

"type": "tool_use",

"name": "Task",

"input": {

"subagent_type": "Explore",

"prompt": "Analyze the authentication flow in the codebase and identify all entry points…"

}

}]

}

// Part 2: Sub-agent runs in isolated conversation

{

"system": "[Explore agent system prompt with specialized instructions]",

"messages": [{

"role": "user",

"content": "Analyze the authentication flow in the codebase…"

}]

}

// Part 3: Results returned to main conversation

{

"role": "user",

"content": [{

"type": "tool_result",

"tool_use_id": "…",

"content": "[sub-agent findings and analysis]"

}]

}

Key characteristics:

Complete context isolation: Sub-agents don’t see your main conversation history at all. This is both a feature (clean delegation) and a limitation (can’t reference prior discussion).

Separate system prompt: Each sub-agent type has its own specialized system prompt optimized for its task (exploration, code review, research, etc.).

Autonomous multi-step execution: Sub-agents run through multiple tool calls and reasoning steps before returning results.

Double API call cost: You’re running two separate conversations — main thread + sub-agent. This has performance and cost implications at scale.

CLAUDE.md still injected: Despite context isolation, sub-agents DO receive the CLAUDE.md content. Project-level standards are preserved even in delegated tasks.

When to use sub-agents:

✅ Multi-step autonomous tasks where exact steps aren’t known upfront

✅ Codebase analysis and exploration

✅ Security audits that need to examine multiple files

✅ Research tasks that require following multiple paths

When NOT to use sub-agents:

❌ Simple queries that can be answered directly

❌ Tasks requiring main conversation context (sub-agents are isolated)

❌ Performance-critical paths (double API call overhead)

The context bridging problem:

Sub-agents can’t access your main conversation history. If the sub-agent needs context from your prior discussion, you must explicitly include it in the delegation prompt.

Example workaround:

Instead of: "Analyze the auth flow"

Be explicit: "Analyze the auth flow. We're using OAuth 2.0 with PKCE. Focus on the token refresh mechanism we discussed earlier - specifically how expired tokens are handled in the background service."

Performance implications:

I measured sub-agent responses taking 2–3x longer than direct responses due to:

1. Main conversation → delegation decision

2. Sub-agent conversation (multiple turns)

3. Results aggregation and return

Use sub-agents when the value of autonomous multi-step execution justifies the latency cost.

Decision Matrix: Choosing the Right Mechanism

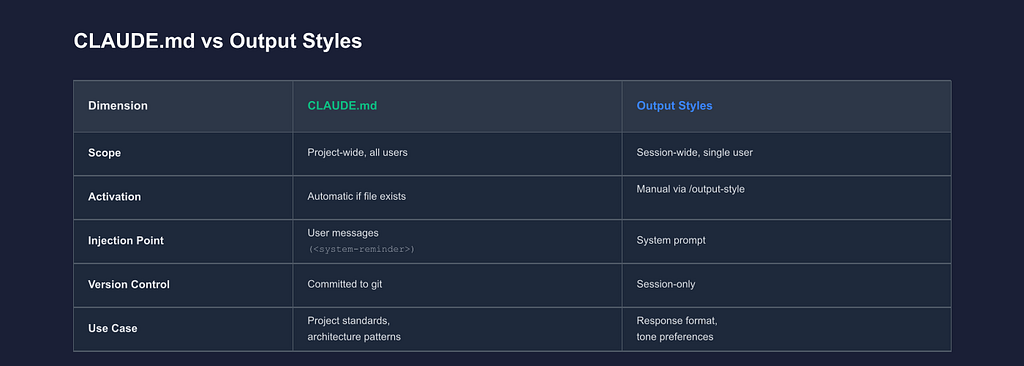

CLAUDE.md vs. Output Styles

Rule of thumb:

- Team-wide standards? → CLAUDE.md (committed to repo)

- Personal preferences? → Output styles (session-only)

- Project context that applies to everyone? → CLAUDE.md

- Temporary behavior change for current session? → Output styles

Skills vs. Slash Commands vs. MCP

Rule of thumb:

- Need Claude to decide when to invoke? → Skills or MCP

- Need explicit control over when it runs? → Slash commands

- Need security and production-readiness? → MCP only

- Personal prototyping? → Skills or slash commands

- Multi-user or sensitive data? → Never use skills, use MCP

Output Styles vs. Sub-Agents

Rule of thumb:

- Changing how Claude formats or structures responses? → Output style

- Delegating a complex multi-step task? → Sub-agent

- Need conversation context preserved? → Output style

- Need clean isolated execution? → Sub-agent

Critical Technical Observations from Network Analysis

1. Tool Call Overhead is Significant

Skills and sub-agents both use the tool calling mechanism, which adds measurable roundtrip latency:

Direct prompt flow: User message → LLM response (1 API call)

Tool-based flow: User message → LLM tool decision → Tool execution → Tool result → Final response (2+ API calls)

Measured latency:

- Direct prompts: 1–2 seconds

- Skills/sub-agents: 3–5 seconds

This 2–3x latency penalty is justified when you need autonomous decision-making or multi-step execution. It’s not justified for simple tasks that could be handled with direct prompts.

Design implication: Don’t default to skills or sub-agents for everything. Use them when the value of autonomous invocation or isolated execution justifies the performance cost.

2. Skill Discovery is Surprisingly Naive

Skill activation is based on simple substring and semantic matching against the `description` field in your SKILL.md frontmatter. There’s no sophisticated intent matching, no confidence scoring, no debugging output to see why a skill didn’t activate.

Common failure mode:

You create a skill with description: “Helps with database optimization”

User asks: “Can you improve the performance of my queries?”

Skill doesn’t activate because there’s insufficient keyword overlap.

Fix: Make skill descriptions extremely explicit with multiple trigger phrases:

- -

description: Database query optimization, SQL performance tuning, slow query analysis. Use this when users mention: slow queries, database performance, query optimization, SQL tuning, index recommendations, execution plan analysis.

- -

Include specific keywords, use cases, and trigger phrases. Treat the description as a keyword matching target, not a human-readable summary.

3. CLAUDE.md Gets Injected Everywhere (Including Sub-Agents)

CLAUDE.md content appears in every user message as a `<system-reminder>`, which means it’s included in:

- Main conversation messages

- Sub-agent conversation messages

- Every turn, every request

Implication: A 3KB CLAUDE.md file adds:

- ~15KB per 5-turn conversation (3KB × 5 messages)

- ~30KB for conversations involving sub-agents (main + sub-agent conversation)

Design implication: Keep CLAUDE.md concise. Use the `@file.md` reference syntax to pull in additional documentation only when needed, rather than embedding everything directly.

Example:

# CLAUDE.md

## Architecture Overview

[Brief 2–3 paragraph summary]

For detailed architecture documentation, see @docs/architecture.md

For API patterns, see @docs/api-patterns.md

4. Sub-Agent Context Isolation Has No Workarounds

Sub-agents run in completely isolated conversations with zero access to main conversation history. There’s no “context bridging” feature, no selective sharing, no way to automatically pass relevant context.

The only workaround: Be extremely explicit in delegation prompts.

Bad delegation:

"Analyze the auth flow"

Good delegation:

"Analyze the authentication flow in our Next.js application. Context: We use OAuth 2.0 with PKCE for client authentication and JWT tokens for API access. The issue we're investigating is token refresh failures after 24 hours. Focus on: 1) Token storage mechanisms, 2) Refresh token rotation logic, 3) Background token refresh in the API client service."

Include all necessary context explicitly. Sub-agents can’t “remember” your earlier conversation.

Practical Implementation Recommendations

For Individual Developers

1. Start with slash commands for repeatable tasks

They’re deterministic, debuggable, and have no activation ambiguity. Build your workflow library in `.claude/commands/`.

2. Use output styles sparingly

Session-wide behavior changes have cognitive overhead. It’s easy to forget what style is active and waste time debugging. Prefer explicit per-message instructions unless you truly need session-wide changes.

3. Prototype with skills, move to MCP for anything serious

Skills are great for personal experimentation, but the security model (direct code execution) makes them unsuitable for anything beyond local prototyping.

4. Reserve sub-agents for genuinely complex tasks

The double API call overhead isn’t justified for simple queries. Use sub-agents when you need multi-step autonomous execution and can’t predict the exact steps upfront.

For Teams

1. Use CLAUDE.md for team-wide standards

Commit it to git alongside `.claude/commands/`. This ensures everyone gets the same project context and coding standards.

2. Standardize workflows with slash commands

Keep them in version control. Document them. Make them part of your team’s development process.

3. Avoid skills in shared environments

The sandbox requirement and direct code execution make skills unsuitable for multi-user setups. Build MCP servers instead — they’re reusable across applications and have proper security boundaries.

4. Document your CLAUDE.md clearly

Invisible context causes debugging nightmares. Include a comment at the top explaining what standards/context are defined and when they were last updated.

5. Monitor sub-agent usage patterns

The double API call has cost implications at scale. Track which tasks genuinely benefit from sub-agent delegation vs. which could be handled more efficiently with direct prompts.

For Production Systems

1. Never use skills for anything sensitive

Direct code execution with no schema validation or access control is unacceptable in production environments.

2. MCP should be the default for external integrations

Structured I/O, schema validation, access control, and reusability across AI applications make MCP the only viable choice for production.

3. Implement cost tracking for sub-agents

Each sub-agent invocation is effectively 2+ API calls. At scale, this has real cost implications. Track usage and optimize high-frequency paths.

4. Version control all prompt augmentation files

CLAUDE.md, `.claude/commands/`, `.claude/output-styles/` should all be in git with proper review processes.

Conclusion: Orthogonal Mechanisms, Not Competing Alternatives

The critical insight from reverse engineering Claude Code: **these five mechanisms are orthogonal, not competing.**

They operate on different architectural layers:

- System-level: Output styles modify Claude’s identity and behavior

- Message-level: CLAUDE.md, slash commands, and skills add context and instructions

- Conversation-level: Sub-agents delegate entire tasks to isolated conversations

Use them together:

- CLAUDE.md provides project context and standards

- Output styles control response format and style

- Slash commands automate repeatable workflows

- Skills add autonomous capabilities

- Sub-agents handle complex multi-step tasks

The architecture is cleaner than I expected. The separation of concerns is well-designed. But there are critical gotchas:

1. CLAUDE.md is invisible

Always check it when debugging unexpected behavior. Invisible context is the hardest to debug.

2. Security model matters

Skills execute code directly. For anything beyond personal tooling, use MCP. The convenience of skills doesn’t justify the security risk in production.

3. Performance characteristics differ significantly

Tool-based mechanisms (skills, sub-agents) have 2–3x latency overhead compared to direct injection (slash commands, CLAUDE.md). Choose based on whether autonomous invocation justifies the cost.

4. Context isolation in sub-agents is absolute

There’s no way to automatically share main conversation context. Be explicit in delegation prompts.

Claude Code’s prompt augmentation architecture is powerful when you understand the injection points, activation patterns, and trade-offs. Use each mechanism for its intended purpose, and avoid the temptation to force-fit one mechanism for all use cases.

Related Resources

- Complete network traces and analysis: github.com/AgiFlow/claude-code-prompt-analysis and toolkit to build analysis tool

- Claude Code documentation: docs.claude.com/claude-code

- Model Context Protocol specification: modelcontextprotocol.io

- Anthropic skills repository: github.com/anthropics/skills

About this research: This analysis is based on instrumenting Claude Code’s network traffic and reverse engineering the actual API payloads sent to Anthropic’s API. Implementation details may change in future versions.

Want to discuss these findings or share your own insights? I’m @Agimon_AI on Twitter and happy to dive deeper into any of these mechanisms.

Reverse Engineering Claude Code: How Skills different from Agents, Commands and Styles was originally published in Level Up Coding on Medium, where people are continuing the conversation by highlighting and responding to this story.

This content originally appeared on Level Up Coding - Medium and was authored by Vuong Ngo

Vuong Ngo | Sciencx (2025-10-20T03:36:36+00:00) Reverse Engineering Claude Code: How Skills different from Agents, Commands and Styles. Retrieved from https://www.scien.cx/2025/10/20/reverse-engineering-claude-code-how-skills-different-from-agents-commands-and-styles/

Please log in to upload a file.

There are no updates yet.

Click the Upload button above to add an update.