This content originally appeared on HackerNoon and was authored by The Tech Reckoning is Upon Us!

Table of Links

4.1 Multi-hop Reasoning Performance

4.2 Reasoning with Distractors

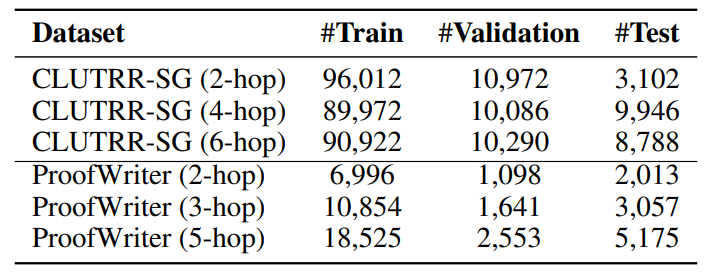

A. Dataset

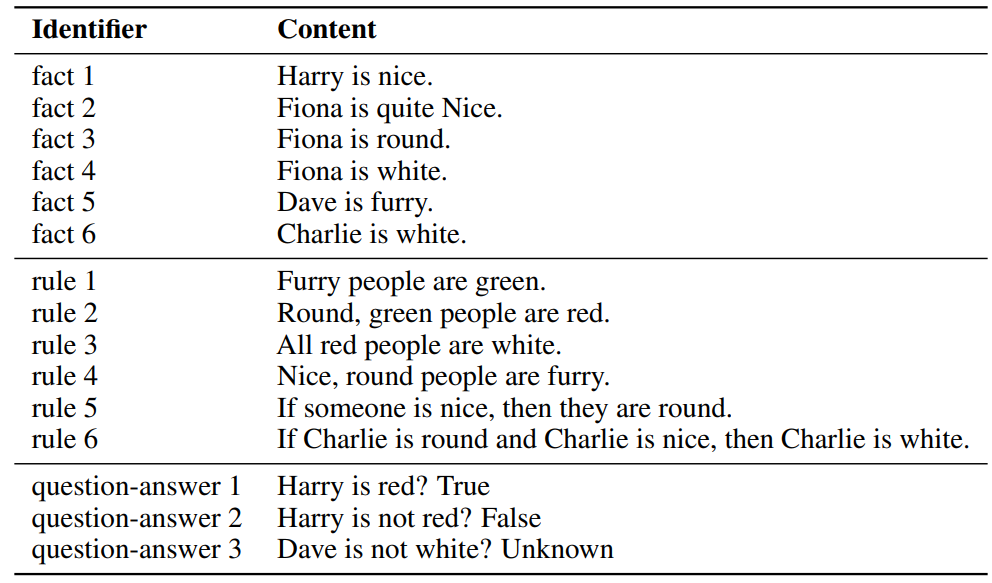

B. In-context Reasoning with Distractors

E. Experiments with Large Language Models

C Implementation Details

We select GPT-2-base [59] as the model for our method and all the baselines. We use the version implemented by the Hugging Face Transformers library [78]. All the experiments for RECKONING are conducted on a cluster with NVIDIA A100 (40GB) GPUs. All the baseline experiments are conducted on a local machine with an NVIDIA RTX 3090 GPU (24GB).

Fine-tuned In-context Reasoning. We set the train batch size to 16 and train the model for 6 epochs with early stopping based on the validation label accuracy. We set the learning rate to 3e-5 and use the AdamW optimizer with ϵ set to 1e-8. We validate the model on the development set after every epoch and select the best checkpoint using the validation accuracy as the metric.
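The checkpoint-selection rule described above can be sketched in plain Python. This is only an illustration under stated assumptions: the names `FineTuneConfig` and `select_best_epoch` are hypothetical, the `patience` value is an assumption (the text does not say how early stopping is triggered), and the accuracy values are made up for the example rather than taken from the paper.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class FineTuneConfig:
    # Values stated in the text for the fine-tuned in-context reasoning baseline.
    train_batch_size: int = 16
    max_epochs: int = 6
    learning_rate: float = 3e-5
    adam_epsilon: float = 1e-8
    # Assumption: the text does not give a patience value for early stopping.
    patience: int = 2


def select_best_epoch(
    val_accuracies: Sequence[float], cfg: FineTuneConfig
) -> Optional[int]:
    """Return the 1-based epoch with the highest validation accuracy.

    Scanning stops once `cfg.patience` consecutive epochs fail to improve
    on the best accuracy seen so far, or after `cfg.max_epochs` epochs.
    Returns None if no epoch was evaluated.
    """
    best_epoch: Optional[int] = None
    best_acc = float("-inf")
    stale = 0
    for epoch, acc in enumerate(val_accuracies[: cfg.max_epochs], start=1):
        if acc > best_acc:
            best_epoch, best_acc, stale = epoch, acc, 0
        else:
            stale += 1
            if stale >= cfg.patience:
                break  # early stopping: no improvement for `patience` epochs
    return best_epoch


# Made-up accuracies for illustration only; these are not results from the paper.
toy_history = [0.61, 0.70, 0.74, 0.73, 0.72, 0.80]
best = select_best_epoch(toy_history, FineTuneConfig())
```

With a patience of 2, the scan in this toy run stops after epoch 5, so the later value at epoch 6 is never seen. A different patience would change which checkpoint is kept, which is why the value is flagged as an assumption.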

RECKONING. In the inner loop, we generally perform 4 gradient steps for lower-hop questions (2, 3, and 4-hop) and 5 gradient steps for higher-hop questions (5 and 6-hop). We select AdamW [46] as the optimizer for the inner loop since the main task is language modeling. The inner-loop learning rate is initialized to 3e-5 before training, and the algorithm dynamically learns a set of optimal learning rates by the time it converges. In our experiments and analysis, we only report the results from RECKONING with a multi-task objective since its performance is better than that of the single-task objective. In the outer loop, we also use AdamW with a learning rate of 3e-5. For both optimizers, we set ϵ to 1e-8. We set the train batch size to 2 due to memory limitations. We apply gradient accumulation and set the number of accumulation steps to 2. We train the model for 6 epochs with early stopping. For each epoch, we validate the model twice: once in the middle and once at the end. We select the best model checkpoint based on the validation label accuracy.
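The RECKONING settings above can be collected into a small configuration sketch. The names `ReckoningConfig`, `inner_steps_for`, and `validation_steps` are hypothetical and not taken from the authors' code. The hop-to-step mapping follows the "generally" stated rule and may not cover every run, and the effective batch size assumes a single device.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ReckoningConfig:
    # Values stated in the text.
    inner_learning_rate_init: float = 3e-5  # starting point; later learned
    outer_learning_rate: float = 3e-5
    adam_epsilon: float = 1e-8              # used by both optimizers
    train_batch_size: int = 2
    grad_accumulation_steps: int = 2
    max_epochs: int = 6

    @property
    def effective_batch_size(self) -> int:
        # Examples contributing to one outer-loop optimizer update,
        # assuming a single device (an assumption, not stated in the text).
        return self.train_batch_size * self.grad_accumulation_steps


def inner_steps_for(hops: int) -> int:
    """Number of inner-loop gradient steps for a question with `hops` hops."""
    if 2 <= hops <= 4:
        return 4
    if 5 <= hops <= 6:
        return 5
    raise ValueError(f"the text only covers 2 to 6 hops, got {hops}")


def validation_steps(steps_per_epoch: int) -> List[int]:
    """1-based steps within an epoch at which to validate: middle and end."""
    if steps_per_epoch < 2:
        raise ValueError("need at least 2 steps to validate twice per epoch")
    return [steps_per_epoch // 2, steps_per_epoch]


cfg = ReckoningConfig()
```

Here `cfg.effective_batch_size` works out to 2 × 2 = 4 examples per optimizer update, which is how gradient accumulation partly offsets the small per-step batch imposed by memory limits.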

:::info Authors:

(1) Zeming Chen, EPFL (zeming.chen@epfl.ch);

(2) Gail Weiss, EPFL (antoine.bosselut@epfl.ch);

(3) Eric Mitchell, Stanford University (eric.mitchell@cs.stanford.edu);

(4) Asli Celikyilmaz, Meta AI Research (aslic@meta.com);

(5) Antoine Bosselut, EPFL (antoine.bosselut@epfl.ch).

:::

:::info This paper is available on arXiv under the CC BY 4.0 DEED license.

:::

The Tech Reckoning is Upon Us! | Sciencx (2025-10-29T15:29:18+00:00) Technical Setup for RECKONING: Inner Loop Gradient Steps, Learning Rates, and Hardware Specification. Retrieved from https://www.scien.cx/2025/10/29/technical-setup-for-reckoning-inner-loop-gradient-steps-learning-rates-and-hardware-specification/
